uipath uipath-saiv1 practice test

UiPath Certified Professional Specialized AI Professional v1.0

Question 1

When is it recommended to use Main-ActionCenter in the context of the Document Understanding Process?

- A. When implementing an attended process.

- B. When testing locally or implementing an attended process.

- C. When testing locally.

- D. When testing locally or implementing an unattended process.

Answer:

B

Explanation:

Main-ActionCenter is a workflow that allows you to create and manage Document Understanding

actions in Action Center, which is a web application that enables human intervention in automation

processes. You can use Main-ActionCenter when you want to test your Document Understanding

process locally, or when you want to implement an attended process that requires human validation

or classification of documents. Main-ActionCenter is not recommended for unattended processes, as

they do not involve human interaction.

References:

Action Center - Document Understanding activities

Document Understanding Process 22.10 now in General Availability!

How to Start a UiPath Document Understanding Project

Question 2

What components are part of the Document Understanding Process template?

- A. Import, Classification, Text Extractor, and Data Validation.

- B. Load Document, Categorization, Data Extraction, and Validation.

- C. Load Taxonomy, Digitization, Classification, Data Extraction, Data Validation, and Export.

- D. Load Taxonomy, Digitization, Categorization, Data Validation, and Export.

Answer:

C

Explanation:

The Document Understanding Process template is a fully functional UiPath Studio project template

based on a document processing flowchart. It provides logging, exception handling, retry

mechanisms, and all the methods that should be used in a Document Understanding workflow, out

of the box. The template has an architecture decoupled from other connected automations and

supports both attended and unattended processes with human-in-the-loop validation via Action

Center.

The template consists of the following components:

Load Taxonomy: This component loads the taxonomy file that defines the document types and fields to be extracted. The taxonomy file can be created using the Taxonomy Manager in Studio or the Data Manager web application.

Digitization: This component converts the input document into a digital format that can be processed by the subsequent components. It uses the Digitize Document activity to perform OCR (optical character recognition) on the document and obtain a Document Object Model (DOM).

Classification: This component determines the document type of the input document using the Classify Document Scope activity. It can use either a Keyword Based Classifier or a Machine Learning Classifier, depending on the configuration. The classification result is stored in a ClassificationResult variable.

Data Extraction: This component extracts the relevant data from the input document using the Data Extraction Scope activity. It can use different extractors for different document types, such as the Form Extractor, the Machine Learning Extractor, the Regex Based Extractor, or the Intelligent Form Extractor. The extraction result is stored in an ExtractionResult variable.

Data Validation: This component allows human validation and correction of the extracted data using the Present Validation Station activity. It opens the Validation Station window where the user can review and edit the extracted data, as well as provide feedback for retraining the classifiers and extractors. The validated data is stored in a DocumentValidationResult variable.

Export: This component exports the validated data to a desired output, such as an Excel file, a database, or a downstream process. It uses the Export Extraction Results activity to convert the DocumentValidationResult variable into a DataSet variable (a collection of DataTables), which can then be manipulated or written using other activities.
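As a rough illustration of how the components chain together, the following Python sketch models the flow as an ordered list of stage names with a shared context passed from stage to stage. It is not UiPath code: `run_pipeline`, the `handlers` mapping, and the context dictionary are hypothetical names introduced only for this example.

```python
from typing import Callable, Dict, List

# Stage names taken from the component list above, in execution order.
STAGES: List[str] = [
    "Load Taxonomy",
    "Digitization",
    "Classification",
    "Data Extraction",
    "Data Validation",
    "Export",
]


def run_pipeline(document: str, handlers: Dict[str, Callable[[dict], dict]]) -> dict:
    """Pass a shared context through each stage handler, in order."""
    context = {"document": document, "completed": []}
    for stage in STAGES:
        # Hypothetical default: a stage without a handler leaves the context unchanged.
        handler = handlers.get(stage, lambda ctx: ctx)
        context = handler(context)
        context["completed"].append(stage)
    return context


result = run_pipeline("invoice.pdf", {})
print(result["completed"])
```

Keeping the order in a single list makes it easy to see that validation always runs after extraction and before export.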

References:

Document Understanding Process: Studio Template

Document Understanding Process - New Studio Template

Document Understanding Process Template in UiPath Studio

Question 3

What is the Document Object Model (DOM) in the context of Document Understanding?

- A. The DOM is a JSON object containing information such as name, content type, text length, number of pages, page rotation, detected language, content, and coordinates for the words identified in the file.

- B. The DOM is a built-in artificial intelligence system that automatically understands and interprets the content and the type of documents, eliminating the need for manual data extraction.

- C. The DOM is a feature that allows you to convert physical documents into virtual objects that can be manipulated using programming code.

- D. The DOM is a graphical user interface (GUI) tool in UiPath Document Understanding that provides visual representations of documents, making it easier for users to navigate and interact with the content.

Answer:

A

Explanation:

The Document Object Model (DOM) is a data representation of the objects that comprise the structure and content of a document on the web [1]. In the context of Document Understanding, the DOM is a JSON object that is generated by the Digitize Document activity, which uses an OCR engine (for example, UiPath Document OCR) to extract the text and layout information from the input document [2]. The DOM contains the following properties for each document [3]:

- name: The name of the document file.

- contentType: The MIME type of the document file, such as application/pdf or image/jpeg.

- textLength: The number of characters in the document text.

- pages: An array of objects, each representing a page in the document. Each page object has the following properties:

  - pageNumber: The number of the page, starting from 1.

  - rotation: The angle of rotation of the page, in degrees. A positive value indicates clockwise rotation, and a negative value indicates counterclockwise rotation.

  - language: The language code of the page, such as en or fr.

  - content: An array of objects, each representing a word or a line in the page. Each content object has the following properties:

    - type: The type of the content, either word or line.

    - text: The text of the content.

    - boundingBox: An array of four numbers, representing the coordinates of the top-left and bottom-right corners of the content, in the format [x1, y1, x2, y2]. The coordinates are relative to the page, with the origin at the top-left corner, and the unit is pixel.

    - confidence: A number between 0 and 1, indicating the confidence level of the OCR engine in recognizing the content.

The DOM can be used as an input for other activities in the Document Understanding framework,

such as Classify Document Scope, Data Extraction Scope, or Export Extraction Results. The DOM can

also be manipulated using programming code, such as JavaScript or Python, to perform custom

operations on the document data.
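To make the property list more concrete, here is a minimal Python sketch that parses a DOM-like JSON object shaped as described in this explanation and lists the words whose OCR confidence falls below a threshold. The sample values and the helper `low_confidence_words` are invented for illustration, and the exact schema produced by Digitize Document may differ from this description.

```python
import json

# Illustrative DOM-like object following the property list in this explanation.
# The values are made up; a real DOM produced by Digitize Document may differ.
dom_json = """
{
  "name": "invoice.pdf",
  "contentType": "application/pdf",
  "textLength": 11,
  "pages": [
    {
      "pageNumber": 1,
      "rotation": 0,
      "language": "en",
      "content": [
        {"type": "word", "text": "Total", "boundingBox": [10, 20, 60, 35], "confidence": 0.98},
        {"type": "word", "text": "42.00", "boundingBox": [70, 20, 120, 35], "confidence": 0.61}
      ]
    }
  ]
}
"""


def low_confidence_words(dom: dict, threshold: float) -> list:
    """Return (page number, text) pairs for words below the confidence threshold."""
    flagged = []
    for page in dom["pages"]:
        for item in page["content"]:
            if item["type"] == "word" and item["confidence"] < threshold:
                flagged.append((page["pageNumber"], item["text"]))
    return flagged


dom = json.loads(dom_json)
flagged = low_confidence_words(dom, threshold=0.8)
print(flagged)
```

A check like this is one way to decide which words deserve a second look before extraction results are trusted.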

References:

1: Introduction to the DOM - Web APIs | MDN

2: Digitize Document

3: Document Object Model

Question 4

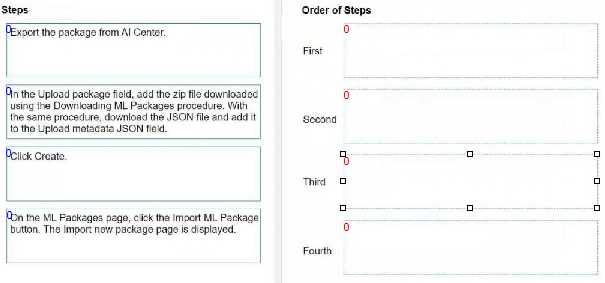

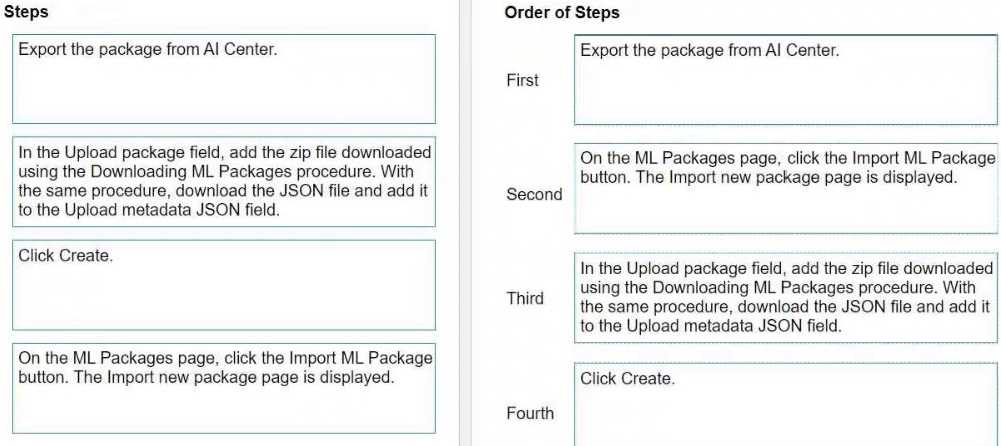

DRAG DROP

What is the correct order of uploading a package exported from UiPath AI Center?

Instructions: Drag the steps found on the "Left" and drop them on the "Right" in the correct order.

Answer:

Explanation:

Export the package from AI Center. This is the first step where you prepare the package to be moved.

On the ML Packages page, click the Import ML Package button. This step is where you start the

process of importing the package you've exported.

On the Upload package field, add the zip file downloaded using the Downloading ML Packages

procedure. After starting the import process, you will upload the actual package.

Click Create. This is the final step where you finalize the uploading process of your ML package.

Please proceed with these steps in the UiPath AI Center to upload your exported package correctly.

Question 5

For an analytics use case, what are the recommended minimum model performance requirements in UiPath Communications Mining?

- A. Model Ratings of "Good" or better and individual performance factors rated as "Good" or better.

- B. Model Ratings of "Good" and individual performance factors rated as "Excellent".

- C. Model Ratings of "Excellent" and individual performance factors rated as "Good" or better.

- D. Model Ratings of "Excellent" and individual performance factors rated as "Excellent".

Answer:

A

Question 6

DRAG DROP

What is the correct order of recommended steps when introducing new labels into a mature taxonomy?

Instructions: Drag the steps found on the "Left" and drop them on the "Right" in the correct order.

Answer:

Explanation:

Create the new label by assigning it at least once. This is the initial step to introduce a new category

or classification within your data taxonomy.

Search for instances in the reviewed data where the new label should have been assigned, and apply

the label accordingly. This step is crucial for maintaining consistency across your data set.

Use 'Missed Label' to find all additional missing examples in the reviewed data. This action helps in identifying and rectifying any instances that may have been overlooked during the initial review.

Check validation to ensure the label is performing as expected, and follow recommended actions if

further training is required. Validation is key to assess the accuracy and performance of the new label

within the system.

Question 7

What do entities represent in UiPath Communications Mining?

- A. Structured data points.

- B. Concepts, themes, and intents.

- C. Thread properties.

- D. Metadata properties.

Answer:

A

Explanation:

Entities are additional elements of structured data which can be extracted from within the verbatims. Entities include data such as monetary quantities, dates, currency codes, organisations, people, email addresses, URLs, as well as many other industry-specific categories. Concepts, themes, and intents are captured by labels rather than entities; entities supply the structured data points that can be used for filtering, searching, and analyzing the verbatims.

References:

Communications Mining - Entities

Communications Mining - Using Entities in your Application

Communications Mining - Configuring Entities

Question 8

A Document Understanding Process is in production. According to best practices, what are the locations recommended for exporting the result files?

- A. Network Attached Storage and Orchestrator Bucket.

- B. Locally, Temp Folder, Network Attached Storage, and Orchestrator Bucket.

- C. Orchestrator Bucket and Queue Item.

- D. On a VM, Orchestrator Bucket, and Network Attached Storage.

Answer:

A

Explanation:

In a Document Understanding Process, particularly when it is in production, it is crucial to manage

output data securely and efficiently. Utilizing Network Attached Storage (NAS) and Orchestrator

Buckets are recommended practices for exporting result files for several reasons:

Network Attached Storage (NAS): NAS is a dedicated file storage that allows multiple users and client

devices to retrieve data from centralized disk capacity. Using NAS in a production environment for

storing result files is beneficial due to its accessibility, capacity, and security features. It facilitates

easy access and sharing of files within a network while maintaining data security.

Orchestrator Bucket: Orchestrator Buckets in UiPath are used for storing files that can be easily

accessed by the robots. This is particularly useful in a production environment because it provides a

centralized, cloud-based storage solution that is scalable, secure, and accessible from anywhere. This

aligns with the best practices of maintaining high availability and security for business-critical data.

The other options (B, C, and D) include locations that might not be as secure or efficient for a

production environment. For example, storing files locally or in a temp folder can pose security risks

and is not scalable for large or distributed systems. Similarly, storing directly on a VM might not be

the most efficient or secure method, especially when dealing with sensitive data.

Question 9

While training a UiPath Communications Mining model, the Search feature was used to pin a certain

label on a few communications. After retraining, the new model version starts to predict the tagged

label but infrequently and with low confidence.

According to best practices, what would be the correct next step to improve the model's predictions

for the label, in the "Explore" phase of training?

- A. Use the "Rebalance" training mode to pin the label to more communications.

- B. Use the "Teach" training mode to pin the label to more communications.

- C. Use the "Low confidence" training mode to pin the label to more communications.

- D. Use the "Search" feature to pin the label to more communications.

Answer:

B

Explanation:

According to the UiPath documentation, the ‘Teach’ training mode is used to improve the model’s

predictions for a specific label by pinning it to more communications that match the label’s criteria.

This helps the model learn from more examples and increase its confidence and accuracy. The ‘Teach’

mode also allows you to unpin the label from communications that do not match it, which helps the

model avoid false positives. The other training modes are not as effective for this purpose, as they

either focus on different aspects of the model performance or do not provide enough feedback to

the model.

References:

Model training and labelling best practice

Overview of the model training process

Model Training FAQs

Question 10

DRAG DROP

What is the correct execution order of the Document Understanding template stages?

Instructions: Drag the stages found on the "Left" and drop them on the "Right" in the correct order.

Answer:

Explanation:

The correct execution order of the Document Understanding template stages is:

Taxonomy

Digitize

Classify

Extract

Extraction Validation

Export

Comprehensive and Detailed Explanation: The Document Understanding template stages are based

on a document processing flowchart that follows these steps:

First, you need to define the Taxonomy of the document types and fields that you want to process and extract information from. This is done using the Taxonomy Manager in UiPath Studio [1].

Next, you need to Digitize the input documents, which can be in various formats such as PDF, image, or text. This is done using the Digitize Document activity, which converts the documents into a machine-readable format and performs OCR if needed [2].

Then, you need to Classify the digitized documents into the predefined document types in your taxonomy. This is done using the Classify Document Scope activity, which can use various classifiers such as Keyword Based Classifier, Intelligent Keyword Classifier, or Machine Learning Classifier [3].

After that, you need to Extract the relevant information from the classified documents based on the

fields in your taxonomy. This is done using the Data Extraction Scope activity, which can use various

extractors such as Regex Based Extractor, Machine Learning Extractor, or Form Extractor.

Next, you need to perform Extraction Validation to review and correct the extracted information. This is done using the Present Validation Station activity for attended validation, or by creating a document validation action in Action Center for human-in-the-loop validation in unattended scenarios.

Finally, you need to Export the validated information to the desired output location, such as a file, a database, or a queue. This is done using the Export Extraction Results activity, which returns the results as a DataSet that can then be written out with other activities.

References:

1: UiPath Studio - Taxonomy Manager

2: UiPath Activities - Digitize Document

3: UiPath Activities - Classify Document Scope

Question 11

Which of the following are unstructured documents?

- A. Invoices, receipts, purchase orders, and medical bills.

- B. Banking forms, tax forms, surveys, and identity cards.

- C. Contracts, emails, banking forms, and tax forms.

- D. Contracts, agreements, and emails.

Answer:

D

Explanation:

Unstructured documents are those that do not have a predefined format or layout, and therefore

cannot be easily processed by traditional methods. They often contain free-form text, images, tables,

and other elements that vary from document to document. Examples of unstructured documents

include contracts, agreements, emails, letters, reports, articles, and so on.

UiPath Document Understanding is a solution that enables the processing of unstructured documents using AI-powered models and RPA workflows [1].

The other options are not correct because they are examples of structured or semi-structured

documents. Structured documents are those that have a fixed format or layout, and can be easily

processed by rules-based methods. They often contain fields, labels, and values that are consistent

across documents. Examples of structured documents include banking forms, tax forms, surveys,

identity cards, and so on. Semi-structured documents are those that have some elements of

structure, but also contain variations or unstructured content. They often require a combination of

rules-based and AI-powered methods to process.

Examples of semi-structured documents include invoices, receipts, purchase orders, medical bills, and so on [2].

References:

1: Unstructured Data Analysis with AI, RPA, and OCR | UiPath

2: Structured, semi structured, unstructured sample documents for UiPath document understanding - Studio - UiPath Community Forum

Question 12

When creating a training dataset, what is the recommended number of samples for the Classification fields?

- A. 5-10 document samples from each class.

- B. 10-20 document samples from each class.

- C. 20-50 document samples from each class.

- D. 50-200 document samples from each class.

Answer:

C

Explanation:

According to the UiPath documentation, the recommended number of samples for the classification

fields depends on the number of document types and layouts that you want to classify. The more

document types and layouts you have, the more samples you need to cover the diversity of your

data.

However, a general guideline is to have 20-50 document samples from each class, as this would provide enough data for the classifiers to learn from [1][2]. A large number of samples per layout is not mandatory, as the classifiers can generalize from other layouts as well [3].
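A quick way to apply this guideline when assembling a dataset is to count samples per class and flag any class that falls short. The sketch below assumes the labels are available as a plain list of class names; `underrepresented_classes` and the sample data are hypothetical and not part of any UiPath tooling.

```python
from collections import Counter

# Lower bound of the 20-50 samples-per-class guideline quoted above.
MIN_SAMPLES_PER_CLASS = 20


def underrepresented_classes(labels, minimum=MIN_SAMPLES_PER_CLASS):
    """Return {class name: sample count} for classes below the minimum."""
    counts = Counter(labels)
    return {cls: n for cls, n in counts.items() if n < minimum}


# Made-up dataset: one class label per document sample.
labels = ["invoice"] * 25 + ["receipt"] * 12 + ["contract"] * 20
short = underrepresented_classes(labels)
print(short)
```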

References:

1: Document Classification Training Overview

2: Document Classification Training Related Activities

3: Training High Performing Models

Question 13

What is one of the purposes of the Config file in the UiPath Document Understanding Template?

- A. It contains the configuration settings for the UiPath Robot and Orchestrator integration.

- B. It stores the API keys and authentication credentials for accessing external services.

- C. It specifies the output file path and format for the processed documents.

- D. It defines the input document types and formats supported by the template.

Answer:

B

Explanation:

The Config file in the UiPath Document Understanding Template is an Excel workbook (Config.xlsx) that contains various

parameters and values that control the behavior and functionality of the template. One of the

purposes of the Config file is to store the API keys and authentication credentials for accessing

external services, such as the Document Understanding API, the Computer Vision API, the Form

Recognizer API, and the Text Analysis API. These services are used by the template to perform

document classification, data extraction, and data validation tasks. The Config file also allows the

user to customize the template according to their needs, such as enabling or disabling human-in-the-

loop validation, setting the retry mechanism, defining the custom success logic, and specifying the

taxonomy of document types.

References:

Document Understanding Process: Studio Template

Automation Suite - Document Understanding configuration file

Question 14

Which of the following file types are supported for the DocumentPath property in the Classify Document Scope activity?

- A. .bmp, .pdf, .jpe, .psd

- B. .png, .gif, .jpe, .tiff

- C. .pdf, .jpeg, .raw, .tif

- D. .jpe, .eps, .jpg, .tiff

Answer:

B

Explanation:

According to the UiPath documentation portal [1], the DocumentPath property in the Classify Document Scope activity accepts the path to the document you want to validate. This field supports

only strings and String variables. The supported file types for this property field are .png, .gif, .jpe,

.jpg, .jpeg, .tiff, .tif, .bmp, and .pdf. Therefore, option B is the correct answer, as it contains four of the

supported file types. Option A is incorrect, as .psd is not a supported file type. Option C is incorrect,

as .raw is not a supported file type. Option D is incorrect, as .eps is not a supported file type.
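The elimination above can be reproduced mechanically. The following Python sketch hard-codes the supported extensions listed in this explanation and checks each answer option against them; the helper names `is_supported` and `option_is_valid` are made up for this example.

```python
from pathlib import Path

# Supported extensions as listed in the explanation above.
SUPPORTED_EXTENSIONS = {
    ".png", ".gif", ".jpe", ".jpg", ".jpeg", ".tiff", ".tif", ".bmp", ".pdf",
}


def is_supported(document_path: str) -> bool:
    """Check a file path by its extension, ignoring case."""
    return Path(document_path).suffix.lower() in SUPPORTED_EXTENSIONS


def option_is_valid(extensions) -> bool:
    """An answer option is valid only if every extension in it is supported."""
    return all(ext.lower() in SUPPORTED_EXTENSIONS for ext in extensions)


options = {
    "A": [".bmp", ".pdf", ".jpe", ".psd"],
    "B": [".png", ".gif", ".jpe", ".tiff"],
    "C": [".pdf", ".jpeg", ".raw", ".tif"],
    "D": [".jpe", ".eps", ".jpg", ".tiff"],
}
valid = [name for name, exts in options.items() if option_is_valid(exts)]
print(valid)
```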

References:

1: Activities - Classify Document Scope - UiPath Documentation Portal

Question 15

When processing a document type that comes in a high variety of layouts, what is the recommended data extraction methodology?

- A. Model-based data extraction.

- B. Hybrid data extraction.

- C. Manual data extraction.

- D. Rule-based data extraction.

Answer:

B

Explanation:

Based on the classification of documents, there are two common types of data extraction methodologies: rule-based data extraction and model-based data extraction [1]. Rule-based data extraction targets structured documents, while model-based data extraction is used to process semi-structured and unstructured documents [1]. However, neither of these methods alone can handle the high variety of layouts that some document types may have.

Therefore, a hybrid data extraction approach is recommended, which combines the strengths of both methods and allows for more flexibility and accuracy [2][3]. A hybrid data extraction approach can use one or more extractors, such as RegEx Based Extractor, Form Extractor, Intelligent Form Extractor, Machine Learning Extractor, or FlexiCapture Extractor, depending on the document type and the fields of interest [3].

The Data Extraction Scope activity in UiPath enables the configuration and execution of a hybrid data extraction methodology, by allowing the user to customize which fields are requested from each extractor, what is the minimum confidence threshold for a given data point extracted by each extractor, what is the taxonomy mapping, at field level, between the project taxonomy and the extractor’s internal taxonomy (if any), and how to implement “fall-back” rules for data extraction [2].
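The fall-back idea can be sketched in a few lines of Python: extractors are tried in priority order, and a field is filled by the first extractor whose value meets that extractor's own minimum confidence. This is only a conceptual model of the behaviour described above, not the Data Extraction Scope implementation; both extractor functions, their return values, and `hybrid_extract` are hypothetical stand-ins.

```python
from typing import Callable, Dict, List, Optional, Tuple

# An extractor returns {field: (value, confidence)}. Both extractors are stand-ins.
Extractor = Callable[[str], Dict[str, Tuple[str, float]]]


def regex_like_extractor(text: str) -> Dict[str, Tuple[str, float]]:
    # Rule-based stand-in: confident, but only knows one field.
    return {"invoice_number": ("INV-001", 0.95)}


def ml_like_extractor(text: str) -> Dict[str, Tuple[str, float]]:
    # Model-based stand-in: covers more fields with varying confidence.
    return {"invoice_number": ("INV-0O1", 0.55), "total": ("42.00", 0.90)}


def hybrid_extract(
    text: str,
    chain: List[Tuple[Extractor, float]],
    fields: List[str],
) -> Dict[str, Optional[str]]:
    """Try extractors in order; keep the first value meeting that extractor's threshold."""
    results: Dict[str, Optional[str]] = {field: None for field in fields}
    for extractor, min_confidence in chain:
        extracted = extractor(text)
        for field in fields:
            if results[field] is None and field in extracted:
                value, confidence = extracted[field]
                if confidence >= min_confidence:
                    results[field] = value
    return results


# Priority order: rule-based first, model-based as the fall-back.
chain = [(regex_like_extractor, 0.9), (ml_like_extractor, 0.8)]
values = hybrid_extract("sample document text", chain, ["invoice_number", "total", "due_date"])
print(values)
```

Fields that no extractor can supply with enough confidence stay empty, which is where human validation would typically take over.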

References:

1: Document Processing with Improved Data Extraction

2: Data Extraction Overview

3: Data Extraction