teradata tdvan5 practice test

Vantage Administration

Question 1

There is a call center application that repetitively sends tactical queries to Vantage These queries use

a few small tables that are joined on the primary index column.

The following maps are defined in the system:

* TD_GlobalMap

* TD_Map1

* TD_DataDictionaryMap

* TD_1AmpSparseMap_1Node

What can be done to optimize these queries?

- A. Assign these tables to TD_1AmpSparseMap_1Node using different colocation names for each table.

- B. Assign these tables to TD_1AmpSparseMap_1Node using the same colocation name for every table

- C. Assign these tables to TD_Map1 using the same colocation name for every table.

- D. Assign these tables to TD_Map1 using different colocation names for each table.

Answer:

B

Explanation:

Example:

TD_1AmpSparseMap_1Node is a sparse map that assigns the data to a single AMP (or a few AMPs),

and using the same colocation name ensures that the tables are collocated on the same AMP. This

helps in efficient joining because no data redistribution is required between AMPs when tables are

joined on the primary index.

Using different colocation names for each table (options A and D) would place the tables on different

AMPs, leading to less efficient joins since data would need to be shuffled between AMPs.

TD_Map1 is a predefined map in the system but does not specifically optimize small, frequently

accessed tables in the same way that TD_1AmpSparseMap_1Node does, which is more suitable for

these scenarios.

Thus, using the same colocation name within the TD_1AmpSparseMap_1Node ensures that the joins

are AMP-local, optimizing the repetitive tactical queries.

Question 2

Which workload management technique should the Administrator use to reject all QueryGrid

queries during a pre-defined critical batch state?

A.

An exception that includes the following Foreign Servers:

TD_SYSFNLIB.QGInitiatorExport

TD_SYSFNLIB.QGInitiatorlmport

TD_SYSFNLIB.QGRemoteExport

TD_SYSFNLIB.QGRemotelmport

B.

A filter that includes the following functions:

TD_SYSFNLIB.QGInitiatorExport

TD_SYSFNLIB.QGInitiatorlmport

TD_SYSFNLIB.QGRemoteExport

TD_SYSFNLIB.QGRemotelmport

C.

An exception that excludes the following Foreign Servers:

TD_SYSFNLIB.QGInitiatorExport

TD_SYSFNLIB.QGInitiatorlmport

TD_SYSFNLIB.QGRemoteExport

TD_SYSFNLIB.QGRemotelmport

D.

A filter that excludes the following functions:

TD_SYSFNLIB.QGInitiatorExport

TD_SYSFNLIB.QGInitiatorlmport

TD_SYSFNLIB.QGRemoteExport

TD_SYSFNLIB.QGRemotelmport

Answer:

A

Explanation:

In this case, using an exception with the Foreign Servers related to QueryGrid functions will prevent

queries involving those foreign servers from running. This is a typical method to block or reject

specific workloads during critical times, such as a batch processing window.

Exceptions in workload management are used to define specific conditions under which queries or

workloads should be rejected or managed differently. By including these QueryGrid-related functions

in an exception, the administrator ensures that any QueryGrid queries involving the listed foreign

servers will be rejected during the critical batch state.

Options B and D, which mention filters, are not appropriate for rejecting queries. Filters are used for

monitoring or routing purposes, not for outright rejection of workloads.

Option C, which suggests excluding the foreign servers in an exception, would not achieve the goal,

as this would mean those queries are not affected by the exception. The goal here is to include these

functions in an exception to actively reject them.

Question 3

A customer is complaining that the creation of a large table in the lab environment is getting stuck in

the merge step. The customer is using the following command to create the table:

CREATE TABLE ... AS (SELECT * ...) WITH DATA

Which recommendation will help the merge step?

- A. A column that provides good distribution should be specified as PRIMARY INDEX.

- B. The MERGEBLOCKRATIO should be specified for the table to improve the data block merge operation.

- C. The table should be created with "CREATE TABLE ... AS (SELECT *...) WITH NO DATA", and afterwards, "INSERT ... SELECT *" should be used to fill the table.

- D. Users of the lab may have lower priority than other workloads, so the creation of the table should be moved to off-peak hours.

Answer:

C

Explanation:

Merge step issues often occur when a large amount of data is being processed during the table

creation, especially if the system is trying to simultaneously create the table and insert data.

By using "WITH NO DATA", the table structure is created first, without the actual data being inserted

during the table creation process. The *"INSERT ... SELECT " command can then be used afterwards

to populate the table in a more controlled way, reducing the load on the system during the creation

phase and potentially improving the efficiency of the merge step.

Specifying a good distribution for the primary index can help overall performance, but it doesn't

directly address the issue with the merge step in this scenario.

Specifying the MERGEBLOCKRATIO isn't typically a solution for this specific problem; the merge block

ratio is more about the optimization of data block merges rather than the creation of tables.

Moving the creation to off-peak hours may help if the environment is busy, but it doesn't directly

address the core issue of the merge step getting stuck.

Question 4

Which benefit is achieved by creating profiles?

- A. Reducing the growth of the DBC.AccessRights table

- B. Managing user privileges on database objects

- C. Assigning permanent space for groups of users with similar needs

- D. Defining spool space for groups of users with similar needs

Answer:

D

Explanation:

Profiles in Teradata are used to manage and enforce resource limits for groups of users. This includes

defining spool space, temporary space, and permanent space for users who share similar needs.

Spool space is important for query processing, and managing it by profile helps prevent individual

users from consuming excessive resources and ensures better overall system performance.

Reducing the growth of the DBC.AccessRights table is not directly related to profiles. Profiles do not

reduce access rights or the growth of this table, which tracks individual user privileges.

Managing user privileges on database objects is handled through roles and grants, not profiles.

Profiles focus on resource management, not privileges.

Assigning permanent space for groups of users is partially true since profiles can manage some space

attributes, but the main focus of profiles is on managing spool space rather than permanent space.

Question 5

What is a use case for Data Mover?

- A. Archiving data to a Hadoop system

- B. Copying data between Vantage systems for active-active replication

- C. Replicating data to a disaster recovery system

- D. Copying data between Hadoop systems

Answer:

C

Explanation:

Teradata Data Mover is primarily designed to copy and replicate data between Teradata or Vantage

systems. One of its common use cases is to move data to a disaster recovery system, ensuring that

data is available in case of system failure or disaster, and making it a valuable tool for maintaining

high availability and business continuity.

Archiving data to Hadoop and Copying data between Hadoop systems are more relevant to other

tools such as Teradata QueryGrid, which integrates Vantage with Hadoop and other external systems.

Copying data between Vantage systems for active-active replication might involve Data Mover, but

active-active replication typically involves more sophisticated real-time synchronization technologies

like Teradata's Unity or QueryGrid.

Question 6

An Administrator manages a Vantage system that is continually updated. The system is critical to the

business and must be available as much as possible. The Administrator decides to use a backup

strategy that will allow changes to tables while a backup is in progress.

Which backup strategy should be used?

- A. Offline

- B. Skip statistics

- C. Online

- D. Dictionary Only

Answer:

C

Explanation:

An Online backup strategy allows the database to remain accessible and operational while the

backup is taking place. This means that users can continue to modify data, and the system can

remain available without requiring downtime. It is a common approach for mission-critical systems

that need to maximize uptime.

Offline would require the system or certain tables to be unavailable during the backup process, which

is not suitable for a system that needs to remain available.

Skip statistics refers to skipping the backup of statistics on database objects and does not relate to

whether the system can be updated during the backup.

Dictionary Only involves backing up only the system catalog or dictionary data, not the actual table

data, and would not fulfill the requirement of a comprehensive backup while allowing updates.

Question 7

A client is having many problems with a poorly written SQL. They have product joins running against

large tables, and this is causing severe performance issues. The Administrator needs to help the

client avoid the harm to the system that is being caused by running these queries.

Which action should be taken by the Administrator to assist this client?

- A. Use the Query Spotlight Viewpoint portlet to deprioritize the queries.

- B. Use the Flex Throttle option.

- C. Use a TASM system filter.

- D. Use the Query Log Viewpoint portlet to identity and abort the harmful queries.

Answer:

C

Explanation:

Teradata Active System Management (TASM) allows the system administrator to control and manage

query workload in real-time. A TASM system filter can be used to prevent or deprioritize certain

types of queries, such as product joins or poorly written queries, that are consuming excessive

resources. This approach ensures that problematic queries do not harm the overall system

performance.

Query Spotlight Viewpoint portlet focuses on identifying and managing individual queries but does

not provide the same level of proactive filtering and control as TASM system filters.

Flex Throttle is useful for controlling the concurrency of queries, but it doesn't specifically address

preventing or managing product joins in a way that would directly resolve the issue with poorly

written SQL.

Query Log Viewpoint portlet would help in identifying and aborting harmful queries, but it's a

reactive approach rather than a proactive solution like using TASM system filters.

Question 8

The Administrator has dropped a profile which was associated with a group of users.

What will happen when one of the users from the group logs on to the database?

- A. The default profile setting for the user will be reset to NULL.

- B. Password attributes defined at the system level in DBCSecurityDefaultsV will apply

- C. The system will show a warning message.

- D. SPOOL and DEFAULT DATABASE specifications for the dropped profile will still apply to the user.

Answer:

B

Explanation:

When a profile is dropped, the users who were associated with that profile will no longer have the

specific resource and configuration settings defined by that profile. Instead, the system-level default

settings, such as those specified in DBC.SecurityDefaultsV, will apply to the users. This includes

password attributes and other system-wide settings.

Option A (The default profile setting for the user will be reset to NULL) is not accurate, as the system

will revert to the default settings rather than leaving values unset.

Option C (The system will show a warning message) does not typically occur when a profile is

dropped. The system will manage the transition without user-facing warnings at login.

Option D (SPOOL and DEFAULT DATABASE specifications for the dropped profile will still apply to the

user) is incorrect because once the profile is dropped, its settings no longer apply to the users who

were associated with it.

Thus, the correct outcome is that system-level attributes defined in DBC.SecurityDefaultsV will

govern the users' settings moving forward.

Question 9

An Administrator has been asked to improve the response time of the workloads in the tactical tier.

Workloads are in all tiers of TASM. They are at CPU and I/O capacity, and they have AWT reserved for

tactical. The Administrator begins by analyzing the data and the workload prioritization.

Which action should the Administrator take?

- A. Increase the relative weight of the tactical tier.

- B. Disable the expedite option of the workloads in the SLG Tier level 1.

- C. Increase the number of AWTs.

- D. Adjust the limits of awt concurrency in the tactical tier using DBS control.

Answer:

A

Explanation:

In a TASM (Teradata Active System Management) environment, the relative weight of different tiers

(such as tactical, SLG, or background) determines the amount of CPU and I/O resources allocated to

each tier. Increasing the relative weight of the tactical tier would prioritize these workloads, giving

them more resources relative to other tiers and improving their response time.

Option B (Disable the expedite option of the workloads in the SLG Tier level 1) might alleviate some

resource pressure from SLG Tier 1 workloads, but it doesn't directly improve the prioritization or

resource allocation for tactical workloads.

Option C (Increase the number of AWTs) is not applicable because the system already has reserved

AWTs for tactical workloads, and AWT (AMP Worker Task) availability is likely not the bottleneck

here. Simply increasing the number of AWTs without addressing the core CPU and I/O resource

allocation will not improve tactical workload response.

Option D (Adjust the limits of AWT concurrency in the tactical tier using DBS control) might provide

marginal improvements but won't have a significant impact on overall resource prioritization. It

focuses more on concurrency management than directly improving workload performance through

resource allocation.

Question 10

At a large car manufacturer, huge volumes of diagnostic data for cars are collected in the following

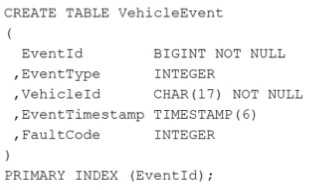

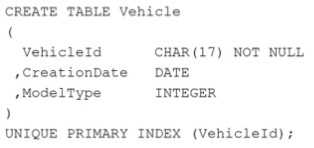

table:

The master data for each car is stored in the following table:

Many reports require data from both tables by joining via column VehicleId.

A very frequently performed query on the system returns the number of events by FaultCode and

ModelType. This query consumes many CPU and I/O resources each day.

Which action should the Administrator take to improve the runtime and resource consumption for

this query?

- A. Use an aggregate join index with columns FaultCode, ModelType, as well as an appropriate aggregate function.

- B. Use a sparse join index with columns FaultCode and ModelType, as well as an appropriate filter function.

- C. Use a NUSI on VehicleEvent.FaultCode and a NUSI on Vehicle.ModelType

- D. Use a single table join index on VehicleEvent hashed by FaultCode and another single table join index on Vehicle hashed by ModelType.

Answer:

A

Explanation:

To improve the runtime and resource consumption for a query that returns the number of events by

FaultCode and ModelType from the two tables VehicleEvent and Vehicle, the most appropriate action

would be:

A . Use an aggregate join index with columns FaultCode, ModelType, as well as an appropriate

aggregate function.

Aggregate Join Index: This type of join index will pre-join the tables VehicleEvent and Vehicle on

VehicleId and store the results of frequently queried aggregations (in this case, counts by FaultCode

and ModelType). It would significantly reduce the need to perform full joins and aggregations at

query time, saving both CPU and I/O resources.

Option B: A sparse join index is useful for selective filtering but does not offer aggregation. Since the

query involves counting (aggregation), the aggregate join index is more suitable.

Option C: Creating Non-Unique Secondary Indexes (NUSIs) on FaultCode and ModelType would help

speed up searches for those columns, but it won't help with the pre-aggregation or frequent joins

that are consuming the majority of the resources.

Option D: Creating separate single table join indexes for FaultCode and ModelType on different

tables won't improve the performance of the aggregation and join-heavy query, because the

problem stems from the frequent joins and aggregations, not just individual table access.

Question 11

An Administrator has been given a task to generate a list of users who have not changed their

password in the last 90 days.

Which DBC view should be used to generate this list?

- A. DBC.LOGONOFFV

- B. DBC.USERSV

- C. DBC.SECURITYDEFAULTSV

- D. DBC.ACCESSLOGV

Answer:

B

Explanation:

DBC.USERSV contains information about users, including the passwordlastmodified column, which

records the date and time the user last changed their password. By querying this view, the

Administrator can identify users who have not updated their password within the specified time

frame (in this case, 90 days).

Option A (DBC.LOGONOFFV) logs user logon and logoff events, but it does not track password

changes.

Option C (DBC.SECURITYDEFAULTSV) contains system-wide security defaults, but it does not track

individual user password activity.

Option D (DBC.ACCESSLOGV) logs access control events, like who accessed which database objects,

but it doesn't track password changes either.

Therefore, DBC.USERSV is the appropriate view to use for this task.

Question 12

A client has a healthy system but often sees delays in some queries because of workload concurrency

limits. These limits have been carefully chosen, so the client needs a solution that will not modify

them.

What should the Administrator use to assist this client?

- A. Use a system throttle.

- B. Use Query Group Viewpoint gortjet to change the throttle limit temporally.

- C. Use Flex Throttle option. Use Query Monitor Viewpoint portlet to change query workloads.

Answer:

C

Explanation:

The Flex Throttle option allows the system to temporarily adjust workload concurrency limits based

on system conditions. This provides more flexibility when handling spikes in query concurrency,

without permanently modifying the established workload limits. Flex Throttle is ideal for handling

temporary peaks in activity, helping to smooth out delays while keeping the core concurrency limits

intact.

Option A (Use a system throttle) would enforce strict concurrency limits but doesn't provide the

flexibility needed in this scenario, where the client is trying to avoid modifying existing limits.

Option B (Use Query Group Viewpoint portlet to change the throttle limit temporarily) suggests

manually adjusting the throttle limit, which is not desirable in this case as the limits have been

carefully chosen.

Option D (Use Query Monitor Viewpoint portlet to change query workloads) would involve changing

the way queries are handled or prioritized but does not address the need to keep concurrency limits

unchanged while still dealing with temporary delays.

Thus, Flex Throttle (Option C) provides the best solution to assist the client without altering the

concurrency limits permanently.

Question 13

Given a user creation request on a 10-AMP system:

CREATE USER hr_user AS PERM = 100e9 SKEW = 10 PERCENT,

SPOOL = 100e9 SKEW = 20 PERCENT;

How does the SKEW factor affect the user's Perm space, assuming the total space consumed is under

the specified perm space limit?

- A. The per AMP limit of any AMP can reach 20 GB.

- B. The per AMP limit of 100 GB can be breached up to 10 percent.

- C. The per AMP limit of 10 GB can be breached to any percent.

- D. The per AMP limit of any AMP can reach 11 GET

Answer:

D

Explanation:

In the given CREATE USER statement, the SKEW = 10 PERCENT parameter applies to Perm space and

allows some AMPs (Access Module Processors) to use up to 10% more space than the average

allocation across the AMPs.

The user is allocated 100 GB of Perm space across a 10-AMP system, meaning the average space per

AMP is 10 GB.

With a 10% skew allowed, this means that an AMP can use up to 10% more than the average

allocation, which is 10 GB + 1 GB = 11 GB.

Question 14

An Administrator has been tasked with analyzing previous growth and usage patterns by utilities

such as Multiload and FastLoad. The aim is to project the likely resource requirements of the existing

loads in the next three to six months.

In the last month, the Administrator team have started using AccountString expansion on all user

accounts and have started maintenance jobs to housekeep system data older than seven years. Two

years ago, the following command was issued on the system:

BEGIN QUERY LOGGING WITH ALL ON ALL;

No other logging commands have been issued.

Which view contains the utility usage data for the prior months?

- A. AMPUsageV

- B. LogOnOffV

- C. QryLogUtilityV

- D. QryLogV

Answer:

C

Explanation:

QryLogUtilityV is a specific DBQL (Database Query Logging) view that provides information about

utility usage (such as Multiload and FastLoad) on the system. Since the BEGIN QUERY LOGGING WITH

ALL ON ALL command was issued two years ago, the DBQL (Database Query Logging) has been

tracking various events, including utility usage, which is stored in QryLogUtilityV.

Option A (AMPUsageV) contains AMP (Access Module Processor) level statistics and usage data but

not detailed information about utility jobs.

Option B (LogOnOffV) tracks user logon and logoff activities but does not provide information about

utility usage.

Option D (QryLogV) logs general query execution data but is not specifically focused on utility usage.

Question 15

A middle-tier application server logs on to the database as TrustedUser and submits requests on

behalf of application end users. The server is shared by Finance and Human Resources groups and

uses ProxyUser query band to identify end users to the database. Each group needs access to its own

sensitive data, so the Administrator has created two separate roles with the appropriate

permissions.

What is the best way to control access to each group's sensitive data?

- A. Define the roles as external and use the ProxyRole query band to specify one role.

- B. Grant both roles to TrustedUser, and add the ProxyRole query band to specify one role.

- C. Specify the appropriate role for each end user in a grant connect through statement.

- D. Include both roles in the grant connect through statement, and use ProxyRole in the query band to select the appropriate role.

Answer:

D

Explanation:

The GRANT CONNECT THROUGH statement allows the TrustedUser to act on behalf of multiple end

users while securely connecting to the database. By granting both roles (Finance and Human

Resources) in this statement, you allow the ProxyUser to switch between roles depending on the

query band's ProxyRole value.

Using the ProxyRole query band, the application can specify which role (Finance or Human

Resources) should be used for each specific request. This approach provides flexibility, as the

application can dynamically assign the appropriate role to the user based on the query context.

Option A (Defining roles as external and using ProxyRole) wouldn't fully address the need to manage

multiple roles dynamically for a shared server.

Option B (Granting both roles to TrustedUser) doesn't allow for flexible role switching on a per-

request basis without the use of GRANT CONNECT THROUGH and could lead to over-granting of

permissions.

Option C (Specifying a role for each end user in GRANT CONNECT THROUGH) isn't as flexible as

allowing both roles to be used and dynamically selected through the query band.

Thus, Option D is the most appropriate solution, as it provides both security and flexibility, enabling

the application to use the correct role based on the ProxyRole query band for each query submitted.