Splunk SPLK-3003 Practice Test

Splunk Core Certified Consultant Exam

Question 1

When monitoring and forwarding events collected from a file containing unstructured textual events, what is the difference in the Splunk2Splunk payload traffic sent between a universal forwarder (UF) and indexer compared to the Splunk2Splunk payload sent between a heavy forwarder (HF) and the indexer layer? (Assume that the file is being monitored locally on the forwarder.)

- A. The payload format sent from the UF versus the HF is exactly the same. The payload size is identical because they're both sending 64K chunks.

- B. The UF sends a stream of data containing one set of metadata fields to represent the entire stream, whereas the HF sends individual events, each with its own metadata fields attached, resulting in a larger payload.

- C. The UF will generally send the payload in the same format, but only when the sourcetype is specified in inputs.conf and EVENT_BREAKER_ENABLE is set to true.

- D. The HF sends a stream of 64K TCP chunks with one set of metadata fields attached to represent the entire stream, whereas the UF sends individual events, each with their own metadata fields attached.

Answer:

B
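The difference can be made concrete with a toy size model. This is an illustrative sketch only, not Splunk's real S2S wire format: it assumes a UF sends unparsed raw text with a single metadata set for the stream, while an HF sends parsed events that each carry their own copy of the metadata. The metadata values and event text are hypothetical.

```python
# Illustrative model only: this is NOT Splunk's real S2S wire format.
# The metadata values below are hypothetical.
METADATA = {
    "host": "web01",
    "source": "/var/log/app.log",
    "sourcetype": "app_log",
    "index": "main",
}


def metadata_size(meta: dict[str, str]) -> int:
    """Approximate metadata overhead as the length of its key=value pairs."""
    return sum(len(k) + len(v) + 1 for k, v in meta.items())


def uf_payload_size(events: list[str]) -> int:
    """UF-style: one unparsed stream of raw text plus a single metadata set."""
    raw_stream = "\n".join(events)
    return len(raw_stream) + metadata_size(METADATA)


def hf_payload_size(events: list[str]) -> int:
    """HF-style: parsed events, each carrying its own copy of the metadata."""
    return sum(len(event) + metadata_size(METADATA) for event in events)


events = [f"2024-01-01 12:00:{i:02d} app event {i}" for i in range(50)]
print("UF-style bytes:", uf_payload_size(events))
print("HF-style bytes:", hf_payload_size(events))
```

Because the per-event metadata is repeated once per event, the HF-style total grows with the event count while the UF-style overhead stays constant.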

Question 2

The universal forwarder (UF) should be used whenever possible, as it is smaller and more efficient. In which of the following scenarios would a heavy forwarder (HF) be a more appropriate choice?

- A. When a predictable version of Python is required.

- B. When filtering 10%–15% of incoming events.

- C. When monitoring a log file.

- D. When running a script.

Answer:

B

Reference:

https://www.splunk.com/en_us/blog/tips-and-tricks/universal-or-heavy-that-is-the-question.html
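Regex-based event filtering is a parsing-time operation, which a heavy forwarder can perform and a universal forwarder generally cannot (apart from input-level allow/deny lists on some input types). The sketch below models the effect of a filter in the spirit of a transforms.conf stanza that routes matching events to the nullQueue; the `level=DEBUG` pattern and the event text are hypothetical.

```python
import re

# Hypothetical filter rule, comparable in spirit to a transforms.conf stanza
# that sets DEST_KEY = queue and FORMAT = nullQueue for events matching REGEX.
DROP_PATTERN = re.compile(r"\blevel=DEBUG\b")


def filter_events(events: list[str]) -> tuple[list[str], list[str]]:
    """Split events into (kept, dropped); dropped events never reach the indexer."""
    kept: list[str] = []
    dropped: list[str] = []
    for event in events:
        (dropped if DROP_PATTERN.search(event) else kept).append(event)
    return kept, dropped


sample = ["level=INFO user login", "level=DEBUG cache miss", "level=ERROR disk full"]
kept, dropped = filter_events(sample)
print("kept:", kept)
print("dropped:", dropped)
```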

Question 3

A non-ES customer is concerned about data availability during a disaster recovery event. Which of the following Splunk Validated Architectures (SVAs) would be recommended for this use case?

- A. Topology Category Code: M4

- B. Topology Category Code: M14

- C. Topology Category Code: C13

- D. Topology Category Code: C3

Answer:

B

Reference:

https://www.splunk.com/pdfs/technical-briefs/splunk-validated-architectures.pdf

(21)

Question 4

Which statement is correct?

- A. In general, search commands that can be distributed to the search peers should occur as early as possible in a well-tuned search.

- B. As a streaming command, streamstats performs better than stats since stats is just a reporting command.

- C. When trying to reduce a search result to unique elements, the dedup command is the only way to achieve this.

- D. Formatting commands such as fieldformat should occur as early as possible in the search to take full advantage of the often larger number of search peers.

Answer:

A
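Distributable streaming commands (for example `where`, `eval`, or `rex`) can run on each search peer in parallel, so placing them early means less data is shipped to the search head. The toy model below is a sketch under that assumption: each inner list stands for the events held by one hypothetical peer, and the `status` field and `is_error` predicate are illustrative only.

```python
# Illustrative model only: each inner list stands for the events one search peer holds.
def run_search(peers, predicate, filter_on_peers):
    """Return (results, rows_sent_to_search_head) for a simple filtering search."""
    rows_sent = 0
    collected = []
    for peer_events in peers:
        if filter_on_peers:
            # Distributable streaming step: each peer filters its own events.
            rows = [e for e in peer_events if predicate(e)]
        else:
            # No early filter: every event is shipped to the search head.
            rows = list(peer_events)
        rows_sent += len(rows)
        collected.extend(rows)
    if not filter_on_peers:
        # Filter applied late, on the search head only.
        collected = [e for e in collected if predicate(e)]
    return collected, rows_sent


def is_error(event):
    return event["status"] >= 500


peers = [
    [{"status": 200}, {"status": 500}],
    [{"status": 404}, {"status": 503}, {"status": 200}],
]
early_results, early_sent = run_search(peers, is_error, filter_on_peers=True)
late_results, late_sent = run_search(peers, is_error, filter_on_peers=False)
print("rows sent with early filter:", early_sent)
print("rows sent with late filter:", late_sent)
```

Both orderings return the same results; only the volume moved from peers to the search head differs.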

Question 5

Which event processing pipeline contains the regex replacement processor that would be called

upon to run event masking routines on events as they are ingested?

- A. Merging pipeline

- B. Indexing pipeline

- C. Typing pipeline

- D. Parsing pipeline

Answer:

C
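Ingest-time masking (for example a props.conf `SEDCMD-<class>` rule) is carried out by the regex replacement processor. The Python sketch below only emulates the effect of such a sed-style substitution with `re.sub`; the rule name, the 16-digit card-number pattern, and the sample event are hypothetical assumptions.

```python
import re

# Hypothetical masking rule. In props.conf a comparable rule could be written as
#   SEDCMD-mask_cc = s/[0-9]{12}([0-9]{4})/XXXXXXXXXXXX\1/g
# The 16-digit card-number pattern is an assumption for illustration.
CC_PATTERN = re.compile(r"\b\d{12}(\d{4})\b")
MASK = "X" * 12


def mask_event(raw: str) -> str:
    """Mask the first 12 digits of each standalone 16-digit number, keeping the last 4."""
    return CC_PATTERN.sub(lambda m: MASK + m.group(1), raw)


print(mask_event("user=alice card=" + "4111" + "1" * 12 + " amount=42.00"))
```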

Question 6

What happens to the indexer cluster when the indexer Cluster Master (CM) runs out of disk space?

- A. A warm standby CM needs to be brought online as soon as possible before an indexer has an outage.

- B. The indexer cluster will continue to operate as long as no indexers fail.

- C. If the indexer cluster has site failover configured in the CM, the second cluster master will take over.

- D. The indexer cluster will continue to operate as long as a replacement CM is deployed within 24 hours.

Answer:

B

Question 7

In addition to the normal responsibilities of a search head cluster captain, which of the following is a default behavior?

- A. The captain is not a cluster member and does not perform normal search activities.

- B. The captain is a cluster member who performs normal search activities.

- C. The captain is not a cluster member but does perform normal search activities.

- D. The captain is a cluster member but does not perform normal search activities.

Answer:

B

Reference:

https://docs.splunk.com/Documentation/Splunk/8.1.0/DistSearch/SHCarchitecture#Search_head_cluster_captain

Question 8

In an environment that has Indexer Clustering, the Monitoring Console (MC) provides dashboards to monitor environment health. As the environment grows over time and new indexers are added, which steps would ensure the MC is aware of the additional indexers?

- A. No changes are necessary; the Monitoring Console has self-configuration capabilities.

- B. Using the MC setup UI, review and apply the changes.

- C. Remove and re-add the cluster master from the indexer clustering UI page to add new peers, then apply the changes under the MC setup UI.

- D. Each new indexer needs to be added using the distributed search UI, then settings must be saved under the MC setup UI.

Answer:

B

Question 9

A working search head cluster has been set up and used for 6 months with just the native/local Splunk user authentication method. To integrate the search heads with an external Active Directory server using LDAP, which of the following statements represents the most appropriate method to deploy the configuration to the servers?

- A. Configure the integration in a base configuration app located in the shcluster/apps directory on the search head deployer, then deploy the configuration to the search heads using the splunk apply shcluster-bundle command.

- B. Log on to each search head using a command-line utility and modify the authentication.conf and authorize.conf files in a base configuration app to configure the integration.

- C. Configure the LDAP integration on one Search Head using the Settings > Access Controls > Authentication Method and Settings > Access Controls > Roles Splunk UI menus. The configuration setting will replicate to the other nodes in the search head cluster eliminating the need to do this on the other search heads.

- D. On each search head, login and configure the LDAP integration using the Settings > Access Controls > Authentication Method and Settings > Access Controls > Roles Splunk UI menus.

Answer:

C

Reference:

https://docs.splunk.com/Documentation/Splunk/8.1.0/Security/ConfigureLDAPwithSplunkWeb

Question 10

A customer would like to remove the output_file capability from users with the default user role to stop them from filling up the disk on the search head with lookup files. What is the best way to remove this capability from users?

- A. Create a new role without the output_file capability that inherits the default user role and assign it to the users.

- B. Create a new role with the output_file capability that inherits the default user role and assign it to the users.

- C. Edit the default user role and remove the output_file capability.

- D. Clone the default user role, remove the output_file capability, and assign it to the users.

Answer:

C
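Role inheritance explains why simply layering a new role on top of `user` does not strip a capability: in Splunk, a role that imports another role (the `importRoles` setting in authorize.conf) receives all of that role's capabilities, and inherited capabilities cannot be removed in the inheriting role. The sketch below is a hypothetical model of that union behavior; the `Role` class and the role names are illustrative, not a Splunk API.

```python
from dataclasses import dataclass, field


@dataclass
class Role:
    """Hypothetical model of a Splunk role: its own capabilities plus imported roles."""
    name: str
    capabilities: set[str] = field(default_factory=set)
    imported_roles: list["Role"] = field(default_factory=list)

    def effective_capabilities(self) -> set[str]:
        # Inherited capabilities are unioned in; the child role cannot subtract them.
        caps = set(self.capabilities)
        for parent in self.imported_roles:
            caps |= parent.effective_capabilities()
        return caps


user = Role("user", {"search", "output_file"})
restricted = Role("restricted_user", set(), [user])
print(sorted(restricted.effective_capabilities()))
```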

Question 11

A customer has 30 indexers in an indexer cluster configuration and two search heads. They are writing an SPL search for a particular use case but are concerned that it takes too long to run, even over short time ranges.

How can the Search Job Inspector help validate and understand the customer's concerns?

- A. Search Job Inspector provides statistics showing how much time each indexer spent and how many events each indexer processed.

- B. Search Job Inspector provides a Search Health Check capability that provides an optimized SPL query the customer should try instead.

- C. Search Job Inspector cannot be used to help troubleshoot the slow performing search; customer should review index=_introspection instead.

- D. The customer is using the transaction SPL search command, which is known to be slow.

Answer:

A

Question 12

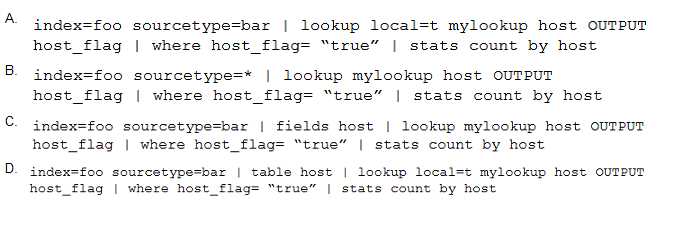

Which of the following is the most efficient search?

- A. Option A

- B. Option B

- C. Option C

- D. Option D

Answer:

C

Question 13

In a large cloud customer environment with many (>100) dynamically created endpoint systems, each with a UF already deployed, what is the best approach for associating these systems with an appropriate serverclass on the deployment server?

- A. Work with the cloud orchestration team to create a common host-naming convention for these systems so a simple pattern can be used in the serverclass.conf whitelist attribute.

- B. Create a CSV lookup file for each serverclass, manually keep track of the endpoints within this CSV file, and leverage the whitelist.from_pathname attribute in serverclass.conf.

- C. Work with the cloud orchestration team to dynamically insert an appropriate clientName setting into each endpoint's local/deploymentclient.conf, which can be matched by a whitelist in serverclass.conf.

- D. Using an installation bootstrap script, run a CLI command to assign a clientName setting and permit serverclass.conf whitelist simplification.

Answer:

C
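The `clientName` setting lives under the `[deployment-client]` stanza of deploymentclient.conf, and `whitelist.<n>` entries in serverclass.conf can match against it, which avoids depending on the often random hostnames of cloud instances. The sketch below approximates that matching with fnmatch-style `*` wildcards; Splunk's own matcher may differ in details, and the serverclass names, patterns, and clientName values are hypothetical.

```python
from fnmatch import fnmatchcase

# Hypothetical serverclass definitions. fnmatch-style "*" wildcards stand in for
# the whitelist.<n> patterns in serverclass.conf; Splunk's matcher may differ in details.
SERVERCLASSES = {
    "cloud_web": ["cloud-web-*"],
    "cloud_db": ["cloud-db-*"],
}


def serverclasses_for(client_name: str) -> list[str]:
    """Return the names of all serverclasses whose whitelist matches client_name."""
    return sorted(
        name
        for name, patterns in SERVERCLASSES.items()
        if any(fnmatchcase(client_name, pattern) for pattern in patterns)
    )


# clientName values an orchestration tool might write into deploymentclient.conf.
for client in ["cloud-web-7f3a", "cloud-db-0192", "i-0abc123"]:
    print(client, serverclasses_for(client))
```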

Question 14

The Splunk Validated Architectures (SVAs) document provides a series of approved Splunk topologies. Which statement accurately describes how it should be used by a customer?

- A. Customers should look at the category tables, pick the highest number their budget permits, then select that design topology as the chosen design.

- B. Customers should identify their requirements, provisionally choose an approved design that meets them, then consider design principles and best practices to come to an informed design decision.

- C. Using the guided requirements gathering in the SVAs document, choose a topology that suits requirements, and be sure not to deviate from the specified design.

- D. Choose an SVA topology code that includes Search Head and Indexer Clustering because it offers the highest level of resilience.

Answer:

B

Reference:

https://www.splunk.com/en_us/blog/tips-and-tricks/splunk-validated-architectures.html

Question 15

Which of the following server roles should be configured for a host which indexes its internal logs locally?

- A. Cluster master

- B. Indexer

- C. Monitoring Console (MC)

- D. Search head

Answer:

B

Reference:

https://community.splunk.com/t5/Deployment-Architecture/How-to-identify-Splunk-Instance-role-by-internal-logs/m-p/365555