Oracle 1Z0-1109-25 Practice Test

Oracle Cloud Infrastructure 2025 DevOps Professional

Question 1

As a cloud engineer, you are responsible for managing a Kubernetes cluster on the Oracle Cloud Infrastructure (OCI) platform for your organization. You are looking for ways to ensure reliable operations of Kubernetes at scale while minimizing the operational overhead of managing the worker node infrastructure.

Which cluster option is the best fit for your requirement?

- A. Using OCI OKE managed nodes with cluster autoscalers to eliminate worker node infrastructure management

- B. Using OCI OKE virtual nodes to eliminate worker node infrastructure management

- C. Using Kubernetes cluster add-ons to automate worker node management

- D. Creating and managing worker nodes using OCI compute instances

Answer:

B

Explanation:

Step 1: Understanding the Requirement

The goal is to ensure reliable operations of Kubernetes at scale while minimizing the operational overhead of managing worker node infrastructure. In this context, a solution is needed that abstracts away the complexity of managing, scaling, and maintaining worker nodes.

Step 2: Explanation of the Options

A. Using OCI OKE managed nodes with cluster autoscalers

While this option provides managed node pools and uses cluster autoscalers to adjust resources based on demand, it still requires some level of management for the underlying worker nodes (e.g., patching, upgrading, monitoring).

Operational overhead: Moderate.

B. Using OCI OKE virtual nodes

Virtual nodes in OCI OKE are a serverless option for running Kubernetes pods. They remove the need to manage underlying worker nodes entirely.

OCI provisions resources dynamically, allowing scaling based purely on pod demand.

There is no need for node management, patching, or infrastructure planning, which aligns directly with the requirement to minimize operational overhead.

Operational overhead: Minimal.

Best Fit for This Scenario: Since the requirement emphasizes minimizing operational overhead, this is the ideal solution.

C. Using Kubernetes cluster add-ons to automate worker node management

Kubernetes add-ons like Cluster Autoscaler or Node Problem Detector help in automating some aspects of worker node management. However, this still requires managing worker node infrastructure at the core level.

Operational overhead: Moderate to high.

D. Creating and managing worker nodes using OCI compute instances

This involves manually provisioning and managing compute instances for worker nodes, including scaling, patching, and troubleshooting.

Operational overhead: High.

Not Suitable for the Requirement: This option contradicts the goal of minimizing operational overhead.

Step 3: Why Virtual Nodes Are the Best Fit

Virtual Nodes in OCI OKE:

Virtual nodes provide serverless compute for Kubernetes pods, allowing users to run workloads without provisioning or managing worker node infrastructure.

Scaling: Pods are automatically scheduled, and the required infrastructure is dynamically provisioned behind the scenes.

Cost Efficiency: You only pay for the resources consumed by the running workloads.

Use Case Alignment: Eliminating the burden of worker node infrastructure management while ensuring Kubernetes reliability at scale.

Question 2

How do OCI DevOps Deployment Pipelines reduce risk and complexity of production applications?

- A. By reducing change-driven errors introduced by manual deployments

- B. By scaling builds with service-managed build runners

- C. By working with existing Git repositories and CI systems

- D. By eliminating downtime of production applications

Answer:

A

Explanation:

OCI DevOps Deployment Pipelines automate the process of deploying applications to production environments. By using automated, repeatable deployment processes, they help reduce the risk of change-driven errors, which are often introduced during manual deployments. This automation reduces human errors and ensures consistency across environments, thus minimizing complexity and risk in production.

Question 3

How does the Oracle Cloud Infrastructure Container Engine for Kubernetes (OKE) Cluster Autoscaler determine when to create new nodes for an OKE cluster?

- A. When the CPU or memory utilization crosses a configured threshold.

- B. When the resource requests from pods exceed a configured threshold.

- C. When the custom metrics from the services exceed a configured threshold.

- D. When the rate of requests to the application crosses a configured threshold.

Answer:

B

Explanation:

The OKE Cluster Autoscaler automatically adjusts the number of worker nodes in an OKE cluster based on the resource requests made by Kubernetes pods. When there are not enough resources available (e.g., CPU or memory) on existing nodes to accommodate pending pods, the Cluster Autoscaler will create new nodes to meet the resource demand.
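
The scale-up decision described above can be sketched in Python. This is a simplified model under stated assumptions, not the autoscaler's actual implementation: the `Node` and `Pod` types, the first-fit placement, and the function name `nodes_to_add` are all illustrative. What it shows is that the trigger is pending pod requests that fit nowhere, not measured CPU or memory utilization.

```python
from dataclasses import dataclass


@dataclass
class Node:
    cpu_free: float  # CPU cores not yet reserved by pod requests
    mem_free: float  # memory in GiB not yet reserved by pod requests


@dataclass
class Pod:
    cpu_request: float
    mem_request: float


def nodes_to_add(pending, nodes, node_cpu, node_mem):
    """Count the new nodes needed to place pods that fit on no existing node.

    Only pod *requests* are considered; actual utilization is never consulted.
    node_cpu and node_mem describe an empty node of the (hypothetical) pool shape.
    """
    # Copy the nodes so the caller's view of the cluster is not mutated.
    pool = [Node(n.cpu_free, n.mem_free) for n in nodes]
    existing = len(pool)
    for pod in pending:
        if pod.cpu_request > node_cpu or pod.mem_request > node_mem:
            continue  # too large even for an empty node, so adding nodes cannot help
        target = next(
            (n for n in pool
             if n.cpu_free >= pod.cpu_request and n.mem_free >= pod.mem_request),
            None,
        )
        if target is None:
            # No node has room for this pod: model adding one more node.
            target = Node(node_cpu, node_mem)
            pool.append(target)
        target.cpu_free -= pod.cpu_request
        target.mem_free -= pod.mem_request
    return len(pool) - existing
```

A lightly loaded cluster with no pending pods returns 0 here, which is also why utilization-based answers are wrong for this question.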

Question 4

A team wants to deploy artificial intelligence and machine learning workloads in their OCI Container Engine for Kubernetes (OKE) cluster. They prioritize strong isolation, cost-efficiency, and the ability to leverage serverless capabilities.

Which solution is best suited for their requirements?

- A. Virtual nodes in OKE

- B. Self-Managed Nodes in OKE

- C. Managed nodes in OKE

- D. Container Instances in OCI

Answer:

A

Explanation:

Virtual nodes in OKE provide a serverless experience for deploying Kubernetes workloads, which means you do not have to manage or scale the underlying infrastructure. This solution is particularly cost-efficient because you only pay for the resources used by the pods, and it provides strong isolation for workloads.

Virtual nodes are well suited for AI/ML workloads as they allow users to easily scale compute resources without being constrained by the limits of individual worker nodes.

Question 5

Which command creates the Docker registry secret required in the application manifests for OKE to pull images from Oracle Cloud Infrastructure Registry?

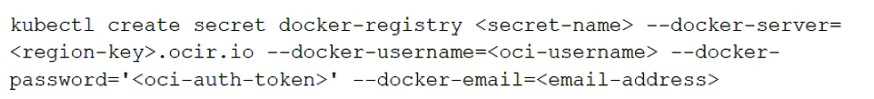

A)

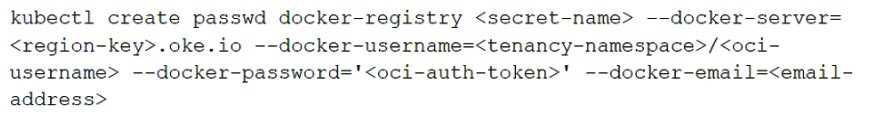

B)

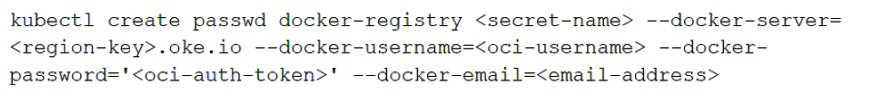

C)

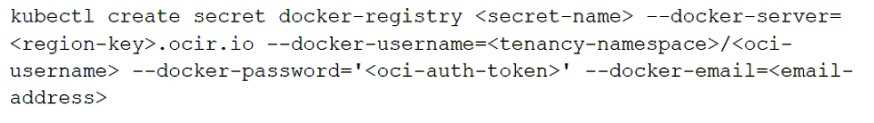

D)

- A. Option A

- B. Option B

- C. Option C

- D. Option D

Answer:

D

Explanation:

To create a Docker registry secret to pull images from the Oracle Cloud Infrastructure Registry (OCIR), you need to specify the correct parameters such as the region key, namespace, OCI username, and OCI authentication token.

The chosen command is correct because:

The kubectl create secret docker-registry command creates a Docker registry secret.

The --docker-server=<region-key>.ocir.io specifies the correct endpoint for OCIR.

The --docker-username=<tenancy-namespace>/<oci-username> provides both the tenancy namespace and the OCI username, which is the required format for authentication with OCIR.

The --docker-password='<oci-auth-token>' specifies the OCI auth token, which acts as a password for authentication.

The --docker-email=<email-address> is also included.

The other commands contain errors, such as a missing tenancy namespace in the username or an invalid keyword (passwd instead of secret).
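
To make the role of each parameter concrete, the following Python sketch builds the `.dockerconfigjson` payload that a secret of type `kubernetes.io/dockerconfigjson` holds. The function name `docker_config_json` and every value passed to it (tenancy namespace, user, token, email) are hypothetical placeholders, and the sketch only illustrates how the server and the `<tenancy-namespace>/<oci-username>` pair end up in the secret; it is not a replacement for the kubectl command.

```python
import base64
import json


def docker_config_json(region_key, tenancy_namespace, oci_username, auth_token, email):
    """Build the JSON document stored under the secret's .dockerconfigjson key."""
    server = f"{region_key}.ocir.io"
    # OCIR expects the tenancy namespace in front of the user name.
    username = f"{tenancy_namespace}/{oci_username}"
    # The "auth" field is base64("username:password"), as in a Docker config file.
    auth = base64.b64encode(f"{username}:{auth_token}".encode()).decode()
    return json.dumps(
        {
            "auths": {
                server: {
                    "username": username,
                    "password": auth_token,
                    "email": email,
                    "auth": auth,
                }
            }
        }
    )


# Placeholder values for illustration only.
config = json.loads(
    docker_config_json("phx", "mytenancy", "jdoe@example.com", "example-token", "jdoe@example.com")
)
print(list(config["auths"]))
```

Leaving out the tenancy namespace would produce a user name that OCIR does not recognize, which is the kind of error the incorrect options contain.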

Question 6

A DevOps engineer is asked to access an Oracle Cloud Infrastructure Container Engine for Kubernetes (OKE) cluster to deploy new applications and manage existing ones.

Which two statements are true? (Choose two.)

- A. To access the cluster using kubectl, you have to set up a Kubernetes configuration file for the cluster. The kubeconfig file by default is named config and stored in the $HOME/.kube directory.

- B. When a cluster’s Kubernetes API endpoint has a public IP address, you can access the cluster in Cloud Shell by setting up a kubeconfig file.

- C. Generating an API signing key pair is not required while setting up cluster access using local machine if the public key is not already uploaded in the console.

- D. The only available option when a cluster’s Kubernetes API endpoint has a public IP address is to control the cluster locally using kubectl and the Kubernetes Dashboard.

- E. To access the cluster using kubectl, you have to set up a Kubernetes manifest file for the cluster. The kubeconfig file by default is named config and stored in the $HOME/.manifest directory.

Answer:

A, B

Explanation:

To access an OKE cluster using kubectl, you need to set up a Kubernetes configuration file (kubeconfig). By default, the kubeconfig file is named config and stored in the $HOME/.kube directory.

When a cluster’s Kubernetes API endpoint has a public IP address, you can use Cloud Shell to access the cluster. Setting up a kubeconfig file is required to authenticate and manage the cluster.
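
As a rough illustration of the default location mentioned above, this Python sketch resolves which kubeconfig files would be consulted: the paths listed in the `KUBECONFIG` environment variable if it is set, otherwise `$HOME/.kube/config`. The function name `kubeconfig_paths` and its parameters are assumptions made for the example, and the sketch ignores the `--kubeconfig` command-line flag, which takes precedence in kubectl.

```python
import os
from pathlib import Path


def kubeconfig_paths(env=None, home=None):
    """Return the kubeconfig files to load, in order.

    env  -- mapping of environment variables (defaults to os.environ)
    home -- home directory (defaults to the current user's home)
    """
    env = os.environ if env is None else env
    home = Path.home() if home is None else Path(home)
    # KUBECONFIG may list several files separated by the OS path separator.
    raw = env.get("KUBECONFIG", "")
    paths = [Path(p) for p in raw.split(os.pathsep) if p]
    # With nothing configured, fall back to the default file named "config".
    return paths or [home / ".kube" / "config"]


print(kubeconfig_paths(env={}, home="/home/dev"))
```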

Question 7

What is the correct approach to upgrade an Oracle Container Engine for Kubernetes (OKE) Cluster to a newer version of Kubernetes?

- A. Upgrade the control plane, then upgrade the node pools.

- B. Initiate the automated upgrade process using the OCI Console, CLI, or API.

- C. Upgrade the node pools one at a time, then once all node pools are upgraded, upgrade the control plane.

- D. Initiate the control plane and node pool upgrades simultaneously.

Answer:

A

Explanation:

The correct approach to upgrade an Oracle Container Engine for Kubernetes (OKE) cluster is to upgrade the Kubernetes control plane first and then upgrade the node pools. The control plane must go first because worker nodes cannot run a newer Kubernetes version than the control plane that orchestrates and manages them.

After the control plane is upgraded, each node pool is upgraded to match the new Kubernetes version. This phased approach keeps the cluster in a stable state during the upgrade.
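
The ordering described above can be sketched as a small planning function in Python. The function names, the pool names, and the version strings are hypothetical; the sketch only encodes the rule that the control plane step comes before any node pool step and that components already at the target version are skipped.

```python
def parse_version(version):
    """Turn a string such as 'v1.30.1' into a comparable tuple (1, 30, 1)."""
    return tuple(int(part) for part in version.lstrip("v").split("."))


def upgrade_plan(control_plane, node_pools, target):
    """Return ordered (component, version) steps: control plane first, then pools.

    node_pools maps a pool name to its current Kubernetes version.
    """
    wanted = parse_version(target)
    steps = []
    if parse_version(control_plane) < wanted:
        steps.append(("control plane", target))
    # Node pools follow, one at a time, and only if they are behind the target.
    for name, version in node_pools.items():
        if parse_version(version) < wanted:
            steps.append((name, target))
    return steps


for step in upgrade_plan("v1.29.1", {"pool-a": "v1.29.1", "pool-b": "v1.30.1"}, "v1.30.1"):
    print(step)
```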

Question 8

As a DevOps engineer at XYZ Corp, you have been assigned the task of setting up a new OKE (Oracle Kubernetes Engine) cluster to manage the organization’s Kubernetes applications hosted on Oracle Cloud Infrastructure (OCI). Your goal is to ensure a smooth and efficient process while preparing for the cluster creation.

Which of the following statements is false regarding the preparation process for setting up a new OKE cluster?

- A. Container Engine for Kubernetes cannot utilize existing network resources for the creation of the new cluster.

- B. Container Engine for Kubernetes automatically creates and configures new network resources for the new cluster.

- C. It is necessary to ensure sufficient quota on different resource types in your OCI tenancy for the cluster setup.

- D. Access to an Oracle Cloud Infrastructure tenancy is required to set up the new OKE cluster.

Answer:

A

Explanation:

This statement is false because Container Engine for Kubernetes (OKE) can utilize existing network resources such as Virtual Cloud Networks (VCNs), subnets, security lists, and route tables for the creation of a new cluster. You can either use pre-existing network resources or let OKE create new network resources automatically.

Question 9

How can you scale a deployment named nodejs-deployment to have two replicas?

- A. kubectl set replicas deployment nodejs-deployment --replicas=2

- B. kubectl resize deployment nodejs-deployment --replicas=2

- C. kubectl adjust deployment nodejs-deployment --replicas=2

- D. kubectl scale deployment nodejs-deployment --replicas=2

Answer:

D

Explanation:

The kubectl scale command is used to scale the number of replicas in a deployment. By specifying the --replicas flag, you define the desired number of replicas for the deployment.

kubectl set replicas is not the correct syntax for scaling a deployment.

kubectl resize is not a valid command for scaling a deployment.

kubectl adjust is also not a valid Kubernetes command.
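
For teams that drive kubectl from scripts, the correct invocation can be assembled as an argument list, as in the Python sketch below. The helper name `scale_command` and its optional `namespace` parameter are assumptions for illustration; the sketch only builds and prints the command and does not run it against a cluster.

```python
import shlex


def scale_command(deployment, replicas, namespace=None):
    """Build the argument list for scaling a deployment with kubectl."""
    if replicas < 0:
        raise ValueError("replicas must not be negative")
    args = ["kubectl", "scale", "deployment", deployment, f"--replicas={replicas}"]
    if namespace:
        args += ["--namespace", namespace]
    return args


# Print the command in a form that can be pasted into a shell.
print(shlex.join(scale_command("nodejs-deployment", 2)))
```

The resulting list can be handed to `subprocess.run` on a machine where kubectl and a kubeconfig are available.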

Question 10

As a DevOps engineer working on managing clusters on the OCI platform for your organization, which statement is true about managing cluster add-ons in OCI OKE Cluster?

- A. When creating a new cluster, essential cluster add-ons cannot be disabled.

- B. When enabling a cluster add-on, you cannot configure the add-on by specifying one or more key/value pairs to pass as arguments to the cluster add-on.

- C. When creating a new cluster, essential cluster add-ons are set to manually update.

- D. When you disable a cluster add-on using the console, the add-on is completely removed from the cluster.

Answer:

A

Explanation:

Essential cluster add-ons are required for the basic functioning of the Kubernetes cluster and cannot be disabled during cluster creation. These add-ons provide necessary features such as CoreDNS, networking, and other critical functionalities for the cluster's operation.

Question 11

The Kubernetes Master node serves as the central control plane for managing the cluster’s resources and orchestrating workload deployment.

What are the primary responsibilities of the Kubernetes Master node?

- A. The Master node is primarily tasked with executing application workloads and ensuring their availability within the cluster.

- B. The Master node oversees the scheduling and allocation of cluster resources, ensuring optimal utilization across nodes.

- C. The Master node monitors network traffic within the cluster, ensuring secure communication and efficient data transfer between nodes.

- D. The Master node serves as a repository for storing container images, facilitating rapid deployment and scaling of applications.

Answer:

B

Explanation:

The Kubernetes Master node is the central control plane responsible for managing the cluster’s resources and orchestrating workload deployment. Its primary responsibilities include:

Scheduling: Deciding which workloads (pods) should run on which worker nodes, based on resource availability and scheduling constraints.

Resource Allocation: Allocating resources to workloads to ensure optimal utilization across nodes.

Cluster Management: Maintaining the desired state of applications, managing cluster events, and ensuring that all the components of the cluster are functioning properly.
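
The scheduling responsibility can be illustrated with a heavily simplified filter-and-score step in Python. The function name `pick_node`, the node names, and the scoring rule (prefer the node with the most CPU left after placement) are assumptions chosen for the example; the real Kubernetes scheduler applies many more filters and scoring rules.

```python
def pick_node(pod_cpu, pod_mem, nodes):
    """Choose a node for a pod, or return None if no node can host it.

    nodes maps a node name to a (cpu_free, mem_free) tuple.
    """
    # Filter: keep only nodes with enough free CPU and memory for the pod.
    feasible = {
        name: free
        for name, free in nodes.items()
        if free[0] >= pod_cpu and free[1] >= pod_mem
    }
    if not feasible:
        return None  # the pod stays pending
    # Score: prefer the node that keeps the most CPU free after placement.
    return max(feasible, key=lambda name: feasible[name][0] - pod_cpu)


cluster = {"node-a": (1.0, 2.0), "node-b": (4.0, 8.0), "node-c": (0.5, 16.0)}
print(pick_node(1.0, 1.0, cluster))
```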

Question 12

As a DevOps Engineer, you are tasked with explaining the key concepts of Terraform to a new team member. You want to ensure they understand the fundamental concepts of Terraform.

Which of the following best describes the purpose of Terraform variables?

- A. Terraform variables are used to manage the life cycle of Terraform resources.

- B. Terraform variables are used to define input values for Terraform configurations, allowing for customization and reuse of infrastructure code.

- C. Terraform variables are used to output the final state of the infrastructure after deployment.

- D. Terraform variables are used to define the structure and organization of Terraform configuration files.

Answer:

B

Explanation:

Terraform variables are used to define input values for Terraform configurations. They allow users to customize infrastructure deployments by providing different values without modifying the configuration files themselves. Variables help in creating reusable infrastructure code, making it easy to maintain and adjust the infrastructure setup according to different environments or needs.

Question 13

As a DevOps engineer at XYZ Corp, you are responsible for ensuring the smooth operation of high-traffic web applications hosted on Oracle Cloud Infrastructure (OCI). The web applications run on multiple OCI resources, including virtual machines, load balancers, and databases. Recently, users have reported failures while accessing one of the OCI-based web applications, and you suspect HTTP 5XX errors on the load balancer. You need to quickly identify and address this issue.

Which of the following statements can assist you in quickly identifying and monitoring the HTTP 5XX error rate on the load balancer and setting up notifications?

- A. Use Custom Metrics of the Monitoring service to collect HTTP 5XX error rates from the load balancer and set up Service Connectors with third-party services such as PagerDuty or Slack.

- B. Use Metrics and Alarms of the Monitoring service with Container Engine for Kubernetes (OKE) to monitor HTTP 5XX errors on Kubernetes resources and correlate them with other OCI resources.

- C. Use Event Rules to detect HTTP 5XX errors on the load balancer and trigger automated actions using OCI Functions or API Gateway.

- D. Use Metrics and Alarms of the Monitoring service to monitor the HTTP 5XX error rate on the load balancer and set up notifications with OCI Notifications.

Answer:

D

Explanation:

The Monitoring service in OCI can be used to track metrics for various OCI resources, including load balancers. You can monitor specific metrics, such as HTTP 5XX error rates, to identify issues.

By using Alarms, you can set up thresholds for the HTTP 5XX error rate and receive notifications when the threshold is breached. The notifications can be configured through OCI Notifications, which allows integration with email, PagerDuty, Slack, and other channels.
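
The threshold check behind such an alarm can be sketched in Python. This models only the evaluation logic under assumed inputs (per-interval request and 5XX counts); the function names, the 5% threshold, and the "consecutive intervals" rule are illustrative and do not represent the OCI Monitoring API or its query language.

```python
def error_rate(total_requests, responses_5xx):
    """Fraction of requests in one interval that returned HTTP 5XX."""
    if total_requests == 0:
        return 0.0  # no traffic in the interval, so nothing failed
    return responses_5xx / total_requests


def alarm_firing(datapoints, threshold, min_breaches):
    """Return True when the last min_breaches intervals all exceed the threshold.

    datapoints is a list of (total_requests, responses_5xx), oldest first.
    """
    if min_breaches < 1:
        raise ValueError("min_breaches must be at least 1")
    recent = datapoints[-min_breaches:]
    return len(recent) == min_breaches and all(
        error_rate(total, errors) > threshold for total, errors in recent
    )


# Three one-minute intervals; the last two exceed a 5% error rate.
print(alarm_firing([(100, 1), (100, 10), (100, 12)], threshold=0.05, min_breaches=2))
```

Requiring several consecutive breaches is a common way to avoid notifying on a single noisy interval.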

Question 14

Your team is responsible for deploying a new version of an application that is being used by your company’s finance department. The application is critical to the department’s operations, and any downtime could have serious consequences.

What is the recommended approach in OCI for creating environments for this scenario?

- A. Deploy the application to two separate OCI tenancies to ensure complete isolation between environments.

- B. Use a single Kubernetes cluster with two node pools, one for the blue-green environment and one for the canary environment.

- C. Configure two OKE clusters, selecting the blue-green traffic shift strategy using a load balancer.

- D. Use a single OCI region and create two separate Virtual Cloud Networks (VCNs), one for the blue environment and one for the green environment.

Answer:

C

Explanation:

For critical applications, such as the one used by the finance department, a blue-green deployment strategy is recommended to ensure minimal or zero downtime during upgrades. The blue-green strategy involves running two separate environments: blue (current version) and green (new version).
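
The mechanics of a blue-green switch can be sketched in Python as follows. The class name `BlueGreenRouter` and its methods are hypothetical and stand in for whatever load balancer or ingress performs the traffic shift; the sketch shows only the idea that the new version is deployed to the idle environment, traffic moves after a health check passes, and the previous environment remains available for rollback.

```python
class BlueGreenRouter:
    """Track which of two environments receives production traffic."""

    def __init__(self, live_version):
        self.versions = {"blue": live_version, "green": None}
        self.active = "blue"

    @property
    def idle(self):
        return "green" if self.active == "blue" else "blue"

    def deploy(self, version):
        """Install a new version on the idle environment; traffic is untouched."""
        self.versions[self.idle] = version
        return self.idle

    def switch(self, healthy):
        """Shift traffic to the idle environment only if it passed health checks."""
        if healthy and self.versions[self.idle] is not None:
            self.active = self.idle
        return self.active

    def rollback(self):
        """Send traffic back to the environment that was live before the switch."""
        self.active = self.idle
        return self.active


router = BlueGreenRouter("v1")
router.deploy("v2")
print(router.switch(healthy=True), router.versions[router.active])
```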

Question 15

Your team is working on a project to deploy a microservices-based application on a cloud platform using Terraform. Each microservice has specific configurations and dependencies, and you want to ensure modularity, reusability, and consistency across deployments.

Which Terraform feature would you use to achieve these objectives efficiently?

- A. Terraform Providers

- B. Terraform Workspaces

- C. Terraform Variables

- D. Terraform Modules

Answer:

D

Explanation:

Terraform Modules are used to organize and group related configuration resources into reusable components. By using modules, you can achieve modularity, reusability, and consistency across different deployments, making it easier to manage complex infrastructure setups.

For a microservices-based application, where each microservice has specific configurations and dependencies, modules allow you to define the infrastructure for each microservice in a modular way. This helps to maintain clean, reusable code and ensures consistency across deployments.