MuleSoft mcpa-level-1 practice test

MuleSoft Certified Platform Architect - Level 1 Exam

Question 1

True or False: We should always make sure that the APIs being designed and developed are self-servable, even if this requires more man-days of effort and resources.

- A. FALSE

- B. TRUE

Answer:

B

Explanation:

Correct Answer: TRUE

*****************************************

>> As per the MuleSoft proposed IT operating model, designing APIs and making sure that they are discoverable and self-servable is VERY IMPORTANT and decides the success of an API and its application network.

Question 2

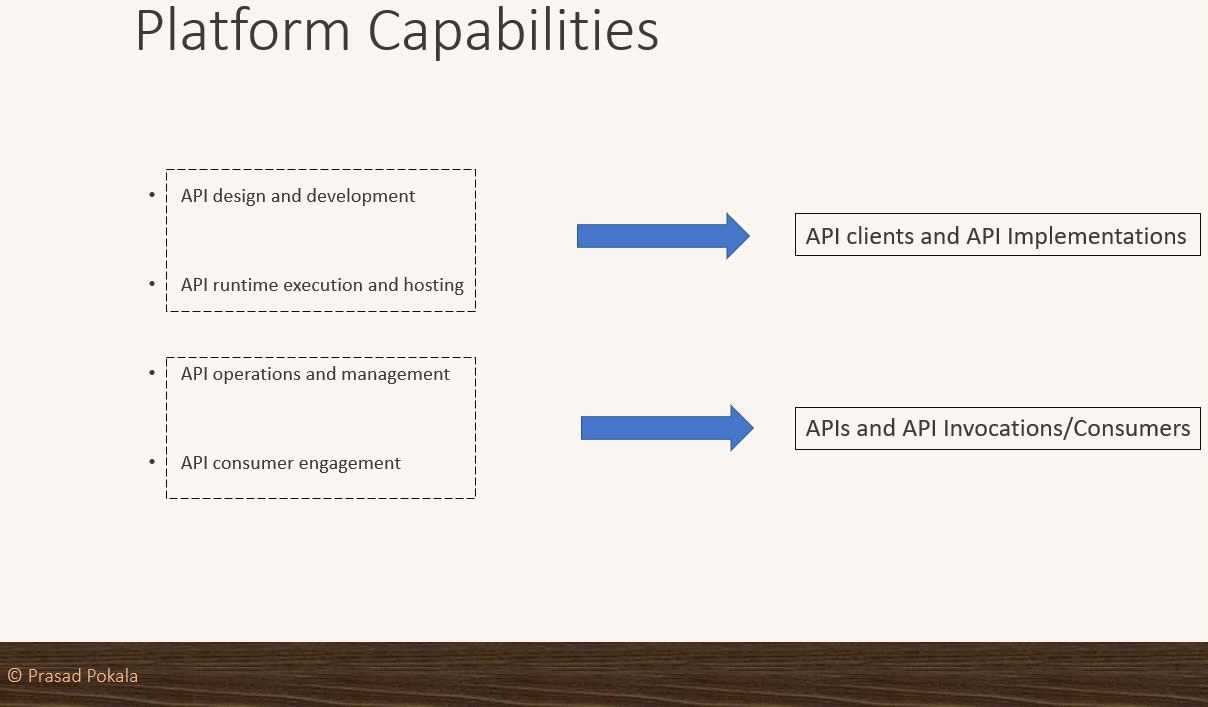

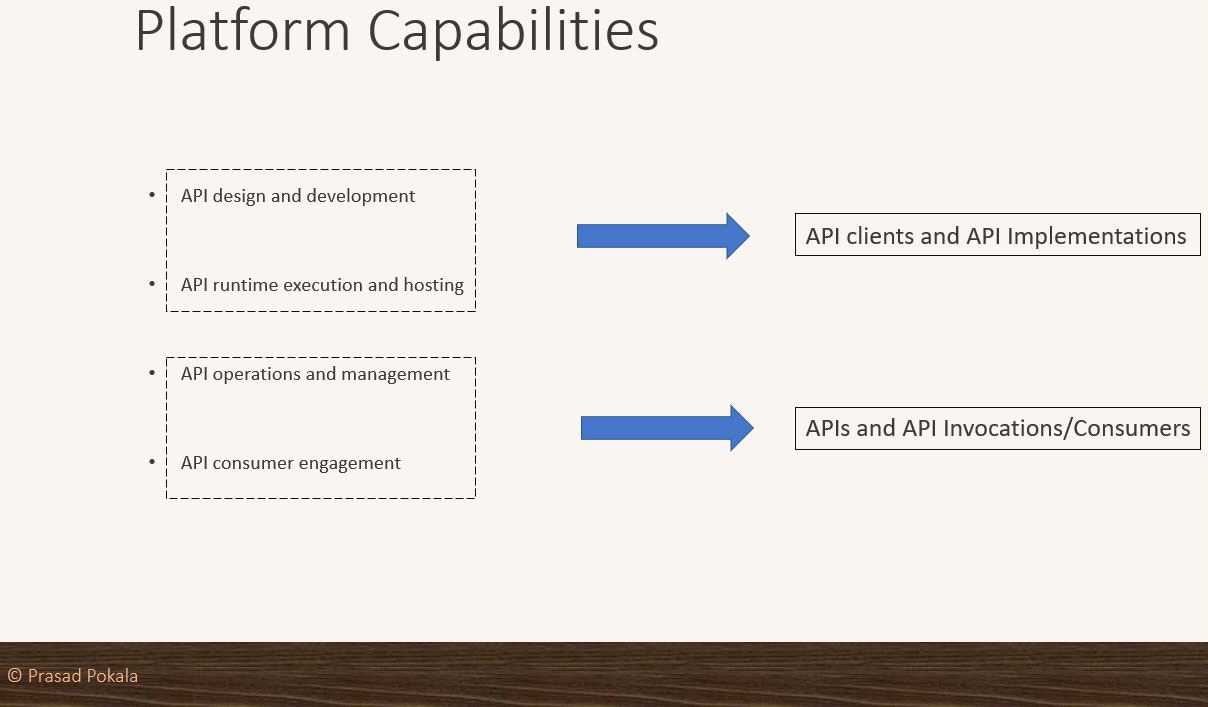

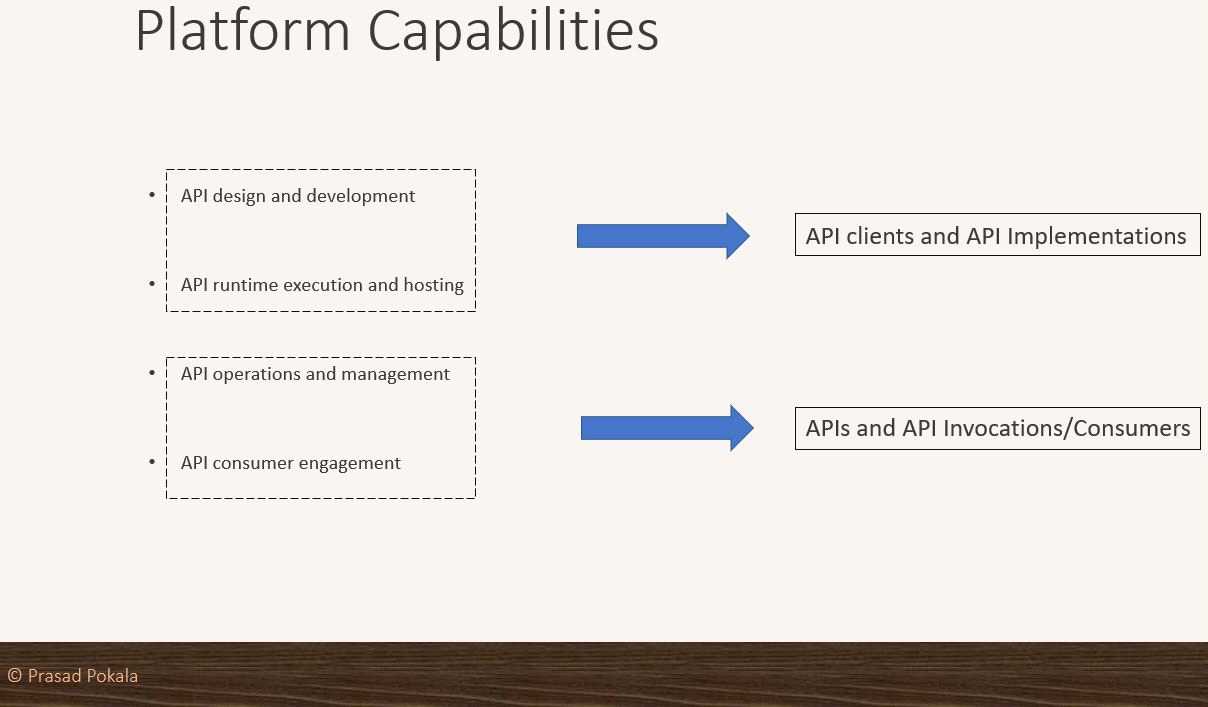

What are 4 important Platform Capabilities offered by Anypoint Platform?

- A. API Versioning, API Runtime Execution and Hosting, API Invocation, API Consumer Engagement

- B. API Design and Development, API Runtime Execution and Hosting, API Versioning, API Deprecation

- C. API Design and Development, API Runtime Execution and Hosting, API Operations and Management, API Consumer Engagement

- D. API Design and Development, API Deprecation, API Versioning, API Consumer Engagement

Answer:

C

Explanation:

Correct Answer: API Design and Development, API Runtime Execution and Hosting, API Operations and Management, API Consumer Engagement

*****************************************

>> API Design and Development - Anypoint Studio, Anypoint Design Center, Anypoint Connectors

>> API Runtime Execution and Hosting - Mule Runtimes, CloudHub, Runtime Services

>> API Operations and Management - Anypoint API Manager, Anypoint Exchange

>> API Consumer Engagement - API Contracts, Public Portals, Anypoint Exchange, API Notebooks

Question 3

Which Anypoint Platform capabilities listed below fall under the APIs and API Invocations/Consumers category? Select TWO.

- A. API Operations and Management

- B. API Runtime Execution and Hosting

- C. API Consumer Engagement

- D. API Design and Development

Answer:

A, C

Explanation:

Correct Answers: API Operations and Management and API Consumer Engagement

*****************************************

>> API Design and Development and API Runtime Execution and Hosting serve API implementations, while API Operations and Management and API Consumer Engagement serve APIs and their invocations/consumers.

>> API Design and Development - Anypoint Studio, Anypoint Design Center, Anypoint Connectors

>> API Runtime Execution and Hosting - Mule Runtimes, CloudHub, Runtime Services

>> API Operations and Management - Anypoint API Manager, Anypoint Exchange

>> API Consumer Engagement - API Contracts, Public Portals, Anypoint Exchange, API Notebooks

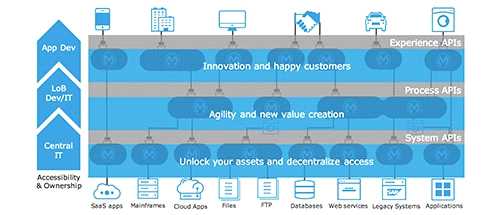

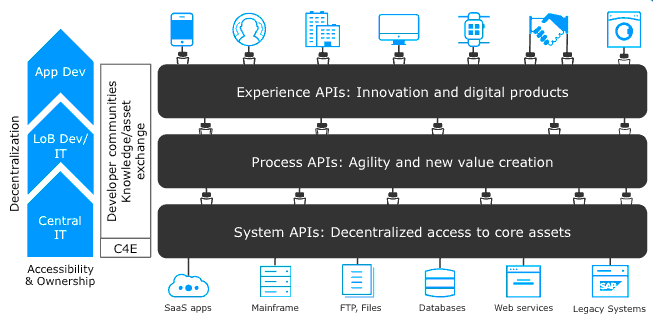

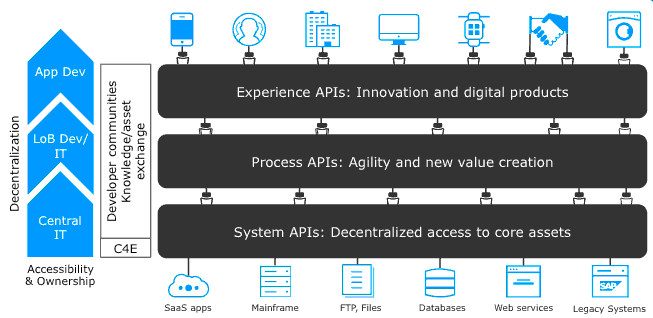

Question 4

Select the correct Owner-Layer combinations from the options below.

A.

1. App Developers own and focus on Experience Layer APIs

2. Central IT owns and focuses on Process Layer APIs

3. LOB IT owns and focuses on System Layer APIs

B.

1. Central IT owns and focuses on Experience Layer APIs

2. LOB IT owns and focuses on Process Layer APIs

3. App Developers own and focus on System Layer APIs

C.

1. App Developers own and focus on Experience Layer APIs

2. LOB IT owns and focuses on Process Layer APIs

3. Central IT owns and focuses on System Layer APIs

Answer:

C

Explanation:

Correct Answer:

1. App Developers own and focus on Experience Layer APIs

2. LOB IT owns and focuses on Process Layer APIs

3. Central IT owns and focuses on System Layer APIs

References:

https://blogs.mulesoft.com/biz/api/experience-api-ownership/

https://blogs.mulesoft.com/biz/api/process-api-ownership/

https://blogs.mulesoft.com/biz/api/system-api-ownership/

Question 5

Which layer in API-led connectivity focuses on unlocking key systems, legacy systems, data sources, etc., and exposing their functionality?

- A. Experience Layer

- B. Process Layer

- C. System Layer

Answer:

C

Explanation:

Correct Answer: System Layer

The APIs used in an API-led approach to connectivity fall into three categories:

System APIs: These usually access the core systems of record and provide a means of insulating the user from the complexity of, or any changes to, the underlying systems. Once built, they let many users access data without needing to learn the underlying systems, and they can be reused in multiple projects.

Process APIs: These APIs interact with and shape data within a single system or across systems (breaking down data silos), and they are created without dependence on either the source systems from which that data originates or the target channels through which that data is delivered.

Experience APIs: These are the means by which data can be reconfigured so that it is most easily consumed by its intended audience, all from a common data source, rather than by setting up separate point-to-point integrations for each channel. An Experience API is usually created with API-first design principles, where the API is designed with the specific user experience in mind.
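To make the three layers concrete, here is a minimal Python sketch of how a call flows from an experience API through a process API down to system APIs. The backend data, entity names, and function names are all hypothetical, and real Mule APIs would be separately deployed applications talking over HTTP, not in-process functions.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical raw records, standing in for what a legacy backend returns.
LEGACY_CUSTOMERS = {"C001": ("Ada", "Lovelace", "ada@example.com")}
LEGACY_ORDERS = {"C001": [("O-1", 120.0), ("O-2", 80.5)]}

@dataclass
class Customer:
    id: str
    first_name: str
    last_name: str
    email: str

@dataclass
class Order:
    id: str
    total: float

# System layer: hides the backend's raw tuple format behind a clean interface.
def customer_system_api(customer_id: str) -> Customer:
    first, last, email = LEGACY_CUSTOMERS[customer_id]
    return Customer(customer_id, first, last, email)

def order_system_api(customer_id: str) -> list[Order]:
    return [Order(oid, total) for oid, total in LEGACY_ORDERS.get(customer_id, [])]

# Process layer: composes system APIs into a business capability,
# without knowing which channel will consume the result.
def customer_summary_process_api(customer_id: str) -> dict:
    customer = customer_system_api(customer_id)
    orders = order_system_api(customer_id)
    return {
        "customer": customer,
        "order_count": len(orders),
        "lifetime_value": sum(o.total for o in orders),
    }

# Experience layer: reshapes the process API output for one consumer,
# here a hypothetical mobile app that wants a small payload.
def mobile_experience_api(customer_id: str) -> dict:
    summary = customer_summary_process_api(customer_id)
    c = summary["customer"]
    return {"name": f"{c.first_name} {c.last_name}", "orders": summary["order_count"]}
```

If the legacy backend changes its record format, only the system layer functions need to change; the process and experience layers keep working against `Customer` and `Order`.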

Question 6

A Mule application exposes an HTTPS endpoint and is deployed to three CloudHub workers that do not use static IP addresses. The Mule application expects a high volume of client requests in short time periods. What is the most cost-effective infrastructure component that should be used to serve the high volume of client requests?

- A. A customer-hosted load balancer

- B. The CloudHub shared load balancer

- C. An API proxy

- D. Runtime Manager autoscaling

Answer:

B

Explanation:

Correct Answer: The CloudHub shared load balancer

*****************************************

The scenario in this question can be broken down as follows:

>> There are 3 CloudHub workers (so there are already a good number of workers to handle a high volume of requests)

>> The workers are not using static IP addresses (so one CANNOT use customer load-balancing solutions without static IPs)

>> We are looking for the most cost-effective component to load balance the client requests among the workers.

Based on the above details given in the scenario:

>> Runtime Manager autoscaling is NOT at all cost-effective, as it incurs extra cost. Moreover, there are already 3 workers running, which is a good number.

>> A customer-hosted load balancer is also NOT the most cost-effective option (it requires maintaining and licensing a custom load balancer), and at the same time the Mule app does not have static IP addresses, which rules out custom load balancing.

>> An API proxy is irrelevant here, as it plays no role in handling high volumes or load balancing.

So, the only option that fits the scenario and is the most cost-effective is the CloudHub shared load balancer.
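A minimal sketch of the job the shared load balancer does in this scenario: spreading incoming requests across the three existing workers. It assumes simple round-robin distribution; the real CloudHub shared load balancer's routing algorithm, health checks, and TLS termination are not modeled, and the worker names are hypothetical.

```python
from __future__ import annotations

from itertools import cycle

class RoundRobinBalancer:
    """Hands each incoming request to the next worker in a fixed rotation."""

    def __init__(self, workers: list[str]) -> None:
        if not workers:
            raise ValueError("at least one worker is required")
        self._rotation = cycle(workers)

    def route(self) -> str:
        # Request content is ignored; only the rotation order decides the worker.
        return next(self._rotation)

# Three hypothetical worker names, matching the three workers in the scenario.
balancer = RoundRobinBalancer(["worker-0", "worker-1", "worker-2"])
assignments = [balancer.route() for _ in range(9)]
```

Because the workers already exist, distributing load this way adds capacity without adding workers or running extra infrastructure, which is the cost argument made above.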

Question 7

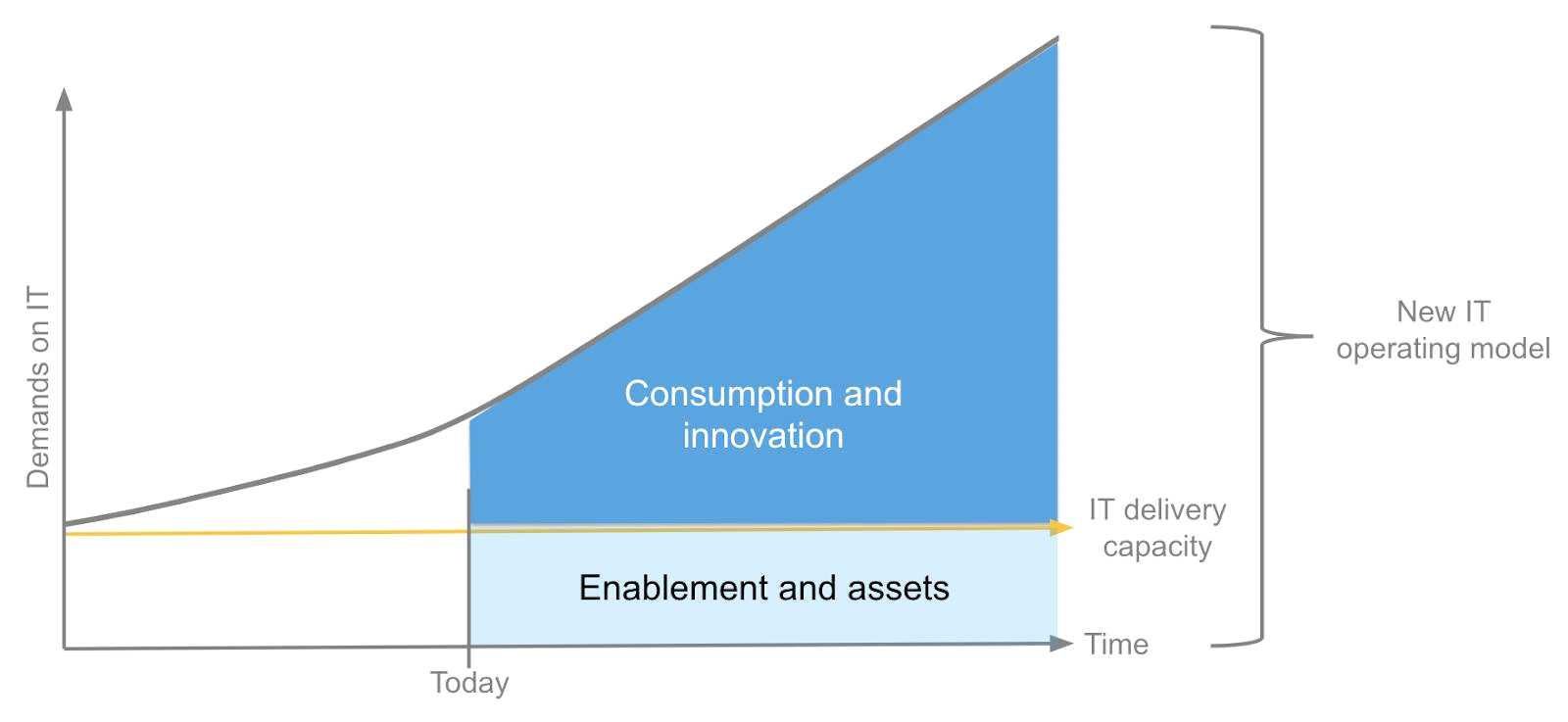

What are the major benefits of MuleSoft proposed IT Operating Model?

A.

1. Decrease the IT delivery gap

2. Meet various business demands without increasing the IT capacity

3. Focus on creating reusable assets first. Only after all possible assets have been created, inform the LOBs in the organization to start using them

B.

1. Decrease the IT delivery gap

2. Meet various business demands by increasing the IT capacity and forming various IT departments

3. Make consumption of assets at the rate of production

C.

1. Decrease the IT delivery gap

2. Meet various business demands without increasing the IT capacity

3. Make consumption of assets at the rate of production

Answer:

C

Explanation:

Correct Answer:

1. Decrease the IT delivery gap

2. Meet various business demands without increasing the IT capacity

3. Make consumption of assets at the rate of production.

*****************************************

Reference:

https://www.youtube.com/watch?v=U0FpYMnMjmM

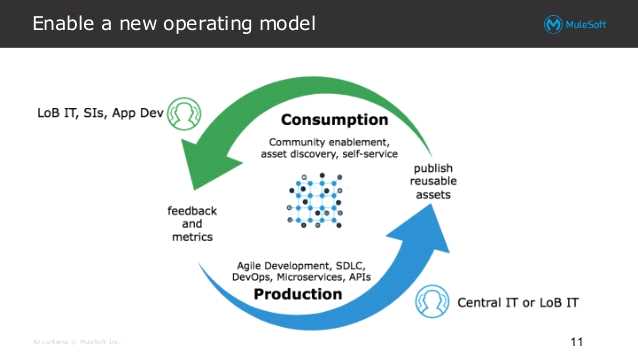

Question 8

Which of the following best fits the definition of API-led connectivity?

- A. API-led connectivity is not just an architecture or technology but also a way to organize people and processes for efficient IT delivery in the organization

- B. API-led connectivity is a 3-layered architecture covering Experience, Process and System layers

- C. API-led connectivity is a technology which enables us to implement Experience, Process and System layer based APIs

Answer:

A

Explanation:

Correct Answer: API-led connectivity is not just an architecture or technology but also a way to organize people and processes for efficient IT delivery in the organization.

*****************************************

Reference:

https://blogs.mulesoft.com/dev/api-dev/what-is-api-led-connectivity/

Question 9

A system API has a guaranteed SLA of 100 ms per request. The system API is deployed to a primary environment as well as to a disaster recovery (DR) environment, with different DNS names in each environment. An upstream process API invokes the system API, and the main goal of this process API is to respond to client requests in the least possible time. In what order should the system APIs be invoked, and what changes should be made in order to speed up the response time for requests from the process API?

- A. In parallel, invoke the system API deployed to the primary environment and the system API deployed to the DR environment, and ONLY use the first response

- B. In parallel, invoke the system API deployed to the primary environment and the system API deployed to the DR environment using a scatter-gather configured with a timeout, and then merge the responses

- C. Invoke the system API deployed to the primary environment, and if it fails, invoke the system API deployed to the DR environment

- D. Invoke ONLY the system API deployed to the primary environment, and add timeout and retry logic to avoid intermittent failures

Answer:

A

Explanation:

Correct Answer: In parallel, invoke the system API deployed to the primary environment and the system API deployed to the DR environment, and ONLY use the first response.

*****************************************

>> The API requirement in the given scenario is to respond in the least possible time.

>> The option that suggests first trying the API in the primary environment and then falling back to the API in the DR environment would result in a successful response, but NOT in the least possible time. So, this is NOT the right implementation for the given requirement.

>> Another option, which suggests invoking ONLY the API in the primary environment and adding timeout and retries, may also eventually return a successful response, but NOT in the least possible time. So, this is also NOT the right implementation for the given requirement.

>> One more option suggests invoking the APIs in the primary and DR environments in parallel using Scatter-Gather. This would return the wrong API response, because Scatter-Gather merges the results. Moreover, although Scatter-Gather runs its routes in parallel, it completes only after all routes inside it have finished. So again, this is NOT the right implementation for the given requirement.

The correct choice is to invoke the API in the primary environment and the API in the DR environment in parallel, and use ONLY the first response received from either of them.
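The "invoke both in parallel, keep only the first response" pattern can be sketched with Python's `asyncio`. Here `call_system_api` is a hypothetical stand-in that simulates network latency with `asyncio.sleep`; in a real Mule application this would be built from Mule components, not Python. The sketch also skips a deployment that fails, so a fast error does not win the race, which is a small assumption beyond the option text.

```python
import asyncio
from typing import Awaitable, Iterable

async def call_system_api(name: str, latency_s: float, fail: bool = False) -> str:
    """Hypothetical stand-in for an HTTP call to one deployment of the system API."""
    await asyncio.sleep(latency_s)
    if fail:
        raise ConnectionError(f"{name} deployment unavailable")
    return f"response from {name}"

async def first_response(calls: Iterable[Awaitable[str]]) -> str:
    """Start every call in parallel and return the first successful result."""
    tasks = [asyncio.ensure_future(call) for call in calls]
    try:
        for next_finished in asyncio.as_completed(tasks):
            try:
                return await next_finished
            except ConnectionError:
                continue  # this deployment failed; keep waiting for the other one
        raise RuntimeError("every deployment failed")
    finally:
        for task in tasks:
            task.cancel()  # drop the slower call(s); their results are not needed

async def main() -> str:
    return await first_response([
        call_system_api("primary", latency_s=0.10),
        call_system_api("dr", latency_s=0.05),
    ])

if __name__ == "__main__":
    print(asyncio.run(main()))  # the DR call is faster here, so its response wins
```

Unlike Scatter-Gather, which waits for every route, this returns as soon as one call succeeds and cancels the rest.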

Question 10

The application network is recomposable: it is built for change because it "bends but does not break".

A. TRUE

B. FALSE

Answer:

A

*****************************************

>> An application network is a recomposable architecture.

>> This means it can be altered without disturbing the entire architecture and its components.

>> It bends as requirements or designs change, but it does not break.

Reference:

https://www.mulesoft.com/resources/api/what-is-an-application-network

Question 11

A company has created a successful enterprise data model (EDM). The company is committed to building an application network by adopting modern APIs as a core enabler of the company's IT operating model. At what API tiers (experience, process, system) should the company require reusing the EDM when designing modern API data models?

- A. At the experience and process tiers

- B. At the experience and system tiers

- C. At the process and system tiers

- D. At the experience, process, and system tiers

Answer:

C

Explanation:

Correct Answer: At the process and system tiers

*****************************************

>> Experience Layer APIs are modeled and designed exclusively for the end user's experience. So, the data models of the experience layer vary based on the nature and type of each API consumer. For example, mobile consumers need lightweight data models that transfer easily over the wire, whereas web-based consumers need detailed data models to render most of the information on web pages, and so on. So, enterprise data models serve well as canonical models but are not a good fit for experience APIs.

>> That is why EDMs should be used extensively in the process and system tiers, but NOT in the experience tier.
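A minimal sketch of this split, assuming a hypothetical `EdmCustomer` entity as the canonical model shared by system and process APIs, with each experience API mapping it to its own consumer-specific shape. All field and function names are illustrative, not taken from any real EDM.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EdmCustomer:
    """Hypothetical canonical (EDM) entity reused by system and process APIs."""
    customer_id: str
    full_name: str
    email: str
    street: str
    city: str
    loyalty_tier: str

def to_mobile_view(c: EdmCustomer) -> dict:
    """Experience model for a hypothetical mobile app: a light payload."""
    return {"id": c.customer_id, "name": c.full_name}

def to_web_view(c: EdmCustomer) -> dict:
    """Experience model for a hypothetical web portal: detail for a full page."""
    return {
        "id": c.customer_id,
        "name": c.full_name,
        "email": c.email,
        "address": f"{c.street}, {c.city}",
        "tier": c.loyalty_tier,
    }
```

The canonical entity stays stable across the process and system tiers, while each experience API is free to reshape it without forcing changes on the others.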

Question 12

Due to a limitation in the backend system, a system API can only handle up to 500 requests per second. What is the best type of API policy to apply to the system API to avoid overloading the backend system?

- A. Rate limiting

- B. HTTP caching

- C. Rate limiting - SLA based

- D. Spike control

Answer:

D

Explanation:

Correct Answer: Spike control

*****************************************

>> First things first, the HTTP Caching policy serves purposes other than protecting the backend system from overload. So this option is OUT.

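>> Rate Limiting and Throttling/Spike Control policies are both designed to limit API access, but they have different intentions.

>> Rate limiting protects an API by applying a hard limit on its access: requests over the limit are rejected.

>> Throttling/Spike Control shapes API access by smoothing spikes in traffic: requests over the limit are delayed and retried, and are rejected only if they still exceed the limit after the configured retry attempts.

That is why Spike Control is the right option: it keeps the backend within 500 requests per second without failing every request in a short burst.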
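The difference can be sketched with simulated time in milliseconds. This is a simplified model, not MuleSoft's implementation: both policies share a fixed-window counter, rate limiting rejects any request over the limit, and spike control waits `delay_ms` and retries up to `delay_attempts` times. Those two parameter names echo the idea of a spike control configuration but are this sketch's own assumptions, not the exact policy keys.

```python
from collections import defaultdict
from typing import List, Optional

class FixedWindowCounter:
    """Counts admitted requests per fixed window of `window_ms` milliseconds."""

    def __init__(self, limit: int, window_ms: int) -> None:
        self.limit = limit
        self.window_ms = window_ms
        self.counts = defaultdict(int)  # window index -> requests admitted in it

    def try_acquire(self, now_ms: int) -> bool:
        window = now_ms // self.window_ms
        if self.counts[window] < self.limit:
            self.counts[window] += 1
            return True
        return False

def rate_limit(arrivals_ms: List[int], limit: int, window_ms: int) -> List[bool]:
    """Rate limiting: a request over the limit is rejected immediately."""
    counter = FixedWindowCounter(limit, window_ms)
    return [counter.try_acquire(t) for t in arrivals_ms]

def spike_control(arrivals_ms: List[int], limit: int, window_ms: int,
                  delay_ms: int, delay_attempts: int) -> List[Optional[int]]:
    """Spike control: an over-limit request waits and retries; returns the time it
    is admitted, or None if it is still over the limit after every attempt."""
    counter = FixedWindowCounter(limit, window_ms)
    admitted: List[Optional[int]] = []
    for arrival in arrivals_ms:
        t, result = arrival, None
        for _ in range(delay_attempts + 1):
            if counter.try_acquire(t):
                result = t
                break
            t += delay_ms  # wait, then try again in a later window
        admitted.append(result)
    return admitted

# A burst of 600 requests at t=0 against the 500 requests/second backend limit.
burst = [0] * 600
accepted = rate_limit(burst, limit=500, window_ms=1000)
smoothed = spike_control(burst, limit=500, window_ms=1000, delay_ms=1000, delay_attempts=1)
```

With this burst, rate limiting rejects 100 requests outright, while spike control admits all 600 by pushing the last 100 into the next second, so the backend never sees more than 500 in any window. Keeping one counter per window makes the sketch independent of processing order; a production limiter would discard old windows.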

Question 13

A retail company with thousands of stores has an API to receive data about purchases and insert it into a single database. Each individual store sends a batch of purchase data to the API about every 30 minutes. The API implementation uses a database bulk insert command to submit all the purchase data to a database using a custom JDBC driver provided by a data analytics solution provider. The API implementation is deployed to a single CloudHub worker. The JDBC driver processes the data into a set of several temporary disk files on the CloudHub worker, and then the data is sent to an analytics engine using a proprietary protocol. This process usually takes less than a few minutes. Sometimes a request fails. In this case, the logs show a message from the JDBC driver indicating an out-of-file-space condition. When the request is resubmitted, it is successful. What is the best way to try to resolve this throughput issue?

- A. Use a CloudHub autoscaling policy to add CloudHub workers

- B. Use a CloudHub autoscaling policy to increase the size of the CloudHub worker

- C. Increase the size of the CloudHub worker(s)

- D. Increase the number of CloudHub workers

Answer:

C

Explanation:

Correct Answer: Increase the size of the CloudHub worker(s)

*****************************************

The key details that we can take from the given scenario are:

>> The API implementation uses a database bulk insert command to submit all the purchase data to a database

>> JDBC driver processes the data into a set of several temporary disk files on the CloudHub worker

>> Sometimes a request fails and the logs show a message indicating an out-of-file-space message

Based on the above details:

>> Neither auto-scaling option helps, because auto-scaling rules cannot be based on error messages. Auto-scaling rules are triggered by CPU/memory usage, not by specific errors or disk space issues.

>> Increasing the number of CloudHub workers also does NOT help here, because the failure is not caused by CPU or memory performance. It is caused by a lack of disk space.

>> Moreover, the API does a bulk insert to submit each received batch. This means all the data of a batch is handled by only ONE worker at a time. So, the disk space issue must be tackled on a "per worker" basis. Having multiple workers does not help, as the batch may still fail on any worker that runs out of disk space.

Therefore, the right way to resolve this issue is to increase the vCore size of the worker, so that a new worker with more disk space is provisioned.

Question 14

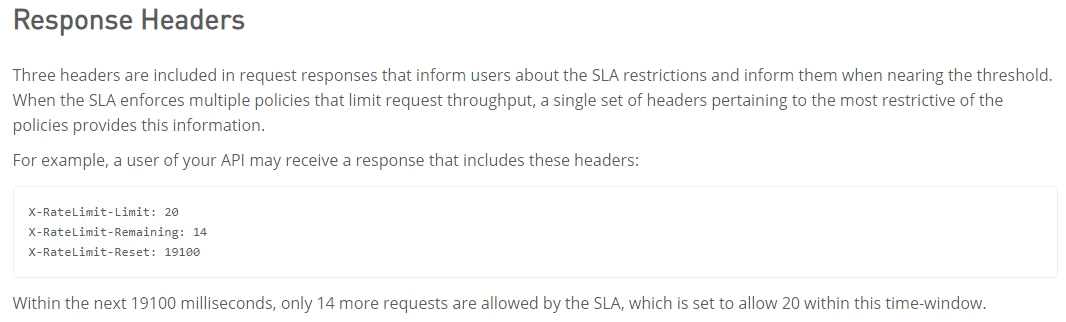

An API implementation returns three X-RateLimit-* HTTP response headers to a requesting API client. What type of information do these response headers indicate to the API client?

- A. The error codes that result from throttling

- B. A correlation ID that should be sent in the next request

- C. The HTTP response size

- D. The remaining capacity allowed by the API implementation

Answer:

D

Explanation:

Correct Answer: The remaining capacity allowed by the API implementation.

*****************************************

Reference:

https://docs.mulesoft.com/api-manager/2.x/rate-limiting-and-throttling-sla-based-policies#response-headers
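A minimal client-side sketch of reading these headers. It assumes the header names `X-Ratelimit-Limit`, `X-Ratelimit-Remaining`, and `X-Ratelimit-Reset`, with the reset value in milliseconds, as described on the referenced page; verify both against the policy version you use. HTTP header names are case-insensitive, so the sketch lowercases them before lookup.

```python
from dataclasses import dataclass
from typing import Mapping, Optional

@dataclass
class RateLimitStatus:
    limit: int      # maximum requests allowed in the current window
    remaining: int  # requests still allowed in the current window
    reset_ms: int   # time until a new window starts (assumed milliseconds)

def parse_rate_limit_headers(headers: Mapping[str, str]) -> Optional[RateLimitStatus]:
    """Read the X-RateLimit-* headers, matching names case-insensitively."""
    lowered = {k.lower(): v for k, v in headers.items()}
    try:
        return RateLimitStatus(
            limit=int(lowered["x-ratelimit-limit"]),
            remaining=int(lowered["x-ratelimit-remaining"]),
            reset_ms=int(lowered["x-ratelimit-reset"]),
        )
    except (KeyError, ValueError):
        return None  # headers absent or malformed: no capacity information

def wait_before_next_call_ms(status: RateLimitStatus) -> int:
    """Send immediately while quota remains; otherwise wait for the window reset."""
    return 0 if status.remaining > 0 else status.reset_ms
```

A client that honors the remaining capacity this way avoids sending requests that would only be rejected.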

Question 15

An API has been updated in Anypoint Exchange by its API producer from version 3.1.1 to 3.2.0, following accepted semantic versioning practices, and the changes have been communicated via the API's public portal. The API endpoint does NOT change in the new version. How should the developer of an API client respond to this change?

- A. The API producer should be requested to run the old version in parallel with the new one

- B. The API producer should be contacted to understand the change to existing functionality

- C. The API client code only needs to be changed if it needs to take advantage of the new features

- D. The API clients need to update the code on their side and need to do full regression

Answer:

C
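Under semantic versioning, going from 3.1.1 to 3.2.0 is a MINOR version bump, which signals new functionality added in a backward-compatible way. Existing client calls keep working, so the client only needs to change if it wants the new features. A minimal sketch of classifying a version change, assuming plain `MAJOR.MINOR.PATCH` strings (pre-release and build tags are not handled) and that the new version is not lower than the old one; the function names are hypothetical:

```python
from __future__ import annotations

def parse_semver(version: str) -> tuple[int, int, int]:
    """Split a plain MAJOR.MINOR.PATCH string into integers."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch

def classify_change(old: str, new: str) -> str:
    """Name the highest version component that changed; assumes new >= old."""
    o, n = parse_semver(old), parse_semver(new)
    if n[0] != o[0]:
        return "major"  # incompatible API changes
    if n[1] != o[1]:
        return "minor"  # backward-compatible new functionality
    if n[2] != o[2]:
        return "patch"  # backward-compatible bug fixes
    return "none"

def client_must_change(old: str, new: str) -> bool:
    """Only a major bump can break existing clients under semantic versioning."""
    return classify_change(old, new) == "major"

print(classify_change("3.1.1", "3.2.0"), client_must_change("3.1.1", "3.2.0"))
```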