Microsoft DP-420 Practice Test

Designing and Implementing Cloud-Native Applications Using Microsoft Azure Cosmos DB

Question 1

HOTSPOT

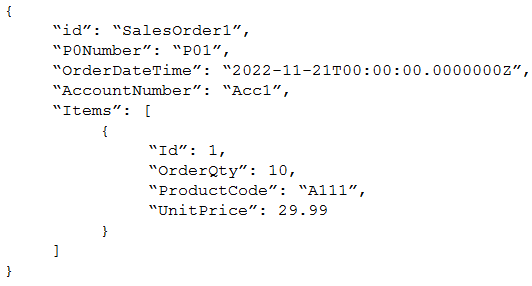

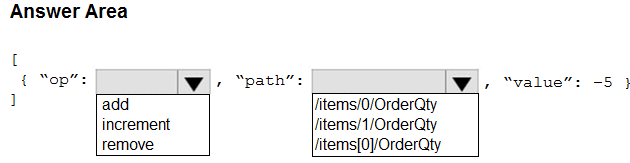

You have an Azure Cosmos DB for NoSQL container that contains the following item.

You need to update the OrderQty value to 5 by using a patch operation.

How should you complete the JSON Patch document? To answer, select the appropriate options in the answer area.

Answer:
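Azure Cosmos DB partial document update takes a JSON Patch style list of operations. Each operation has an `op`, a `path` to the property, and, for most operations, a `value`. The following Python sketch applies only the `set` and `replace` operations to an in-memory dictionary. It shows how a path such as `/OrderQty` or `/lines/0/OrderQty` selects the property to change. The item shape, the `lines` property, and the helper names are hypothetical. The sketch ignores JSON Pointer escaping and is not the service's implementation.

```python
import copy


def _resolve(doc, path):
    """Walk a pointer-style path such as /lines/0/OrderQty and return (parent container, final key)."""
    parts = path.lstrip("/").split("/")
    parent = doc
    for part in parts[:-1]:
        # List segments are numeric indexes; object segments are property names.
        parent = parent[int(part)] if isinstance(parent, list) else parent[part]
    last = parts[-1]
    return parent, int(last) if isinstance(parent, list) else last


def apply_patch(doc, operations):
    """Apply a subset of patch operations (set, replace) to a copy of `doc`."""
    result = copy.deepcopy(doc)
    for op in operations:
        parent, key = _resolve(result, op["path"])
        exists = key in parent if isinstance(parent, dict) else 0 <= key < len(parent)
        if op["op"] == "replace" and not exists:
            # replace only updates a property that already exists
            raise KeyError(f"{op['path']} does not exist")
        if op["op"] in ("set", "replace"):
            # set also creates a missing object property; appending to arrays is out of scope here
            parent[key] = op["value"]
        else:
            raise ValueError(f"operation not covered by this sketch: {op['op']}")
    return result


item = {"id": "order-1", "OrderQty": 2}  # hypothetical item
patched = apply_patch(item, [{"op": "set", "path": "/OrderQty", "value": 5}])
print(patched["OrderQty"])  # 5
```

In the sketch, `replace` fails when the target property is missing, while `set` creates it. This mirrors the documented difference between the two operations.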

Question 2

You are creating an Azure Cosmos DB for NoSQL account.

You need to choose whether to configure capacity by using either provisioned throughput or serverless mode.

Which two workload characteristics cause you to choose serverless mode? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. geo-distribution requirements

- B. low average-to-peak traffic ratio

- C. 25 TB of data in a single container

- D. high average-to-peak traffic ratio

- E. intermittent traffic

Answer:

b, e
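The average-to-peak ratio compares typical consumption with the busiest moment. The following Python sketch computes that ratio from sampled RU/s values. The 0.2 threshold and the sample series are hypothetical. They only illustrate why spiky, mostly idle traffic (a low ratio) leans toward serverless, while sustained traffic (a high ratio) leans toward provisioned throughput.

```python
from statistics import mean


def average_to_peak_ratio(rus_per_interval: list[float]) -> float:
    """Average consumed RU/s divided by peak consumed RU/s over the sampled window."""
    peak = max(rus_per_interval)
    return mean(rus_per_interval) / peak if peak > 0 else 0.0


def looks_serverless_friendly(rus_per_interval: list[float], threshold: float = 0.2) -> bool:
    # threshold is a hypothetical cut-off for illustration, not a value from Azure documentation
    return average_to_peak_ratio(rus_per_interval) < threshold


spiky = [0, 0, 0, 0, 500, 0, 0, 0, 0, 0]  # idle most of the time, one short burst
steady = [400, 450, 500, 480, 420]         # sustained traffic close to the peak
print(average_to_peak_ratio(spiky), looks_serverless_friendly(spiky))    # 0.1 True
print(average_to_peak_ratio(steady), looks_serverless_friendly(steady))  # 0.9 False
```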

Question 3

You are building an application that will store data in an Azure Cosmos DB Core (SQL) API account. The account uses the session default consistency level. The account is used by five other applications. The account has a single read-write region and 10 additional read regions.

Approximately 20 percent of the items stored in the account are updated hourly.

Several users will access the new application from multiple devices.

You need to ensure that the users see the same item values consistently when they browse from the different devices. The solution must NOT affect the other applications.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. Set the default consistency level to eventual

- B. Associate a session token to the device

- C. Use implicit session management when performing read requests

- D. Provide a stored session token when performing read requests

- E. Associate a session token to the user account

Answer:

d, e

Keep Session consistency and share the session token across client instances: store the token per user account and provide the stored token on read requests. Stateful Entities in Durable Functions, or a similar mechanism that acts as a distributed mutex, can store and update the session token across multiple Cosmos client instances.

Note: Session consistency is the default consistency level when you create an Azure Cosmos DB account. It is scoped to a client session: reads issued with the same session token are guaranteed to see the writes made in that session (read-your-writes). In other words, whatever a session writes, it reads back at the latest version.

Incorrect:

Not A: Changing the account's default consistency level would also affect the other five applications. In addition, eventual consistency is the weakest consistency level of all. In this model there is no guarantee about the order in which reads observe writes, and no bound on how long replication can take. As the name suggests, reads become consistent eventually.

This model offers high availability, low latency, and the highest throughput of all levels. It suits applications that do not require any ordering guarantee, such as counts of retweets, likes, or non-threaded comments, where the count matters more than any other information.

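Not B: A session token associated with a device is not shared with the user's other devices, so reads from a different device are not guaranteed to return the same values.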

Reference:

https://stackoverflow.com/questions/64084499/can-i-use-a-client-constructed-session-token-for-cosmosdb

Question 4

You have an Azure Cosmos DB Core (SQL) API account.

The change feed is enabled on a container named invoice.

You create an Azure function that has a trigger on the change feed.

What is received by the Azure function?

- A. only the changed properties and the system-defined properties of the updated items

- B. only the partition key and the changed properties of the updated items

- C. all the properties of the original items and the updated items

- D. all the properties of the updated items

Answer:

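d

The change feed delivers the latest version of each created or updated item in full, including its system-defined properties. It does not deliver only the changed properties, and it does not include the original version of the item.

Change feed is available for each logical partition key within the container.

The change feed is sorted by the order of modification within each logical partition key value.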
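The per-partition ordering can be pictured with the following Python sketch. It groups a hypothetical stream of invoice writes by an assumed `customerId` partition key. Within each key, entries keep their modification order; across keys, the sketch's dictionary order carries no guarantee. The sketch also assumes that the consumer sees every version. In practice, latest-version mode can collapse several rapid updates of one item into a single entry.

```python
from collections import defaultdict

# Hypothetical invoice writes in commit order; customerId is an assumed partition key.
writes = [
    {"id": "inv-1", "customerId": "c1", "total": 100},
    {"id": "inv-2", "customerId": "c2", "total": 40},
    {"id": "inv-1", "customerId": "c1", "total": 120},  # update of inv-1
    {"id": "inv-3", "customerId": "c2", "total": 75},
]


def feed_per_partition(modifications, pk="customerId"):
    """Group whole modified items by logical partition key, keeping modification order within each key."""
    feeds = defaultdict(list)
    for item in modifications:
        feeds[item[pk]].append(dict(item))  # each entry is a copy of the whole item
    return dict(feeds)


for key, items in feed_per_partition(writes).items():
    print(key, [(i["id"], i["total"]) for i in items])
```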

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/change-feed

Question 5

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account.

You need to make the contents of container1 available as reference data for an Azure Stream Analytics job.

Solution: You create an Azure function to copy data to another Azure Cosmos DB Core (SQL) API container.

Does this meet the goal?

- A. Yes

- B. No

Answer:

b

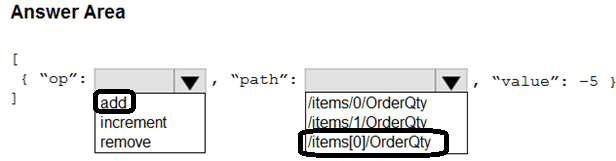

Instead, create an Azure function that uses the Azure Cosmos DB Core (SQL) API change feed as a trigger and Azure Event Hubs as the output.

Note: The Azure Cosmos DB change feed is a mechanism for getting a continuous and incremental feed of records from an Azure Cosmos container as those records are created or modified. Change feed support works by listening to the container for any changes. It then outputs the sorted list of documents that were changed, in the order in which they were modified.
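The incremental nature of the change feed can be modeled with a continuation value that records how far a consumer has read. In the following Python sketch, `ToyContainer`, `read_changes`, and `forward` are hypothetical stand-ins for a container, the change feed trigger, and an output binding. They are not Azure SDK or Azure Functions APIs.

```python
from dataclasses import dataclass, field


@dataclass
class ToyContainer:
    """In-memory stand-in for a container and its change feed; not the Azure SDK."""
    items: dict = field(default_factory=dict)
    log: list = field(default_factory=list)  # item ids in modification order

    def upsert(self, item: dict) -> None:
        self.items[item["id"]] = dict(item)
        self.log.append(item["id"])

    def read_changes(self, continuation: int = 0):
        """Return (latest version of items changed since `continuation`, new continuation)."""
        ordered: list[str] = []
        for item_id in self.log[continuation:]:
            if item_id in ordered:
                ordered.remove(item_id)  # keep only the position of the item's latest change
            ordered.append(item_id)
        return [dict(self.items[i]) for i in ordered], len(self.log)


def forward(changes, sink: list) -> None:
    """Hypothetical trigger body: push each changed document to an output stream."""
    sink.extend(changes)


container = ToyContainer()
sink: list = []
container.upsert({"id": "a", "qty": 1})
container.upsert({"id": "b", "qty": 2})
batch, token = container.read_changes()
forward(batch, sink)
container.upsert({"id": "a", "qty": 3})
batch, token = container.read_changes(token)  # only changes after the saved continuation
forward(batch, sink)
print([d["id"] for d in sink])  # ['a', 'b', 'a']
```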

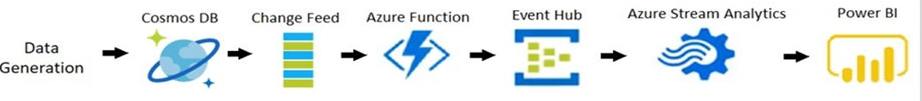

The following diagram represents the data flow and components involved in the solution:

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/changefeed-ecommerce-solution

Question 6

You have an Azure Cosmos DB Core (SQL) API account.

You configure the diagnostic settings to send all log information to a Log Analytics workspace.

You need to identify when the provisioned request units per second (RU/s) for resources within the account were modified.

You write the following query.

AzureDiagnostics | where Category == "ControlPlaneRequests"

What should you include in the query?

- A. | where OperationName startswith "AccountUpdateStart"

- B. | where OperationName startswith "SqlContainersDelete"

- C. | where OperationName startswith "MongoCollectionsThroughputUpdate"

- D. | where OperationName startswith "SqlContainersThroughputUpdate"

Answer:

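d

A throughput change is recorded as an API-specific control plane operation. These operations are named ApiKind + ApiKindResourceType + OperationType, so an RU/s change on an Azure Cosmos DB Core (SQL) API container is logged as SqlContainersThroughputUpdate. MongoCollectionsThroughputUpdate applies only to the API for MongoDB, and SqlContainersDelete records container deletions.

The following account-level operation names also appear in the diagnostic logs. They cover operations on the account itself, such as region changes, failovers, and account updates, rather than RU/s changes on databases or containers:

RegionAddStart, RegionAddComplete

RegionRemoveStart, RegionRemoveComplete

AccountDeleteStart, AccountDeleteComplete

RegionFailoverStart, RegionFailoverComplete

AccountCreateStart, AccountCreateComplete

AccountUpdateStart, AccountUpdateComplete

VirtualNetworkDeleteStart, VirtualNetworkDeleteComplete

DiagnosticLogUpdateStart, DiagnosticLogUpdateComplete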
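The filter in this question can be modeled in Python to make its semantics concrete. The records below are hypothetical and carry only the two columns that the query uses. The function keeps control plane rows whose `OperationName` begins with the given prefix. It matches the prefix case-insensitively, as KQL's `startswith` does, while `==` on `Category` stays case-sensitive.

```python
# Hypothetical log rows; real AzureDiagnostics rows have many more columns.
records = [
    {"Category": "ControlPlaneRequests", "OperationName": "AccountUpdateStart"},
    {"Category": "ControlPlaneRequests", "OperationName": "SqlContainersThroughputUpdate"},
    {"Category": "ControlPlaneRequests", "OperationName": "SqlContainersDelete"},
    {"Category": "DataPlaneRequests", "OperationName": "Query"},
]


def control_plane_ops(rows, prefix):
    """Mimic: AzureDiagnostics | where Category == "ControlPlaneRequests" | where OperationName startswith prefix."""
    return [
        r for r in rows
        if r["Category"] == "ControlPlaneRequests"  # KQL == is case-sensitive
        and r["OperationName"].lower().startswith(prefix.lower())  # startswith is not
    ]


for prefix in ["AccountUpdateStart", "SqlContainersThroughputUpdate", "sqlcontainers"]:
    print(prefix, [r["OperationName"] for r in control_plane_ops(records, prefix)])
```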

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/audit-control-plane-logs

Question 7

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account.

You need to make the contents of container1 available as reference data for an Azure Stream Analytics job.

Solution: You create an Azure Synapse pipeline that uses Azure Cosmos DB Core (SQL) API as the input and Azure Blob Storage as the output.

Does this meet the goal?

- A. Yes

- B. No

Answer:

b

Instead, create an Azure function that uses the Azure Cosmos DB Core (SQL) API change feed as a trigger and Azure Event Hubs as the output.

The Azure Cosmos DB change feed is a mechanism for getting a continuous and incremental feed of records from an Azure Cosmos container as those records are created or modified. Change feed support works by listening to the container for any changes. It then outputs the sorted list of documents that were changed, in the order in which they were modified.

The following diagram represents the data flow and components involved in the solution:

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/changefeed-ecommerce-solution

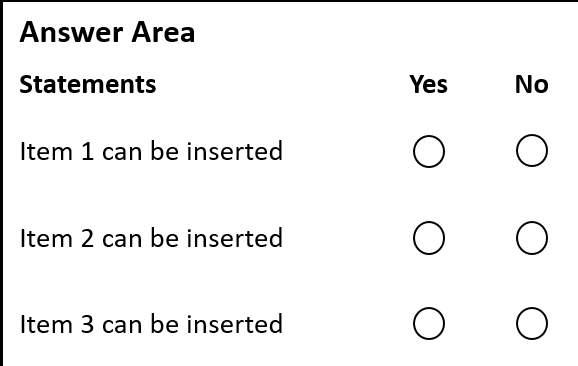

Question 8

HOTSPOT

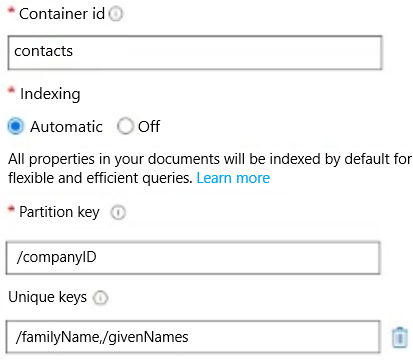

You have an Azure Cosmos DB for NoSQL container named Contacts that is configured as shown in the following exhibit.

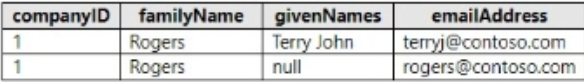

Contacts contains the items shown in the following table.

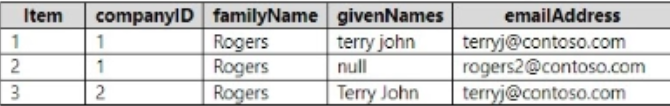

You plan to insert the items shown in the following table into Contacts.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Answer:

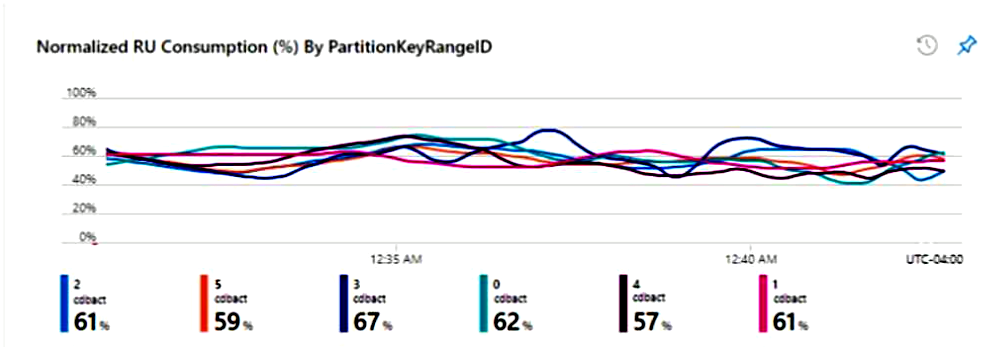

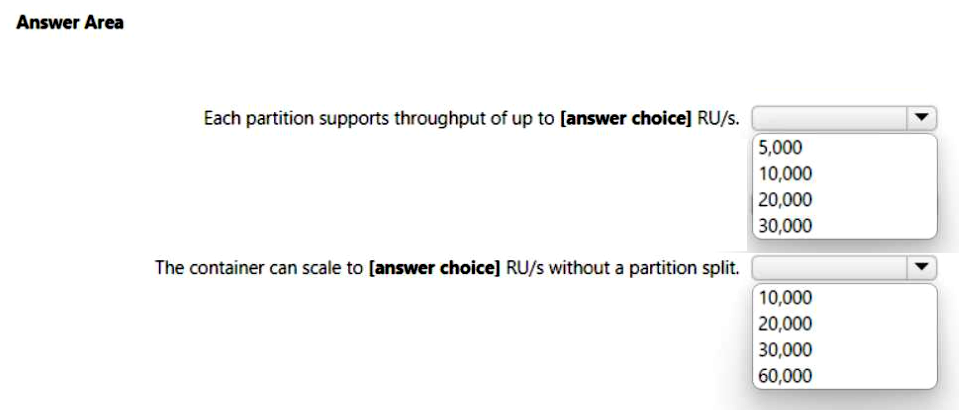

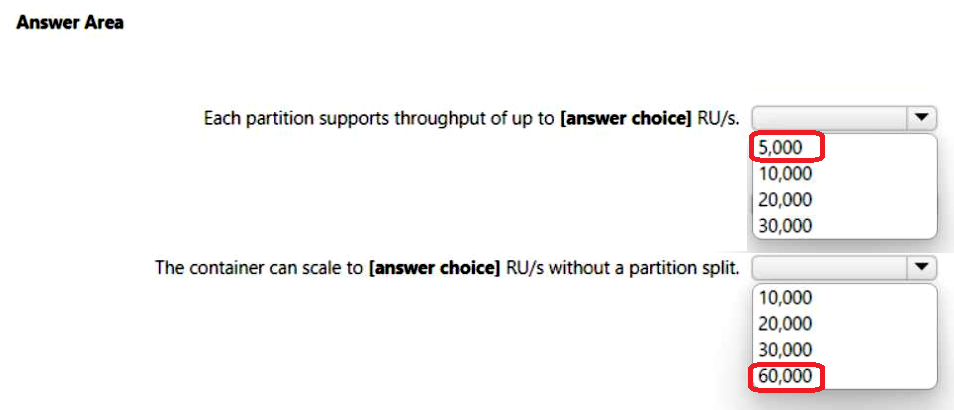

Question 9

HOTSPOT

You have a container in an Azure Cosmos DB Core (SQL) API account. The database that contains the container has a manual throughput of 30,000 request units per second (RU/s).

The current consumption details are shown in the following chart.

Use the drop-down menus to select the answer choice that answers each question based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

Answer:

Question 10

You have operational data in an Azure Cosmos DB for NoSQL database.

Database users report that the performance of the database degrades significantly when a business analytics team runs large Apache Spark-based queries against the database.

You need to reduce the impact that running the Spark-based queries has on the database users.

What should you implement?

- A. Azure Synapse Link

- B. a default consistency level of Consistent Prefix

- C. a default consistency level of Strong

- D. the Spark connector

Answer:

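a

Azure Synapse Link lets Spark pools query the container's analytical store. The analytical store is a column-oriented store that is kept separate from the transactional store, so analytical queries do not consume the container's provisioned RU/s or compete with operational requests. Changing the default consistency level (B, C) does not isolate the analytical workload. The Spark connector (D) still reads from the transactional store.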