Linux Foundation CKAD Practice Test

Certified Kubernetes Application Developer

Question 1

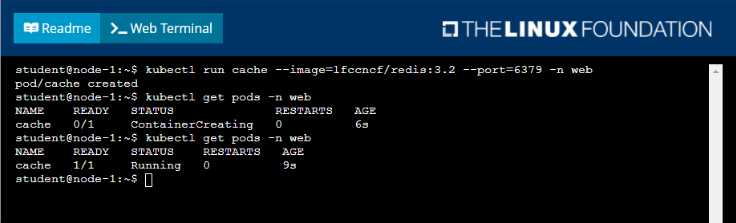

Refer to Exhibit.

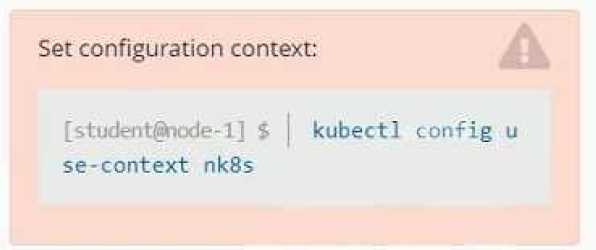

Set Configuration Context:

[student@node-1] $ kubectl config use-context k8s

Context

A web application requires a specific version of redis to be used as a cache.

Task

Create a pod with the following characteristics, and leave it running when complete:

• The pod must run in the web namespace. The namespace has already been created

• The name of the pod should be cache

• Use the lfccncf/redis image with the 3.2 tag

• Expose port 6379

Answer:

See the solution below.

Explanation:

Solution:
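A minimal sketch of one way to satisfy the task, assuming the image repository is lfccncf/redis. The manifest path and the container name are illustrative choices, not part of the task.

```shell
# Scratch location for the manifest (hypothetical path; any writable file works).
manifest="${TMPDIR:-/tmp}/cache-pod.yaml"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: cache
  namespace: web
spec:
  containers:
  - name: cache            # container name is an assumption
    image: lfccncf/redis:3.2
    ports:
    - containerPort: 6379
EOF

# Create the pod only when kubectl is installed; this needs access to the cluster.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f "$manifest"
  kubectl get pod cache -n web
fi
```

The same pod can be created imperatively with kubectl run cache --image=lfccncf/redis:3.2 --port=6379 -n web.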

Question 2

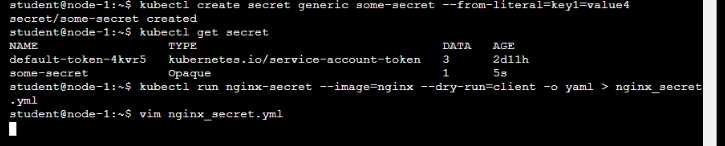

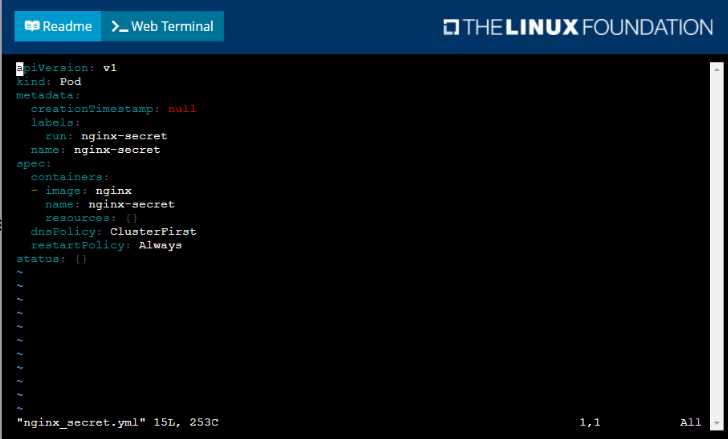

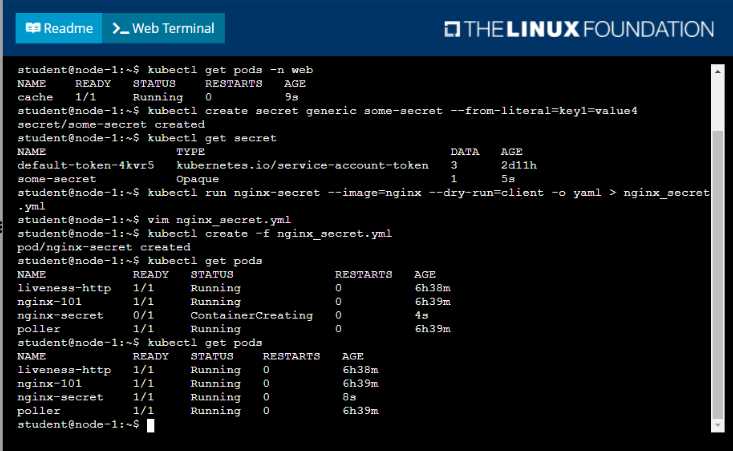

Refer to Exhibit.

Context

You are tasked to create a secret and consume the secret in a pod using environment variables as follows:

Task

• Create a secret named another-secret with a key/value pair: key1/value4

• Start an nginx pod named nginx-secret using container image nginx, and add an environment variable exposing the value of the secret key key1, using COOL_VARIABLE as the name for the environment variable inside the pod

Answer:

See the solution below.

Explanation:

Solution:

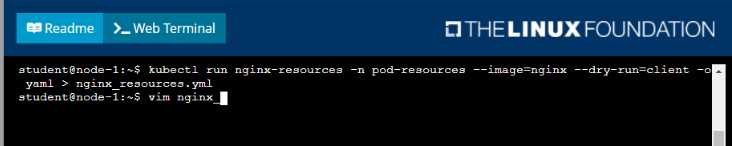

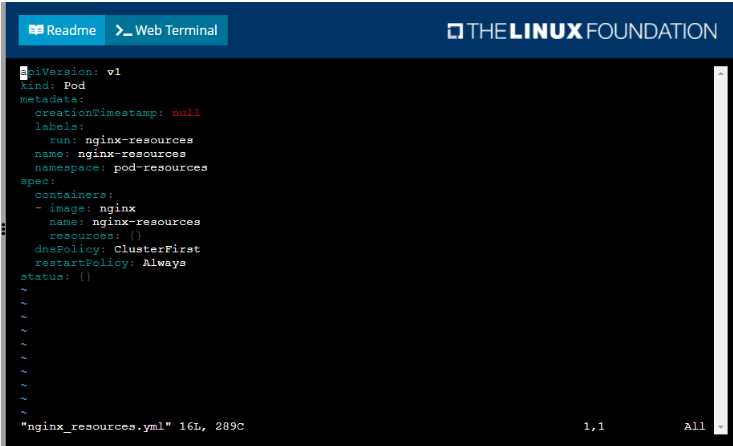

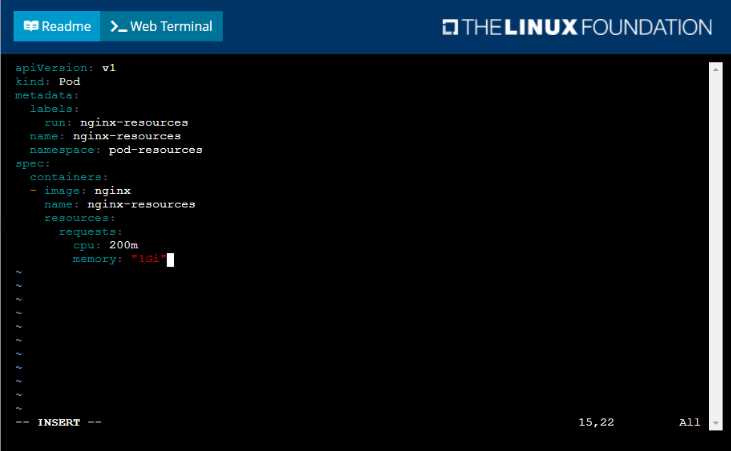

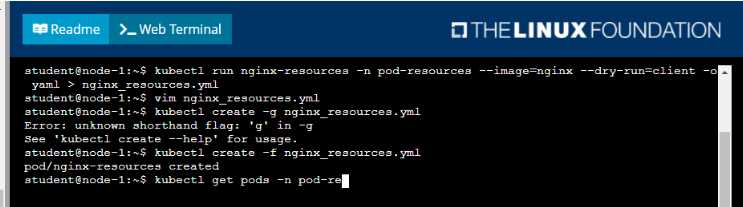

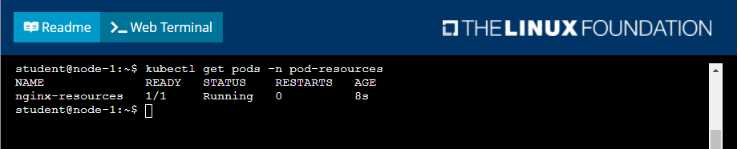
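The steps in this task could be sketched as a single manifest holding both the secret and the pod. The manifest path and the container name are assumptions made for illustration.

```shell
# Scratch location for the manifest (hypothetical path).
manifest="${TMPDIR:-/tmp}/nginx-secret.yaml"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: another-secret
stringData:                 # stringData takes plain text; the API server stores it base64-encoded
  key1: value4
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-secret
spec:
  containers:
  - name: nginx-secret      # container name is an assumption
    image: nginx
    env:
    - name: COOL_VARIABLE
      valueFrom:
        secretKeyRef:
          name: another-secret
          key: key1
EOF

# Apply and check the variable only when kubectl is installed and a cluster is reachable.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f "$manifest"
  kubectl exec nginx-secret -- printenv COOL_VARIABLE
fi
```

The secret alone can also be created with kubectl create secret generic another-secret --from-literal=key1=value4. The exec check only succeeds once the pod is running.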

Question 3

Refer to Exhibit.

Task

You are required to create a pod that requests a certain amount of CPU and memory, so it gets scheduled to a node that has those resources available.

• Create a pod named nginx-resources in the pod-resources namespace that requests a minimum of 200m CPU and 1Gi memory for its container

• The pod should use the nginx image

• The pod-resources namespace has already been created

Answer:

See the solution below.

Explanation:

Solution:

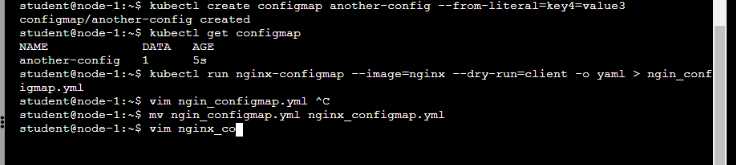

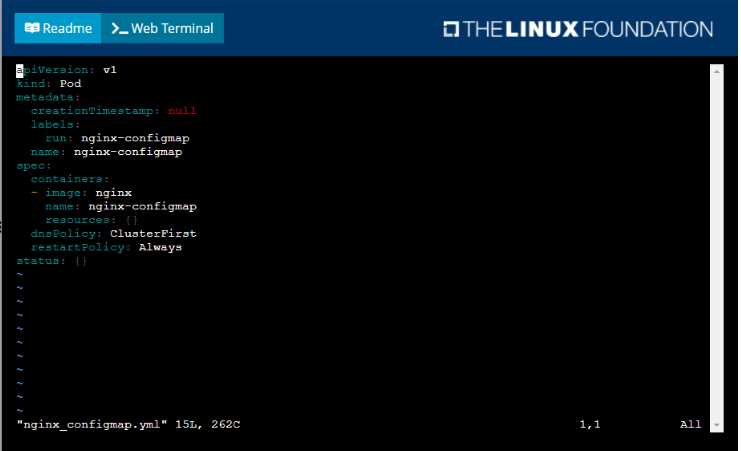

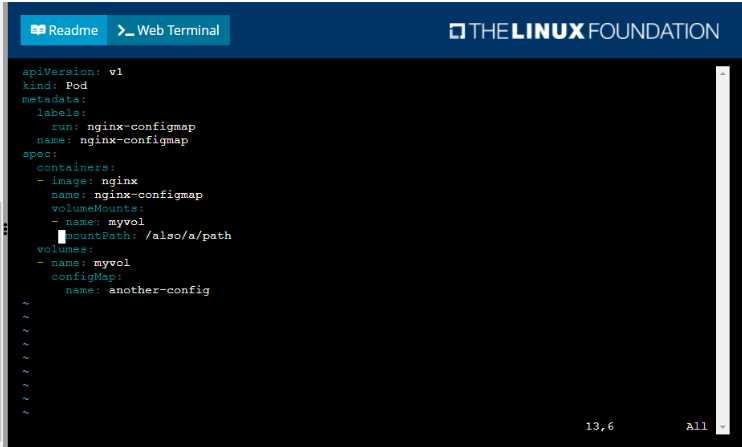

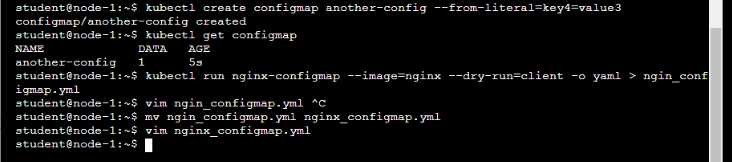

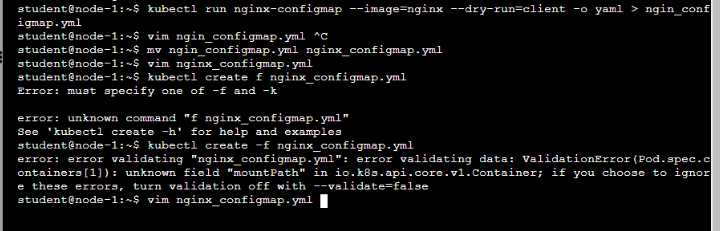

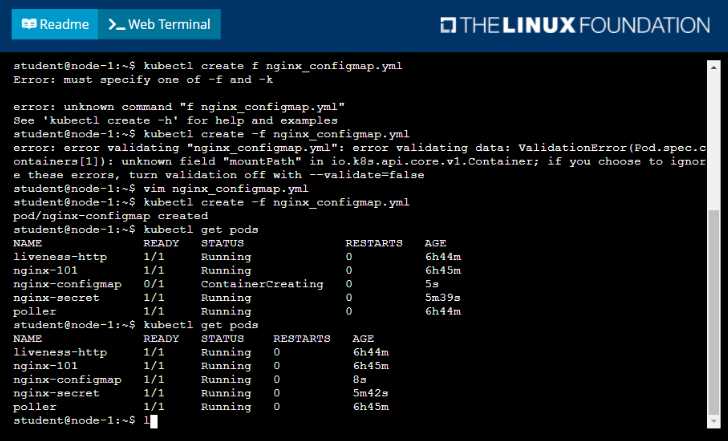
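A minimal sketch of a manifest that expresses the requested minimums through resources.requests. The manifest path and the container name are illustrative assumptions.

```shell
# Scratch location for the manifest (hypothetical path).
manifest="${TMPDIR:-/tmp}/nginx-resources.yaml"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx-resources
  namespace: pod-resources
spec:
  containers:
  - name: nginx-resources   # container name is an assumption
    image: nginx
    resources:
      requests:             # the scheduler only places the pod on a node with this much free
        cpu: 200m
        memory: 1Gi
EOF

# Apply only when kubectl is installed and a cluster is reachable.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f "$manifest"
  kubectl get pod nginx-resources -n pod-resources
fi
```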

Question 4

Refer to Exhibit.

Context

You are tasked to create a ConfigMap and consume the ConfigMap in a pod using a volume mount.

Task

Please complete the following:

• Create a ConfigMap named another-config containing the key/value pair: key4/value3

• Start a pod named nginx-configmap containing a single container using the nginx image, and mount the key you just created into the pod under directory /also/a/path

Answer:

See the solution below.

Explanation:

Solution:

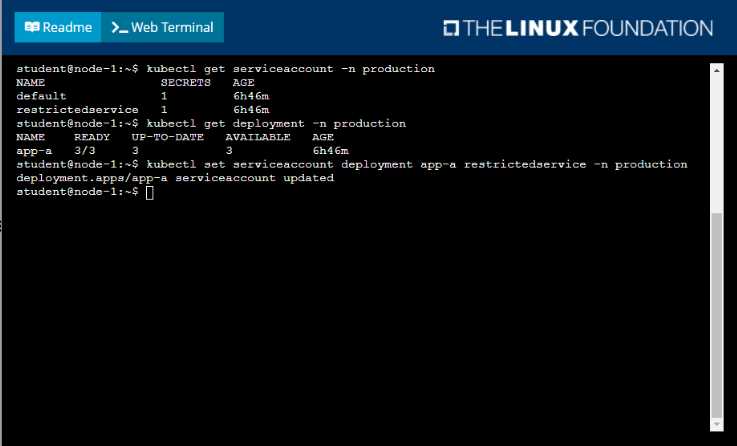
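One possible way to approach this task is a manifest that defines the ConfigMap and mounts it as a volume. The manifest path, the container name and the volume name are assumptions.

```shell
# Scratch location for the manifest (hypothetical path).
manifest="${TMPDIR:-/tmp}/nginx-configmap.yaml"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: another-config
data:
  key4: value3
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-configmap
spec:
  containers:
  - name: nginx-configmap   # container name is an assumption
    image: nginx
    volumeMounts:
    - name: config-volume   # volume name is an assumption
      mountPath: /also/a/path
  volumes:
  - name: config-volume
    configMap:
      name: another-config
EOF

# Apply and read the mounted key only when kubectl is installed and a cluster is reachable.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f "$manifest"
  kubectl exec nginx-configmap -- cat /also/a/path/key4
fi
```

Each key in the ConfigMap appears as a file under the mount path, so key4 becomes /also/a/path/key4 with value3 as its content. The exec check only succeeds once the pod is running.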

Question 5

Refer to Exhibit.

Context

Your application’s namespace requires a specific service account to be used.

Task

Update the app-a deployment in the production namespace to run as the restrictedservice service

account. The service account has already been created.

Answer:

See the solution below.

Explanation:

Solution:
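A minimal sketch using kubectl set serviceaccount, wrapped in two small shell functions so each step can be run and checked separately. The function names are illustrative.

```shell
# Point the deployment's pod template at the existing service account.
# Changing the pod template triggers a new rollout of the deployment.
set_service_account() {
  kubectl set serviceaccount deployment app-a restrictedservice -n production
}

# Print the service account recorded in the pod template, to confirm the change.
show_service_account() {
  kubectl get deployment app-a -n production \
    -o jsonpath='{.spec.template.spec.serviceAccountName}'
}
```

Run set_service_account first, then show_service_account, which should print restrictedservice once the update has been accepted.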

Question 6

Refer to Exhibit.

Set Configuration Context:

[student@node-1] $ kubectl config use-context k8s

Context

A pod is running on the cluster but it is not responding.

Task

The desired behavior is to have Kubernetes restart the pod when an endpoint returns an HTTP 500 on the /healthz endpoint. The service, probe-pod, should never send traffic to the pod while it is failing.

Please complete the following:

• The application has an endpoint, /started, that will indicate if it can accept traffic by returning an

HTTP 200. If the endpoint returns an HTTP 500, the application has not yet finished initialization.

• The application has another endpoint /healthz that will indicate if the application is still working as

expected by returning an HTTP 200. If the endpoint returns an HTTP 500 the application is no longer

responsive.

• Configure the probe-pod pod provided to use these endpoints

• The probes should use port 8080

Answer:

See the solution below.

Explanation:

Solution:

To have Kubernetes restart the container when the /healthz endpoint returns an HTTP 500, and to keep the probe-pod service from sending traffic to the pod while it is failing, configure a liveness probe and a readiness probe on the pod.

Add a livenessProbe and a readinessProbe to the container in the pod's definition YAML file. The livenessProbe checks the /healthz endpoint; if it returns an HTTP 500, the kubelet restarts the container. The readinessProbe checks the /started endpoint; if it returns an HTTP 500, the pod is removed from the service's endpoints and receives no traffic.

Here's an example of how you can configure the liveness and readiness probes in the pod definition YAML file:

    apiVersion: v1
    kind: Pod
    metadata:
      name: probe-pod
    spec:
      containers:
      - name: probe-pod
        image: <image-name>
        ports:
        - containerPort: 8080
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
          initialDelaySeconds: 15
          periodSeconds: 10
          failureThreshold: 3
        readinessProbe:
          httpGet:
            path: /started
            port: 8080
          initialDelaySeconds: 15
          periodSeconds: 10
          failureThreshold: 3

The httpGet field specifies the endpoint to check and the port to use. initialDelaySeconds is how long the kubelet waits after the container starts before running the first probe. periodSeconds is the interval between probe checks, and failureThreshold is the number of consecutive failed probes before the container is considered unhealthy (liveness) or not ready (readiness).

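Probes cannot be changed on a pod that is already running, so if probe-pod exists, delete and recreate it from the updated file by running the following command:

kubectl replace --force -f <filename>.yaml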

Once the pod is created, Kubernetes will start monitoring it using the configured liveness and readiness probes. If the /healthz endpoint returns an HTTP 500, the container will be restarted. If the /started endpoint returns an HTTP 500, the pod will not receive traffic.

Please note that with the failure threshold set to 3, a probe must fail 3 times consecutively before Kubernetes acts on the failure.

The above configuration assumes that the application is running on port 8080 and the endpoints are

available on the same port.

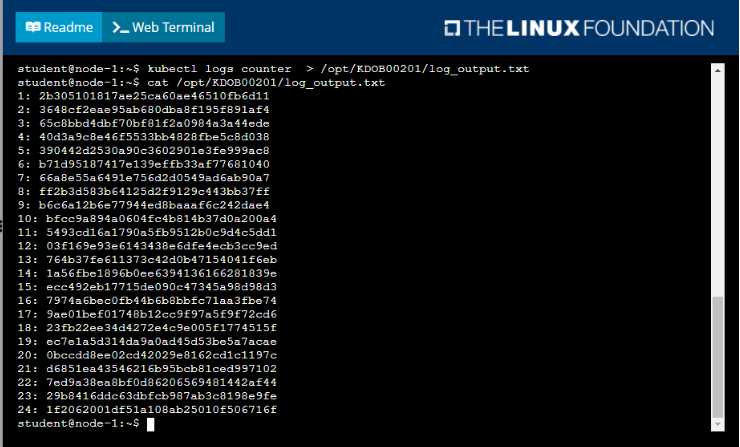

Question 7

Refer to Exhibit.

Set Configuration Context:

[student@node-1] $ kubectl config use-context k8s

Context

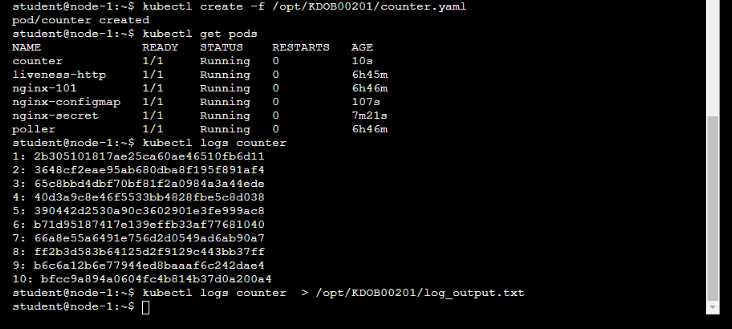

You sometimes need to observe a pod's logs, and write those logs to a file for further analysis.

Task

Please complete the following:

• Deploy the counter pod to the cluster using the provided YAML spec file at /opt/KDOB00201/counter.yaml

• Retrieve all currently available application logs from the running pod and store them in the file /opt/KDOB00201/log_Output.txt, which has already been created

Answer:

See the solution below.

Explanation:

Solution:

To deploy the counter pod to the cluster using the provided YAML spec file, you can use the kubectl

apply command. The apply command creates and updates resources in a cluster.

kubectl apply -f /opt/KDOB00201/counter.yaml

This command will create the pod in the cluster. You can use the kubectl get pods command to check

the status of the pod and ensure that it is running.

kubectl get pods

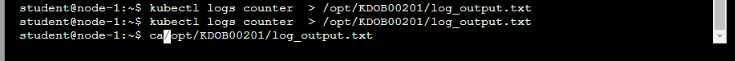

To retrieve all currently available application logs from the running pod and store them in the file /opt/KDOB00201/log_Output.txt, you can use the kubectl logs command. The logs command retrieves logs from a container in a pod.

kubectl logs <pod-name> > /opt/KDOB00201/log_Output.txt

Replace <pod-name> with the name of the pod. This command writes the logs available at that moment to the file and then exits.

You can also use the -f option to keep streaming new log lines into the file; run it in the background so the shell stays usable:

kubectl logs -f <pod-name> > /opt/KDOB00201/log_Output.txt &

Please note that the above command retrieves all logs currently available from the container. To limit the output to recent entries, add the --since flag with a duration, for example --since=24h for logs generated in the last 24 hours.

Also note that if the pod has multiple containers, you need to specify the container name using the -c option.

kubectl logs <pod-name> -c <container-name> > /opt/KDOB00201/log_Output.txt

The above command will redirect the logs of the specified container to the file.

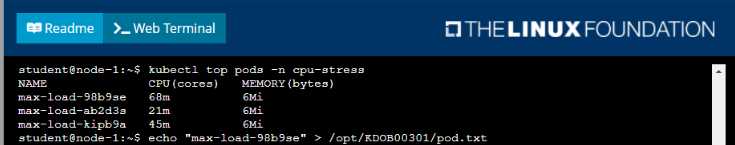

Question 8

Refer to Exhibit.

Context

It is always useful to look at the resources your applications are consuming in a cluster.

Task

• From the pods running in namespace cpu-stress, write the name only of the pod that is consuming the most CPU to file /opt/KDOBG030l/pod.txt, which has already been created.

Answer:

See the solution below.

Explanation:

Solution:

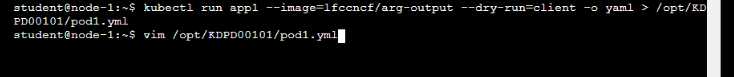

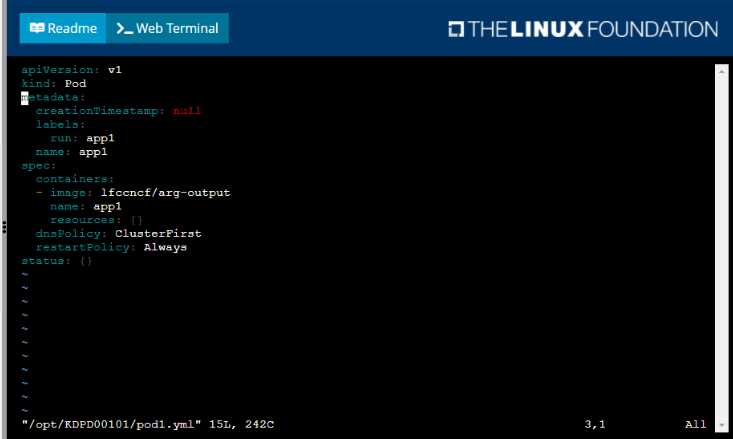

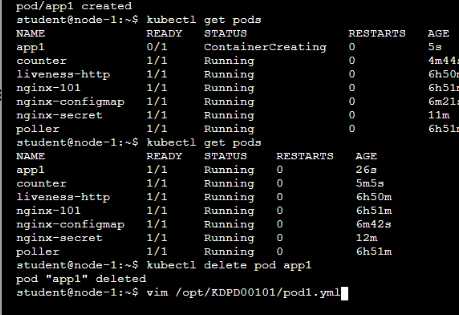

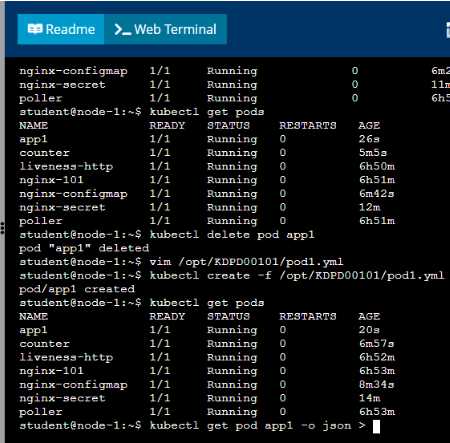

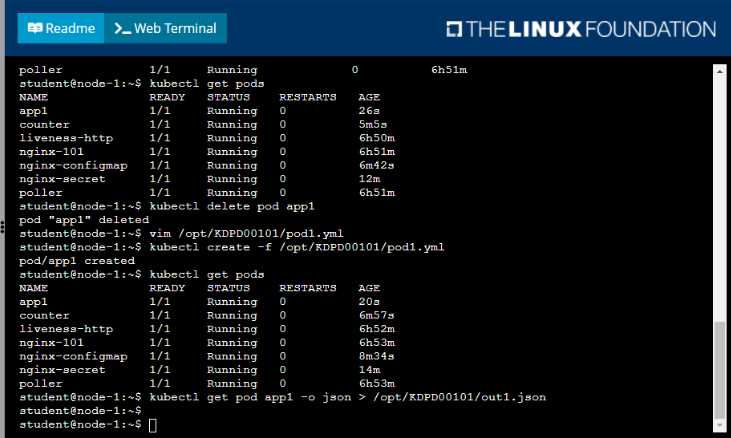
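A minimal sketch of the lookup, assuming the cluster has metrics-server installed so that kubectl top returns data. The helper name first_pod_name and the default output path are hypothetical; set OUT_FILE to the path given in the task.

```shell
# Print the first column (pod name) of the first line read from stdin.
first_pod_name() {
  awk 'NR == 1 { print $1 }'
}

# Destination file: override OUT_FILE with the path from the task (default is a scratch file).
out_file="${OUT_FILE:-${TMPDIR:-/tmp}/pod.txt}"

# Query metrics only when kubectl is installed and a cluster is reachable.
if command -v kubectl >/dev/null 2>&1; then
  # --sort-by=cpu lists the heaviest CPU consumer first; --no-headers drops the title row.
  kubectl top pods -n cpu-stress --sort-by=cpu --no-headers | first_pod_name > "$out_file"
fi
```

Because the output is already sorted by CPU, the first line belongs to the pod consuming the most CPU, and only its name is written to the file.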

Question 9

Context

Anytime a team needs to run a container on Kubernetes they will need to define a pod within which

to run the container.

Task

Please complete the following:

• Create a YAML formatted pod manifest /opt/KDPD00101/pod1.yml to create a pod named app1 that runs a container named app1cont using image lfccncf/arg-output with these command line arguments: -lines 56 -F

• Create the pod with the kubectl command using the YAML file created in the previous step

• When the pod is running display summary data about the pod in JSON format using the kubectl command and redirect the output to a file named /opt/KDPD00101/out1.json

• All of the files you need to work with have been created, empty, for your convenience

Answer:

See the solution below.

Explanation:

Solution:

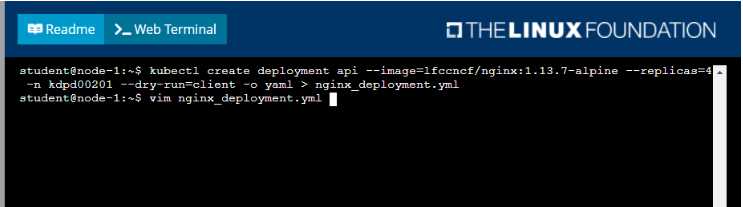

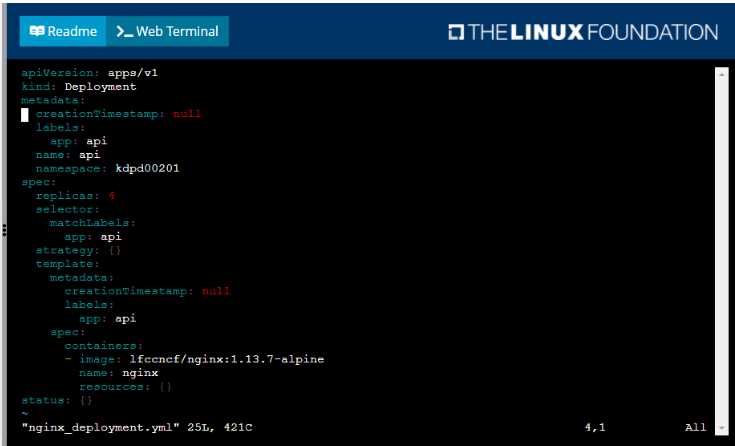

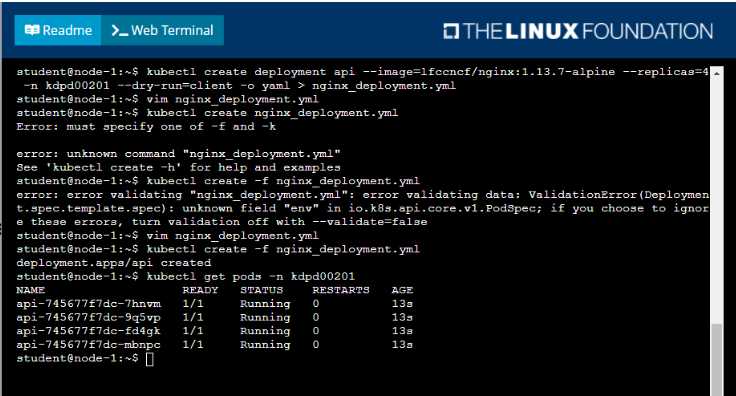
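The steps above could be sketched as follows, assuming the image repository is lfccncf/arg-output. The default file locations are scratch paths chosen for illustration; set MANIFEST and JSON_OUT to the manifest and output paths given in the task.

```shell
# Override these with the paths from the task (defaults are hypothetical scratch files).
manifest="${MANIFEST:-${TMPDIR:-/tmp}/app1-pod.yml}"
json_out="${JSON_OUT:-${TMPDIR:-/tmp}/app1-out.json}"

cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: app1
spec:
  containers:
  - name: app1cont
    image: lfccncf/arg-output
    args: ["-lines", "56", "-F"]   # args are passed to the image's entrypoint
EOF

# Create the pod and capture its JSON description only when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f "$manifest"
  kubectl get pod app1 -o json > "$json_out"
fi
```

The command line arguments go under args rather than command, so the image's own entrypoint is kept and only its arguments are replaced.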

Question 10

Refer to Exhibit.

Task

Create a new deployment for running nginx with the following parameters:

• Run the deployment in the kdpd00201 namespace. The namespace has already been created

• Name the deployment frontend and configure with 4 replicas

• Configure the pod with a container image of lfccncf/nginx:1.13.7

• Set an environment variable of NGINX_PORT=8080 and also expose that port for the container above

Answer:

See the solution below.

Explanation:

Solution:

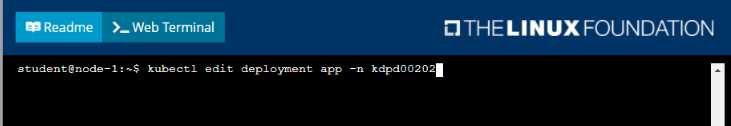

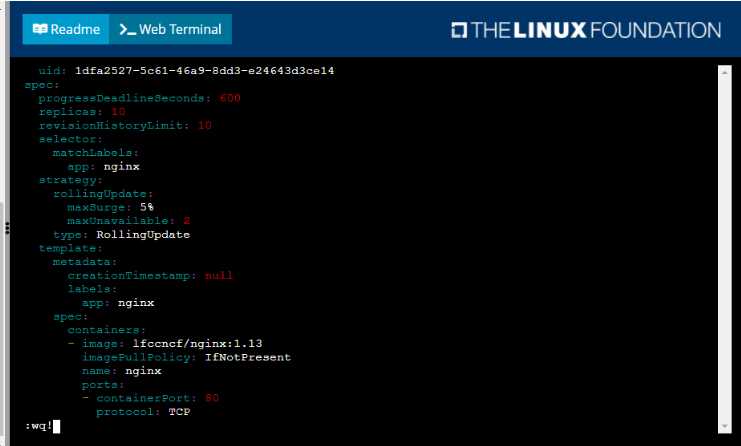

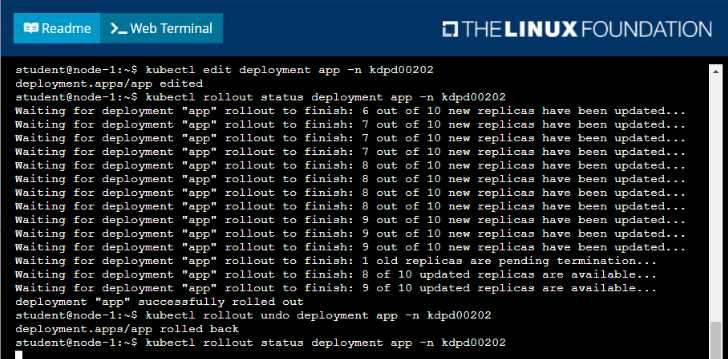

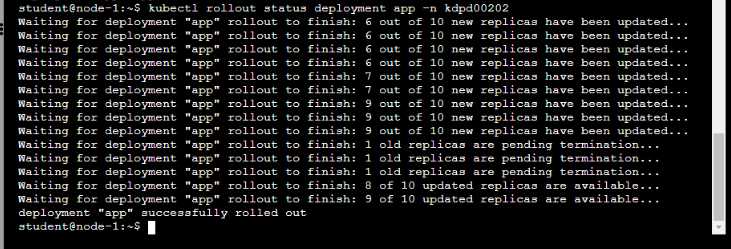
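A minimal sketch of a deployment manifest for this task. It assumes the environment variable is named NGINX_PORT; the app: frontend label, the container name and the manifest path are illustrative choices.

```shell
# Scratch location for the manifest (hypothetical path).
manifest="${TMPDIR:-/tmp}/frontend-deploy.yaml"

cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
  namespace: kdpd00201
spec:
  replicas: 4
  selector:
    matchLabels:
      app: frontend          # label is an assumption; it must match the template labels
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
      - name: nginx          # container name is an assumption
        image: lfccncf/nginx:1.13.7
        env:
        - name: NGINX_PORT
          value: "8080"      # env values must be strings, hence the quotes
        ports:
        - containerPort: 8080
EOF

# Apply only when kubectl is installed and a cluster is reachable.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f "$manifest"
  kubectl get deployment frontend -n kdpd00201
fi
```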

Question 11

Refer to Exhibit.

Context

As a Kubernetes application developer you will often find yourself needing to update a running

application.

Task

Please complete the following:

• Update the app deployment in the kdpd00202 namespace with a maxSurge of 5% and a maxUnavailable of 2%

• Perform a rolling update of the web1 deployment, changing the lfccncf/nginx image version to 1.13

• Roll back the app deployment to the previous version

Answer:

See the solution below.

Explanation:

Solution:

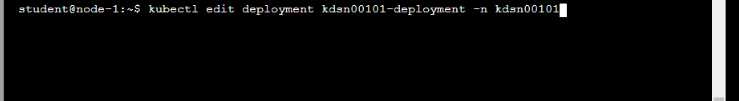
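The three steps of this task could be sketched as shell functions around kubectl. The task text names both an app and a web1 deployment, so the deploy variable below is an assumption to adjust to whichever deployment exists; the function names are illustrative.

```shell
ns=kdpd00202
deploy=app   # assumption: change to web1 if that is the deployment present in the namespace

# Set the rolling-update limits on the deployment's strategy.
set_rollout_limits() {
  kubectl patch deployment "$deploy" -n "$ns" --type=merge \
    -p '{"spec":{"strategy":{"type":"RollingUpdate","rollingUpdate":{"maxSurge":"5%","maxUnavailable":"2%"}}}}'
}

# Switch every container in the pod template to the new image tag, then wait for the rollout.
update_image() {
  kubectl set image "deployment/$deploy" -n "$ns" '*=lfccncf/nginx:1.13'
  kubectl rollout status "deployment/$deploy" -n "$ns"
}

# Return the deployment to its previous revision.
roll_back() {
  kubectl rollout undo "deployment/$deploy" -n "$ns"
}
```

Run set_rollout_limits, update_image and roll_back in that order. The '*' wildcard updates all containers in the pod template; replace it with a container name if the pod has more than one container and only one should change.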

Question 12

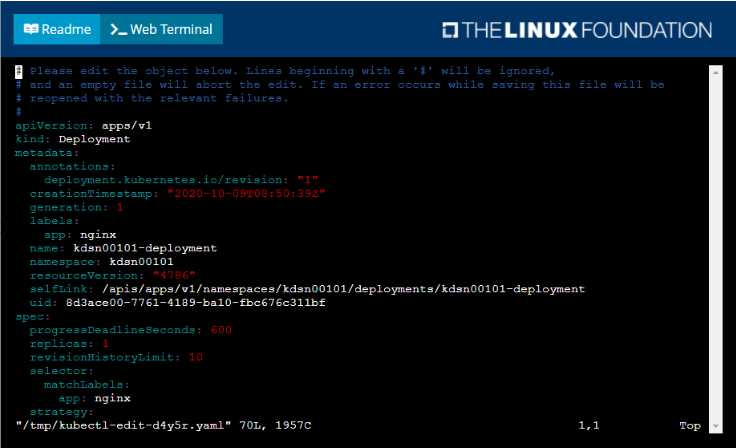

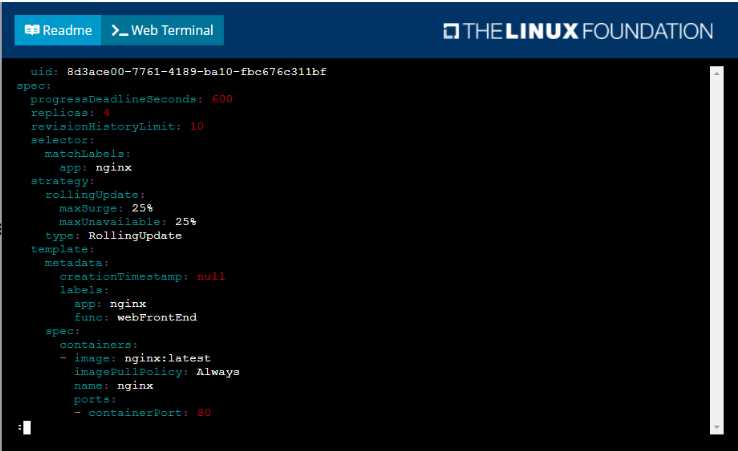

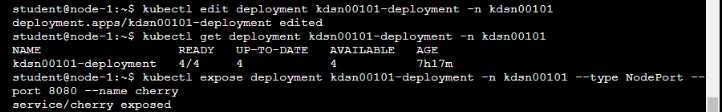

Refer to Exhibit.

Context

You have been tasked with scaling an existing deployment for availability, and creating a service to

expose the deployment within your infrastructure.

Task

Start with the deployment named kdsn00101-deployment which has already been deployed to the namespace kdsn00101. Edit it to:

• Add the func=webFrontEnd key/value label to the pod template metadata to identify the pod for

the service definition

• Have 4 replicas

Next, create and deploy in namespace kdsn00101 a service that accomplishes the following:

• Exposes the service on TCP port 8080

• Is mapped to the pods defined by the specification of kdsn00101-deployment

• Is of type NodePort

• Has a name of cherry

Answer:

See the solution below.

Explanation:

Solution:
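A minimal sketch of both parts of the task: a patch that adds the label and sets the replica count, and a service manifest that selects pods by that label. The manifest path is a scratch location chosen for illustration.

```shell
ns=kdsn00101
# Scratch location for the service manifest (hypothetical path).
svc_manifest="${TMPDIR:-/tmp}/cherry-svc.yaml"

cat > "$svc_manifest" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: cherry
  namespace: kdsn00101
spec:
  type: NodePort
  selector:
    func: webFrontEnd
  ports:
  - protocol: TCP
    port: 8080     # targetPort defaults to this value; set it if the container listens elsewhere
EOF

# Patch the deployment and create the service only when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  # A merge patch adds the label to the pod template and sets the replica count in one step.
  kubectl patch deployment kdsn00101-deployment -n "$ns" --type=merge \
    -p '{"spec":{"replicas":4,"template":{"metadata":{"labels":{"func":"webFrontEnd"}}}}}'
  kubectl apply -f "$svc_manifest"
  kubectl get svc cherry -n "$ns"
fi
```

The service selects on func=webFrontEnd, so it only finds pods after the deployment's pod template carries that label.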

Question 13

Refer to Exhibit.

Set Configuration Context:

[student@node-1] $ kubectl config use-context k8s

Context

A container within the poller pod is hard-coded to connect to the nginxsvc service on port 90. As this port changes to 5050, an additional container needs to be added to the poller pod which adapts the container to connect to this new port. This should be realized as an ambassador container within the pod.

Task

• Update the nginxsvc service to serve on port 5050.

• Add an HAproxy container named haproxy bound to port 90 to the poller pod and deploy the

enhanced pod. Use the image haproxy and inject the configuration located at

/opt/KDMC00101/haproxy.cfg, with a ConfigMap named haproxy-config, mounted into the container

so that haproxy.cfg is available at /usr/local/etc/haproxy/haproxy.cfg. Ensure that you update the

args of the poller container to connect to localhost instead of nginxsvc so that the connection is

correctly proxied to the new service endpoint. You must not modify the port of the endpoint in

poller's args . The spec file used to create the initial poller pod is available in

/opt/KDMC00101/poller.yaml

Answer:

See the solution below.

Explanation:

Solution:

To update the nginxsvc service to serve on port 5050, you will need to edit the service's definition. You can use the kubectl edit command to edit the service in place.

kubectl edit svc nginxsvc

This will open the service definition YAML in your default editor. Change the port field of the service (spec.ports[].port) to 5050 and save the file.

To add an HAproxy container named haproxy bound to port 90 to the poller pod, you will need to

edit the pod's definition yaml file located at /opt/KDMC00101/poller.yaml.

You can add a new container to the pod's definition yaml file, with the following configuration:

    containers:
    - name: haproxy
      image: haproxy
      ports:
      - containerPort: 90
      volumeMounts:
      - name: haproxy-config
        mountPath: /usr/local/etc/haproxy/haproxy.cfg
        subPath: haproxy.cfg
      args: ["haproxy", "-f", "/usr/local/etc/haproxy/haproxy.cfg"]
    # Pod-level volume that backs the volumeMount above
    volumes:
    - name: haproxy-config
      configMap:
        name: haproxy-config

This will add the HAproxy container to the pod and configure it to listen on port 90. It will also mount

the ConfigMap haproxy-config to the container, so that haproxy.cfg is available at

/usr/local/etc/haproxy/haproxy.cfg.

To inject the configuration located at /opt/KDMC00101/haproxy.cfg to the container, you will need to

create a ConfigMap using the following command:

kubectl create configmap haproxy-config --from-file=/opt/KDMC00101/haproxy.cfg

You will also need to update the args of the poller container so that it connects to localhost instead of

nginxsvc. You can do this by editing the pod's definition yaml file and changing the args field to args:

["poller","--host=localhost"].

Once you have made these changes, you can deploy the updated pod to the cluster by running the

following command:

kubectl apply -f /opt/KDMC00101/poller.yaml

This will deploy the enhanced pod with the HAproxy container to the cluster. The HAproxy container

will listen on port 90 and proxy connections to the nginxsvc service on port 5050. The poller

container will connect to localhost instead of nginxsvc, so that the connection is correctly proxied to

the new service endpoint.

Please note that, this is a basic example and you may need to tweak the haproxy.cfg file and the args

based on your use case.

Question 14

Refer to Exhibit.

Context

Developers occasionally need to submit pods that run periodically.

Task

Follow the steps below to create a pod that will start at a predetermined time and which runs to completion only once each time it is started:

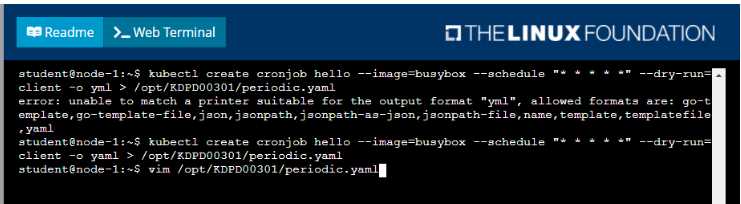

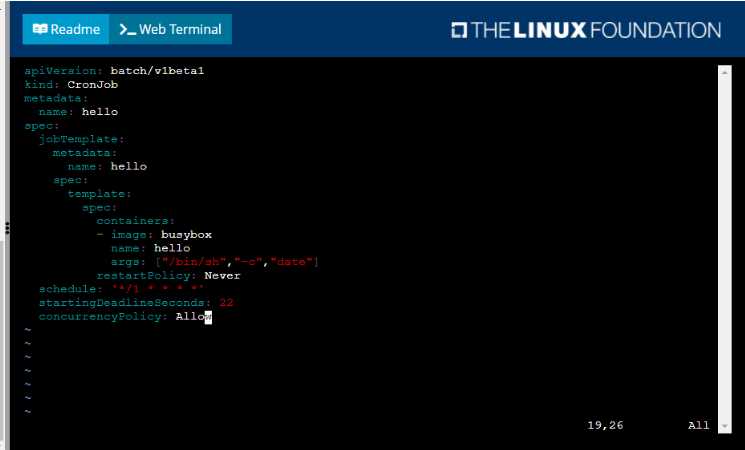

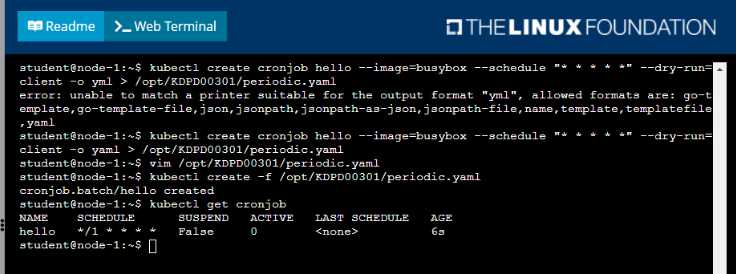

• Create a YAML formatted Kubernetes manifest /opt/KDPD00301/periodic.yaml that runs the following shell command: date in a single busybox container. The command should run every minute and must complete within 22 seconds or be terminated by Kubernetes. The CronJob name and container name should both be hello

• Create the resource in the above manifest and verify that the job executes successfully at least

once

Answer:

See the solution below.

Explanation:

Solution:
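A minimal sketch of a CronJob manifest for this task, assuming a cluster recent enough to serve CronJob under batch/v1 (older clusters use batch/v1beta1). The default manifest path is a scratch file; set MANIFEST to the path given in the task.

```shell
# Override MANIFEST with the path from the task (default is a hypothetical scratch file).
manifest="${MANIFEST:-${TMPDIR:-/tmp}/periodic.yaml}"

cat > "$manifest" <<'EOF'
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello
spec:
  schedule: "* * * * *"            # every minute
  jobTemplate:
    spec:
      activeDeadlineSeconds: 22    # Kubernetes terminates the job if it runs longer than this
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            command: ["/bin/sh", "-c", "date"]
          restartPolicy: OnFailure
EOF

# Create the CronJob and list its jobs only when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f "$manifest"
  kubectl get cronjob hello
  kubectl get jobs
fi
```

The first job appears within a minute of creating the CronJob; rerun kubectl get jobs until one shows a completion to verify that the job executed successfully.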

Question 15

Refer to Exhibit.

Task

A deployment is failing on the cluster due to an incorrect image being specified. Locate the deployment, and fix the problem.

Answer:

See the solution below.

Explanation:

kubectl create deploy hello-deploy --image=nginx --dry-run=client -o yaml > hello-deploy.yaml

Update deployment image to nginx:1.17.4: kubectl set image deploy/hello-deploy nginx=nginx:1.17.4