Databricks Certified Data Analyst Associate Practice Test

Databricks Certified Data Analyst Associate Exam

Question 1

Which of the following layers of the medallion architecture is most commonly used by data analysts?

A. None of these layers are used by data analysts

B. Gold

C. All of these layers are used equally by data analysts

D. Silver

E. Bronze

Answer:

B

The gold layer of the medallion architecture contains highly refined, aggregated data that powers analytics, machine learning, and production applications. Data analysts most often work in the gold layer because its tables have already been cleaned, joined, and aggregated into business-level views that can be queried directly for reporting. By contrast, the bronze layer holds raw ingested data, and the silver layer holds cleaned and conformed data that usually still needs business-level aggregation. The gold layer represents the final stage of data quality and optimization in the lakehouse.

Reference: What is the medallion lakehouse architecture?
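To make the difference between layers concrete, the following Databricks SQL sketch shows how a gold table might be derived from a silver table and then queried by an analyst. The table names (`silver.orders`, `gold.daily_revenue`) and their columns are hypothetical; in practice the gold table is usually built by a data engineering pipeline rather than by the analyst.

```sql
-- Hypothetical silver table: silver.orders(order_id, order_date, region, amount)
-- A gold table holds business-level aggregates built from cleaned silver data.
CREATE OR REPLACE TABLE gold.daily_revenue AS
SELECT
  order_date,
  region,
  SUM(amount) AS total_revenue,
  COUNT(*)    AS order_count
FROM silver.orders
GROUP BY order_date, region;

-- Analysts query the gold table directly, with no further cleaning or joining.
SELECT region, SUM(total_revenue) AS revenue
FROM gold.daily_revenue
WHERE order_date >= DATE '2024-01-01'
GROUP BY region
ORDER BY revenue DESC;
```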

Question 2

A data analyst has recently joined a new team that uses Databricks SQL, but the analyst has never used Databricks before. The analyst wants to know where in Databricks SQL they can write and execute SQL queries.

On which of the following pages can the analyst write and execute SQL queries?

A. Data page

B. Dashboards page

C. Queries page

D. Alerts page

E. SQL Editor page

Answer:

E

The SQL Editor page is where the analyst can write and execute SQL queries in Databricks SQL. It has a query pane where the analyst can type or paste SQL statements, and a results pane where they can view the query results as a table or a chart. From the SQL Editor page, the analyst can also browse data objects, work on multiple queries, execute a single query or multiple queries, cancel a running query, save a query, download query results, and more.

Reference: Create a query in SQL editor

Question 3

Which of the following describes how Databricks SQL should be used in relation to other business intelligence (BI) tools like Tableau, Power BI, and Looker?

A. As an exact substitute with the same level of functionality

B. As a substitute with less functionality

C. As a complete replacement with additional functionality

D. As a complementary tool for professional-grade presentations

E. As a complementary tool for quick in-platform BI work

Answer:

E

Databricks SQL is not meant to replace or substitute other BI tools. Instead, it complements them by providing a fast, easy way to query, explore, and visualize data on the lakehouse with its built-in SQL editor, visualizations, and dashboards, which suits quick analysis done without leaving the platform. Polished, professional-grade presentations are usually still built in a dedicated BI tool. Databricks SQL also integrates with popular BI tools such as Tableau, Power BI, and Looker, so analysts can use their preferred tools to access data through Databricks clusters and SQL warehouses. It offers low-code and no-code experiences, optimized connectors, and serverless compute to improve the productivity and performance of BI workloads on the lakehouse.

Reference: Databricks SQL, Connecting Applications and BI Tools to Databricks SQL, Databricks integrations overview, Databricks SQL: Delivering a Production SQL Development Experience on the Lakehouse

Question 4

Which of the following approaches can be used to connect Databricks to Fivetran for data ingestion?

A. Use Workflows to establish a SQL warehouse (formerly known as a SQL endpoint) for Fivetran to interact with

B. Use Delta Live Tables to establish a cluster for Fivetran to interact with

C. Use Partner Connect's automated workflow to establish a cluster for Fivetran to interact with

D. Use Partner Connect's automated workflow to establish a SQL warehouse (formerly known as a SQL endpoint) for Fivetran to interact with

E. Use Workflows to establish a cluster for Fivetran to interact with

Answer:

C

Partner Connect lets you connect your Databricks workspace to Fivetran and other ingestion partners through an automated workflow. During setup, you select a SQL warehouse or a cluster as the compute that Fivetran uses to replicate data, and the connection details are sent to Fivetran. You can then choose from over 200 data sources that Fivetran supports and start ingesting data into Delta Lake. Workflows and Delta Live Tables are used to orchestrate jobs and build pipelines inside Databricks; they are not how a partner connection is set up.

Reference: Connect to Fivetran using Partner Connect, Use Databricks with Fivetran

Question 5

Data professionals with varying titles use the Databricks SQL service as the primary touchpoint with the Databricks Lakehouse Platform. However, some users will use other services like Databricks Machine Learning or Databricks Data Science and Engineering.

Which of the following roles uses Databricks SQL as a secondary service while primarily using one of the other services?

A. Business analyst

B. SQL analyst

C. Data engineer

D. Business intelligence analyst

E. Data analyst

Answer:

C

Data engineers are primarily responsible for building, managing, and optimizing data pipelines and architectures. They use the Databricks Data Science and Engineering service for tasks such as data ingestion, transformation, quality, and governance. Data engineers may use Databricks SQL as a secondary service to query, analyze, and visualize data in the lakehouse, but this is not their main focus. The other roles listed are all analyst roles, for which Databricks SQL is the primary touchpoint.

Reference: Databricks SQL overview, Databricks Data Science and Engineering overview, Data engineering with Databricks

Question 6

A data analyst has set up a SQL query to run every four hours on a SQL endpoint, but the SQL endpoint is taking too long to start up with each run.

Which of the following changes can the data analyst make to reduce the start-up time for the endpoint while managing costs?

A. Reduce the SQL endpoint cluster size

B. Increase the SQL endpoint cluster size

C. Turn off the Auto stop feature

D. Increase the minimum scaling value

E. Use a Serverless SQL endpoint

Answer:

E

A serverless SQL endpoint (now called a serverless SQL warehouse) runs on compute that Databricks manages and keeps available, instead of on cluster instances that must be provisioned in the customer's cloud account each time the endpoint starts. As a result, it typically starts in seconds rather than minutes. Because Auto stop can stay enabled, the endpoint still shuts down between the four-hourly runs, so the analyst pays only while queries are running. The other options do not meet both goals: changing the cluster size or the minimum scaling value affects query capacity and cost rather than start-up time, and turning off Auto stop would avoid start-up delays only by keeping the endpoint running, and billing, around the clock. Serverless availability depends on the cloud provider, region, and workspace configuration, so the analyst should confirm that it is enabled for their workspace.

Reference: Databricks SQL endpoints, Serverless SQL endpoints, SQL endpoint clusters

Question 7

A data engineering team has created a Structured Streaming pipeline that processes data in micro-batches and populates gold-level tables. The micro-batches are triggered every minute.

A data analyst has created a dashboard based on this gold-level data. The project stakeholders want to see the results in the dashboard updated within one minute or less of new data becoming available within the gold-level tables.

Which of the following cautions should the data analyst share prior to setting up the dashboard to complete this task?

A. The required compute resources could be costly

B. The gold-level tables are not appropriately clean for business reporting

C. The streaming data is not an appropriate data source for a dashboard

D. The streaming cluster is not fault tolerant

E. The dashboard cannot be refreshed that quickly

Answer:

A

Meeting a one-minute refresh target means the dashboard's queries must run roughly every minute. At that frequency, the SQL warehouse that serves the dashboard typically never sits idle long enough to auto stop, so it effectively runs, and is billed, around the clock, on top of the compute already used by the streaming pipeline. This can become costly, especially when data volume and velocity are high. The data analyst should therefore share this caution with the project stakeholders before setting up the dashboard and evaluate the trade-off between the desired refresh rate and the available budget. The other options are not valid cautions:

B. The gold-level tables are assumed to be appropriately clean for business reporting, as they are the final output of the data engineering pipeline. If data quality is not satisfactory, the issue should be addressed at the source or silver level, not at the gold level.

C. Streaming data is an appropriate data source for a dashboard, as it can provide near real-time insights and analytics for business users. Structured Streaming supports various sources and sinks, including Delta Lake, which enables both batch and streaming queries on the same data.

D. The streaming pipeline is fault tolerant: Structured Streaming provides end-to-end exactly-once fault-tolerance guarantees through checkpointing and write-ahead logs. If a query fails, it can be restarted from the last checkpoint and resume processing.

E. Refreshing the dashboard within one minute of new data arriving is technically achievable; the limiting factor is the cost of keeping compute running, not a refresh limit. Such a high refresh rate may also be unnecessary for the business use case, as it causes constant changes in the dashboard and consumes more resources.

Reference: Streaming on Databricks, Monitoring Structured Streaming queries on Databricks, A look at the new Structured Streaming UI in Apache Spark 3.0, Run your first Structured Streaming workload

Question 8

Which of the following approaches can be used to ingest data directly from cloud-based object storage?

A. Create an external table while specifying the DBFS storage path to FROM

B. Create an external table while specifying the DBFS storage path to PATH

C. It is not possible to directly ingest data from cloud-based object storage

D. Create an external table while specifying the object storage path to FROM

E. Create an external table while specifying the object storage path to LOCATION

Answer:

E

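External tables are tables registered in the Databricks metastore whose data stays in a cloud object storage location that you specify. Databricks does not manage the lifecycle of an external table's data files; the table only provides a schema and a table name for querying them. To create an external table, use the CREATE EXTERNAL TABLE statement and pass the object storage path to the LOCATION clause. For example, to create an external table over Parquet files in an S3 bucket (the table name, columns, and bucket path are placeholders):

```sql
-- Registers a table over existing Parquet files; the files stay where they are.
-- USING PARQUET is the Databricks data source syntax (Hive-style STORED AS PARQUET
-- is also accepted with the Hive metastore).
CREATE EXTERNAL TABLE ext_table (
  col1 INT,
  col2 STRING
)
USING PARQUET
-- LOCATION points to the directory that holds the data files (placeholder path).
LOCATION 's3://bucket/path/';
```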


Reference: External tables

Question 9

A data analyst wants to create a dashboard with three main sections: Development, Testing, and Production. They want all three sections on the same dashboard, but they want to clearly designate the sections using text on the dashboard.

Which of the following tools can the data analyst use to designate the Development, Testing, and Production sections using text?

A. Separate endpoints for each section

B. Separate queries for each section

C. Markdown-based text boxes

D. Direct text written into the dashboard in editing mode

E. Separate color palettes for each section

Answer:

C

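Markdown-based text boxes are useful as labels on a dashboard. In a Databricks SQL dashboard, the analyst adds a text box in edit mode and writes its content in Markdown, for example a "Development" heading placed above the related visualizations; text cannot simply be typed onto the dashboard canvas, which is why option D does not work. In notebook dashboards, the same effect is achieved by writing Markdown in a %md cell and then selecting the dashboard icon in the cell actions menu. The text can be formatted with Markdown syntax and can include headings, lists, links, images, and more, and with the Float layout option the text boxes can be resized and moved around the dashboard.

Reference: Dashboards in notebooks, How to add text to a dashboard in Databricks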

Question 10

A data analyst needs to use the Databricks Lakehouse Platform to quickly create SQL queries and data visualizations. It is a requirement that the compute resources in the platform can be made serverless, and it is expected that data visualizations can be placed within a dashboard.

Which of the following Databricks Lakehouse Platform services/capabilities meets all of these requirements?

A. Delta Lake

B. Databricks Notebooks

C. Tableau

D. Databricks Machine Learning

E. Databricks SQL

Answer:

E

Databricks SQL is the data warehousing service of the Lakehouse Platform. It runs SQL and BI workloads on SQL warehouses, which can be configured as serverless, and lets you use your tools of choice at a fraction of the cost of traditional cloud data warehouses. In Databricks SQL, you create SQL queries and data visualizations in the SQL editor, and you can place those visualizations in a dashboard and share it with other users in your organization. Databricks SQL queries data stored in Delta Lake, which provides reliability, performance, and governance for your data lake.

Reference: Databricks SQL, Query data using SQL Analytics, Visualizations in Databricks notebooks, Delta Lake

Question 11

A data analyst is attempting to drop a table my_table. The analyst wants to delete all table metadata and data.

They run the following command:

DROP TABLE IF EXISTS my_table;

While the object no longer appears when they run SHOW TABLES, the data files still exist.

Which of the following describes why the data files still exist and the metadata files were deleted?

A. The table's data was larger than 10 GB

B. The table did not have a location

C. The table was external

D. The table's data was smaller than 10 GB

E. The table was managed

Answer:

C

An external table is a table that is registered in the metastore but whose data files live at a storage location specified by the user with the LOCATION clause, such as a path in S3, ADLS, or GCS. When an external table is dropped, only its metadata is deleted from the metastore; the data files are not affected. This is different from a managed table, whose data is stored in the metastore's managed storage location and whose data files are deleted when the table is dropped. To remove the data files of an external table, the analyst must delete them from the storage location directly, for example with cloud storage tools or dbutils.fs.rm. The size of the table (options A and D) plays no role.

Reference: DROP TABLE, Drop Delta table features, Best practices for dropping a managed Delta Lake table
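The difference between external and managed tables can be reproduced with a short Databricks SQL sketch. It assumes Delta as the default table format and a workspace that is allowed to write to the storage path; the table name `demo_ext` and the path `s3://my-bucket/demo_ext/` are hypothetical placeholders.

```sql
-- Specifying LOCATION makes this an external table (Delta is the default format).
CREATE TABLE demo_ext (id INT, name STRING)
LOCATION 's3://my-bucket/demo_ext/';

INSERT INTO demo_ext VALUES (1, 'alpha'), (2, 'beta');

-- The Type row of this output should read EXTERNAL.
DESCRIBE EXTENDED demo_ext;

-- Removes only the metastore entry; the files under the LOCATION path remain.
DROP TABLE IF EXISTS demo_ext;

-- The Delta files can still be read directly by path.
SELECT * FROM delta.`s3://my-bucket/demo_ext/`;
```

Running the same sequence without the LOCATION clause creates a managed table instead, and DROP TABLE then removes its data files as well.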

Question 12

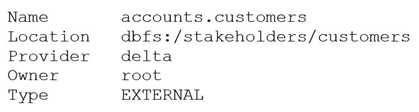

After running DESCRIBE EXTENDED accounts.customers;, the following was returned:

Now, a data analyst runs the following command:

DROP TABLE accounts.customers;

Which of the following describes the result of running this command?

A. Running SELECT * FROM delta.`dbfs:/stakeholders/customers` results in an error.

B. Running SELECT * FROM accounts.customers will return all rows in the table.

C. All files with the .customers extension are deleted.

D. The accounts.customers table is removed from the metastore, and the underlying data files are deleted.

E. The accounts.customers table is removed from the metastore, but the underlying data files are untouched.

Answer:

E

The accounts.customers table is an EXTERNAL table, as the DESCRIBE EXTENDED output indicates. Its data is stored at a user-specified location outside the default warehouse directory, so Databricks does not manage its data files. Therefore, running DROP TABLE on it only removes the table's metadata from the metastore and does not delete the data files. You can still access the data by its path (for example, SELECT * FROM delta.`dbfs:/stakeholders/customers`), which rules out option A, or create another table pointing to the same location. However, querying the table by name (accounts.customers) returns an error because the table no longer exists in the metastore, which rules out option B.

Reference: DROP TABLE | Databricks on AWS, Best practices for dropping a managed Delta Lake table - Databricks
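Because the files survive the drop, the table can be registered again over the same location. A minimal Databricks SQL sketch, assuming a workspace that uses the Hive metastore and that the data at dbfs:/stakeholders/customers is in Delta format (as the delta. path syntax in option A suggests):

```sql
-- After DROP TABLE, the files at the external location are untouched.
-- Re-register them under the same name; Delta reads the schema from its transaction log,
-- so no column list is needed.
CREATE TABLE IF NOT EXISTS accounts.customers
LOCATION 'dbfs:/stakeholders/customers';

-- The table is queryable by name again.
SELECT COUNT(*) FROM accounts.customers;
```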

Question 13

Which of the following should data analysts consider when working with personally identifiable information (PII) data?

A. Organization-specific best practices for PII data

B. Legal requirements for the area in which the data was collected

C. None of these considerations

D. Legal requirements for the area in which the analysis is being performed

E. All of these considerations

Answer:

E

Data analysts should consider all of these factors when working with PII, as they affect data security, privacy, compliance, and quality. PII is any information that can be used to identify a specific individual, such as a name, address, phone number, email address, or social security number. PII may be subject to different legal and ethical obligations depending on where the data was collected and where it is analyzed. For example, some countries or regions have stricter data protection laws than others, such as the General Data Protection Regulation (GDPR) in the European Union. Data analysts should also follow their organization's best practices for PII, such as encryption, anonymization, masking, access control, and auditing. These practices help prevent data breaches, unauthorized access, misuse, and loss of PII.

Reference: How to Use Databricks to Encrypt and Protect PII Data, Automating Sensitive Data (PII/PHI) Detection
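Masking and access control can be combined in Databricks SQL by exposing PII only through a view. The sketch below is one possible approach, not a compliance recipe: the table crm.customers, its columns, and the analysts group are hypothetical, and hashing an email address is pseudonymization rather than full anonymization, so whether it is sufficient depends on the legal requirements that apply.

```sql
-- Hypothetical source table: crm.customers(customer_id, email, phone, country)
CREATE OR REPLACE VIEW crm.customers_masked AS
SELECT
  customer_id,
  -- One-way SHA-256 hash: rows can still be matched on email without exposing it.
  sha2(lower(email), 256) AS email_hash,
  -- Keep only the last four digits of the phone number.
  concat('***-***-', right(phone, 4)) AS phone_masked,
  country
FROM crm.customers;

-- Give analysts access to the masked view rather than the raw table.
GRANT SELECT ON TABLE crm.customers_masked TO `analysts`;
```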


Question 14

Delta Lake stores table data as a series of data files, but it also stores a lot of other information.

Which of the following is stored alongside data files when using Delta Lake?

A. None of these

B. Table metadata, data summary visualizations, and owner account information

C. Table metadata

D. Data summary visualizations

E. Owner account information

Answer:

C

Delta Lake is a storage layer that enhances data lakes with features like ACID transactions, schema enforcement, and time travel. Table data is stored as Parquet files, and alongside them Delta Lake keeps a transaction log (in the _delta_log directory) that contains detailed table metadata, including:

- Table schema
- Partitioning information
- Data file paths
- Transactional operations such as inserts, updates, and deletes
- Commit history and version control

This metadata is critical for supporting Delta Lake's advanced capabilities, such as time travel and efficient query execution. Delta Lake does not store data summary visualizations or owner account information alongside the data files.

Reference: Delta Lake Table Features - Databricks Documentation
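The metadata kept in the transaction log can be inspected from Databricks SQL. A short sketch, where sales.orders is a hypothetical Delta table:

```sql
-- Commit history recorded in _delta_log: version, timestamp, operation, user, and more.
DESCRIBE HISTORY sales.orders;

-- Table-level metadata: format, location, partition columns, number of files, size in bytes.
DESCRIBE DETAIL sales.orders;

-- Column names and types from the schema stored in the log.
DESCRIBE TABLE sales.orders;
```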

Question 15

Which of the following is an advantage of using a Delta Lake-based data lakehouse over common data lake solutions?

A. ACID transactions

B. Flexible schemas

C. Data deletion

D. Scalable storage

E. Open-source formats

Answer:

A

A Delta Lake-based data lakehouse is a data platform architecture that combines the scalability and flexibility of a data lake with the reliability and performance of a data warehouse. A key advantage over common data lake solutions is support for ACID transactions, which ensure data integrity and consistency. Building on ACID transactions and its transaction log, Delta Lake supports safe concurrent reads and writes, schema enforcement and evolution, data versioning and rollback, and data quality checks. Traditional data lakes rely on file-based storage systems that do not support transactions, so they lack these guarantees. Scalable storage, flexible schemas, and open-source file formats, by contrast, are already characteristics of common data lakes, so they are not advantages of a lakehouse over them.

Reference: Delta Lake: Lakehouse, warehouse, advantages | Definition; Synapse – Data Lake vs. Delta Lake vs. Data Lakehouse; Data Lake vs. Delta Lake - A Detailed Comparison; Building a Data Lakehouse with Delta Lake Architecture: A Comprehensive Guide
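Versioning and rollback, two of the capabilities that ACID transactions make possible, can be sketched in Databricks SQL as follows. The table sales.orders, its columns, and version number 3 are hypothetical.

```sql
-- Each write is an atomic commit that produces a new table version;
-- concurrent readers see either the old or the new version, never a partial write.
UPDATE sales.orders SET status = 'shipped' WHERE order_id = 42;

-- Time travel: read the table as it was at an earlier version.
SELECT * FROM sales.orders VERSION AS OF 3;

-- Rollback: restore the table to that earlier version (recorded as a new commit).
RESTORE TABLE sales.orders TO VERSION AS OF 3;
```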