appian acd301 practice test

Appian Certified Lead Developer

Question 1

You are reviewing the Engine Performance Logs in Production for a single application that has been

live for six months. This application experiences concurrent user activity and has a fairly sustained

load during business hours. The client has reported performance issues with the application during

business hours.

During your investigation, you notice a high Work Queue - Java Work Queue Size value in the logs.

You also notice unattended process activities, including timer events and sending notification emails,

are taking far longer to execute than normal.

The client increased the number of CPU cores prior to the application going live.

What is the next recommendation?

- A. Add more engine replicas.

- B. Optimize slow-performing user interfaces.

- C. Add more application servers.

- D. Add execution and analytics shards

Answer:

A

Explanation:

As an Appian Lead Developer, analyzing Engine Performance Logs to address performance issues in a

Production application requires understanding Appian’s architecture and the specific metrics

described. The scenario indicates a high “Work Queue - Java Work Queue Size,” which reflects a

backlog of tasks in the Java Work Queue (managed by Appian engines), and delays in unattended

process activities (e.g., timer events, email notifications). These symptoms suggest the Appian

engines are overloaded, despite the client increasing CPU cores. Let’s evaluate each option:

A . Add more engine replicas:

This is the correct recommendation. In Appian, engine replicas (part of the Appian Engine cluster)

handle process execution, including unattended tasks like timers and notifications. A high Java Work

Queue Size indicates the engines are overwhelmed by concurrent activity during business hours,

causing delays. Adding more engine replicas distributes the workload, reducing queue size and

improving performance for both user-driven and unattended tasks. Appian’s documentation

recommends scaling engine replicas to handle sustained loads, especially in Production with high

concurrency. Since CPU cores were already increased (likely on application servers), the bottleneck is

likely the engine capacity, not the servers.

B . Optimize slow-performing user interfaces:

While optimizing user interfaces (e.g., SAIL forms, reports) can improve user experience, the

scenario highlights delays in unattended activities (timers, emails), not UI performance. The Java

Work Queue Size issue points to engine-level processing, not UI rendering, so this doesn’t address

the root cause. Appian’s performance tuning guidelines prioritize engine scaling for queue-related

issues, making this a secondary concern.

C . Add more application servers:

Application servers handle web traffic (e.g., SAIL interfaces, API calls), not process execution or

unattended tasks managed by engines. Increasing application servers would help with UI

concurrency but wouldn’t reduce the Java Work Queue Size or speed up timer/email processing, as

these are engine responsibilities. Since the client already increased CPU cores (likely on application

servers), this is redundant and unrelated to the issue.

D . Add execution and analytics shards:

Execution shards (for process data) and analytics shards (for reporting) are part of Appian’s data

fabric for scalability, but they don’t directly address engine workload or Java Work Queue Size.

Shards optimize data storage and query performance, not real-time process execution. The logs

indicate an engine bottleneck, not a data storage issue, so this isn’t relevant. Appian’s

documentation confirms shards are for long-term scaling, not immediate performance fixes.

Conclusion: Adding more engine replicas (A) is the next recommendation. It directly resolves the high

Java Work Queue Size and delays in unattended tasks, aligning with Appian’s architecture for

handling concurrent loads in Production. This requires collaboration with system administrators to

configure additional replicas in the Appian cluster.

Reference:

Appian Documentation: "Engine Performance Monitoring" (Java Work Queue and Scaling Replicas).

Appian Lead Developer Certification: Performance Optimization Module (Engine Scaling Strategies).

Appian Best Practices: "Managing Production Performance" (Work Queue Analysis).

Question 2

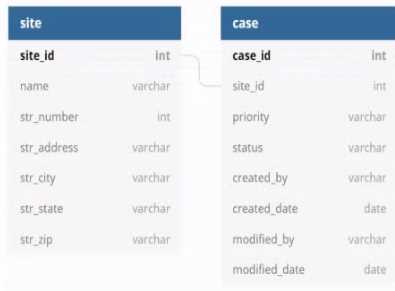

You are developing a case management application to manage support cases for a large set of sites.

One of the tabs in this application s site Is a record grid of cases, along with Information about the

site corresponding to that case. Users must be able to filter cases by priority level and status.

You decide to create a view as the source of your entity-backed record, which joins the separate

case/site tables (as depicted in the following Image).

Which three column should be indexed?

- A. site_id

- B. status

- C. name

- D. modified_date

- E. priority

- F. case_id

Answer:

ABE

Explanation:

Indexing columns can improve the performance of queries that use those columns in filters, joins, or

order by clauses. In this case, the columns that should be indexed are site_id, status, and priority,

because they are used for filtering or joining the tables. Site_id is used to join the case and site

tables, so indexing it will speed up the join operation. Status and priority are used to filter the cases

by the user’s input, so indexing them will reduce the number of rows that need to be scanned.

Name, modified_date, and case_id do not need to be indexed, because they are not used for filtering

or joining. Name and modified_date are only used for displaying information in the record grid, and

case_id is only used as a unique identifier for each record. Verified Reference:

Appian Records

Tutorial

,

Appian Best Practices

As an Appian Lead Developer, optimizing a database view for an entity-backed record grid requires

indexing columns frequently used in queries, particularly for filtering and joining. The scenario

involves a record grid displaying cases with site information, filtered by “priority level” and “status,”

and joined via the site_id foreign key. The image shows two tables (site and case) with a relationship

via site_id. Let’s evaluate each column based on Appian’s performance best practices and query

patterns:

A . site_id:

This is a primary key in the site table and a foreign key in the case table, used for joining the tables in

the view. Indexing site_id in the case table (and ensuring it’s indexed in site as a PK) optimizes JOIN

operations, reducing query execution time for the record grid. Appian’s documentation recommends

indexing foreign keys in large datasets to improve query performance, especially for entity-backed

records. This is critical for the join and must be included.

B . status:

Users filter cases by “status” (a varchar column in the case table). Indexing status speeds up filtering

queries (e.g., WHERE status = 'Open') in the record grid, particularly with large datasets. Appian

emphasizes indexing columns used in WHERE clauses or filters to enhance performance, making this

a key column for optimization. Since status is a common filter, it’s essential.

C . name:

This is a varchar column in the site table, likely used for display (e.g., site name in the grid). However,

the scenario doesn’t mention filtering or sorting by name, and it’s not part of the join or required

filters. Indexing name could improve searches if used, but it’s not a priority given the focus on

priority and status filters. Appian advises indexing only frequently queried or filtered columns to

avoid unnecessary overhead, so this isn’t necessary here.

D . modified_date:

This is a date column in the case table, tracking when cases were last updated. While useful for

sorting or historical queries, the scenario doesn’t specify filtering or sorting by modified_date in the

record grid. Indexing it could help if used, but it’s not critical for the current requirements. Appian’s

performance guidelines prioritize indexing columns in active filters, making this lower priority than

site_id, status, and priority.

E . priority:

Users filter cases by “priority level” (a varchar column in the case table). Indexing priority optimizes

filtering queries (e.g., WHERE priority = 'High') in the record grid, similar to status. Appian’s

documentation highlights indexing columns used in WHERE clauses for entity-backed records,

especially with large datasets. Since priority is a specified filter, it’s essential to include.

F . case_id:

This is the primary key in the case table, already indexed by default (as PKs are automatically indexed

in most databases). Indexing it again is redundant and unnecessary, as Appian’s Data Store

configuration relies on PKs for unique identification but doesn’t require additional indexing for

performance in this context. The focus is on join and filter columns, not the PK itself.

Conclusion: The three columns to index are A (site_id), B (status), and E (priority). These optimize the

JOIN (site_id) and filter performance (status, priority) for the record grid, aligning with Appian’s

recommendations for entity-backed records and large datasets. Indexing these columns ensures

efficient querying for user filters, critical for the application’s performance.

Reference:

Appian Documentation: "Performance Best Practices for Data Stores" (Indexing Strategies).

Appian Lead Developer Certification: Data Management Module (Optimizing Entity-Backed Records).

Appian Best Practices: "Working with Large Data Volumes" (Indexing for Query Performance).

Question 3

You are running an inspection as part of the first deployment process from TEST to PROD. You receive

a notice that one of your objects will not deploy because it is dependent on an object from an

application owned by a separate team.

What should be your next step?

- A. Create your own object with the same code base, replace the dependent object in the application, and deploy to PROD.

- B. Halt the production deployment and contact the other team for guidance on promoting the object to PROD.

- C. Check the dependencies of the necessary object. Deploy to PROD if there are few dependencies and it is low risk.

- D. Push a functionally viable package to PROD without the dependencies, and plan the rest of the deployment accordingly with the other team’s constraints.

Answer:

B

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, managing a deployment from TEST to PROD requires careful handling

of dependencies, especially when objects from another team’s application are involved. The scenario

describes a dependency issue during deployment, signaling a need for collaboration and governance.

Let’s evaluate each option:

A . Create your own object with the same code base, replace the dependent object in the

application, and deploy to PROD:

This approach involves duplicating the object, which introduces redundancy, maintenance risks, and

potential version control issues. It violates Appian’s governance principles, as objects should be

owned and managed by their respective teams to ensure consistency and avoid conflicts. Appian’s

deployment best practices discourage duplicating objects unless absolutely necessary, making this an

unsustainable and risky solution.

B . Halt the production deployment and contact the other team for guidance on promoting the object

to PROD:

This is the correct step. When an object from another application (owned by a separate team) is a

dependency, Appian’s deployment process requires coordination to ensure both applications’

objects are deployed in sync. Halting the deployment prevents partial deployments that could break

functionality, and contacting the other team aligns with Appian’s collaboration and governance

guidelines. The other team can provide the necessary object version, adjust their deployment

timeline, or resolve the dependency, ensuring a stable PROD environment.

C . Check the dependencies of the necessary object. Deploy to PROD if there are few dependencies

and it is low risk:

This approach risks deploying an incomplete or unstable application if the dependency isn’t fully

resolved. Even with “few dependencies” and “low risk,” deploying without the other team’s object

could lead to runtime errors or broken functionality in PROD. Appian’s documentation emphasizes

thorough dependency management during deployment, requiring all objects (including those from

other applications) to be promoted together, making this risky and not recommended.

D . Push a functionally viable package to PROD without the dependencies, and plan the rest of the

deployment accordingly with the other team’s constraints:

Deploying without dependencies creates an incomplete solution, potentially leaving the application

non-functional or unstable in PROD. Appian’s deployment process ensures all dependencies are

included to maintain application integrity, and partial deployments are discouraged unless explicitly

planned (e.g., phased rollouts). This option delays resolution and increases risk, contradicting

Appian’s best practices for Production stability.

Conclusion: Halting the production deployment and contacting the other team for guidance (B) is the

next step. It ensures proper collaboration, aligns with Appian’s governance model, and prevents

deployment errors, providing a safe and effective resolution.

Reference:

Appian Documentation: "Deployment Best Practices" (Managing Dependencies Across Applications).

Appian Lead Developer Certification: Application Management Module (Cross-Team Collaboration).

Appian Best Practices: "Handling Production Deployments" (Dependency Resolution).

Question 4

You need to design a complex Appian integration to call a RESTful API. The RESTful API will be used

to update a case in a customer’s legacy system.

What are three prerequisites for designing the integration?

- A. Define the HTTP method that the integration will use.

- B. Understand the content of the expected body, including each field type and their limits.

- C. Understand whether this integration will be used in an interface or in a process model.

- D. Understand the different error codes managed by the API and the process of error handling in Appian.

- E. Understand the business rules to be applied to ensure the business logic of the data.

Answer:

A, B, D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, designing a complex integration to a RESTful API for updating a case in

a legacy system requires a structured approach to ensure reliability, performance, and alignment

with business needs. The integration involves sending a JSON payload (implied by the context) and

handling responses, so the focus is on technical and functional prerequisites. Let’s evaluate each

option:

A . Define the HTTP method that the integration will use:

This is a primary prerequisite. RESTful APIs use HTTP methods (e.g., POST, PUT, GET) to define the

operation—here, updating a case likely requires PUT or POST. Appian’s Connected System and

Integration objects require specifying the method to configure the HTTP request correctly.

Understanding the API’s method ensures the integration aligns with its design, making this essential

for design. Appian’s documentation emphasizes choosing the correct HTTP method as a foundational

step.

B . Understand the content of the expected body, including each field type and their limits:

This is also critical. The JSON payload for updating a case includes fields (e.g., text, dates, numbers),

and the API expects a specific structure with field types (e.g., string, integer) and limits (e.g., max

length, size constraints). In Appian, the Integration object requires a dictionary or CDT to construct

the body, and mismatches (e.g., wrong types, exceeding limits) cause errors (e.g., 400 Bad Request).

Appian’s best practices mandate understanding the API schema to ensure data compatibility, making

this a key prerequisite.

C . Understand whether this integration will be used in an interface or in a process model:

While knowing the context (interface vs. process model) is useful for design (e.g., synchronous vs.

asynchronous calls), it’s not a prerequisite for the integration itself—it’s a usage consideration.

Appian supports integrations in both contexts, and the integration’s design (e.g., HTTP method,

body) remains the same. This is secondary to technical API details, so it’s not among the top three

prerequisites.

D . Understand the different error codes managed by the API and the process of error handling in

Appian:

This is essential. RESTful APIs return HTTP status codes (e.g., 200 OK, 400 Bad Request, 500 Internal

Server Error), and the customer’s API likely documents these for failure scenarios (e.g., invalid data,

server issues). Appian’s Integration objects can handle errors via error mappings or process models,

and understanding these codes ensures robust error handling (e.g., retry logic, user notifications).

Appian’s documentation stresses error handling as a core design element for reliable integrations,

making this a primary prerequisite.

E . Understand the business rules to be applied to ensure the business logic of the data:

While business rules (e.g., validating case data before sending) are important for the overall

application, they aren’t a prerequisite for designing the integration itself—they’re part of the

application logic (e.g., process model or interface). The integration focuses on technical interaction

with the API, not business validation, which can be handled separately in Appian. This is a secondary

concern, not a core design requirement for the integration.

Conclusion: The three prerequisites are A (define the HTTP method), B (understand the body content

and limits), and D (understand error codes and handling). These ensure the integration is technically

sound, compatible with the API, and resilient to errors—critical for a complex RESTful API integration

in Appian.

Reference:

Appian Documentation: "Designing REST Integrations" (HTTP Methods, Request Body, Error

Handling).

Appian Lead Developer Certification: Integration Module (Prerequisites for Complex Integrations).

Appian Best Practices: "Building Reliable API Integrations" (Payload and Error Management).

To design a complex Appian integration to call a RESTful API, you need to have some prerequisites,

such as:

Define the HTTP method that the integration will use. The HTTP method is the action that the

integration will perform on the API, such as GET, POST, PUT, PATCH, or DELETE. The HTTP method

determines how the data will be sent and received by the API, and what kind of response will be

expected.

Understand the content of the expected body, including each field type and their limits. The body is

the data that the integration will send to the API, or receive from the API, depending on the HTTP

method. The body can be in different formats, such as JSON, XML, or form data. You need to

understand how to structure the body according to the API specification, and what kind of data types

and values are allowed for each field.

Understand the different error codes managed by the API and the process of error handling in

Appian. The error codes are the status codes that indicate whether the API request was successful or

not, and what kind of problem occurred if not. The error codes can range from 200 (OK) to 500

(Internal Server Error), and each code has a different meaning and implication. You need to

understand how to handle different error codes in Appian, and how to display meaningful messages

to the user or log them for debugging purposes.

The other two options are not prerequisites for designing the integration, but rather considerations

for implementing it.

Understand whether this integration will be used in an interface or in a process model. This is not a

prerequisite, but rather a decision that you need to make based on your application requirements

and design. You can use an integration either in an interface or in a process model, depending on

where you need to call the API and how you want to handle the response. For example, if you need

to update a case in real-time based on user input, you may want to use an integration in an interface.

If you need to update a case periodically based on a schedule or an event, you may want to use an

integration in a process model.

Understand the business rules to be applied to ensure the business logic of the data. This is not a

prerequisite, but rather a part of your application logic that you need to implement after designing

the integration. You need to apply business rules to validate, transform, or enrich the data that you

send or receive from the API, according to your business requirements and logic. For example, you

may need to check if the case status is valid before updating it in the legacy system, or you may need

to add some additional information to the case data before displaying it in Appian.

Question 5

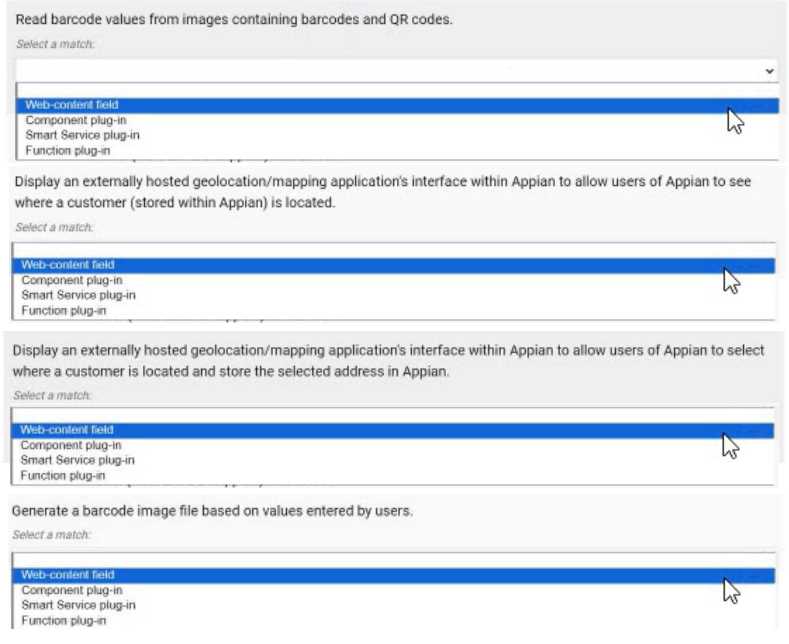

HOTSPOT

For each requirement, match the most appropriate approach to creating or utilizing plug-ins Each

approach will be used once.

Note: To change your responses, you may deselect your response by clicking the blank space at the

top of the selection list.

Answer:

Explanation:

Read barcode values from images containing barcodes and QR codes. → Smart Service plug-in

Display an externally hosted geolocation/mapping application’s interface within Appian to allow

users of Appian to see where a customer (stored within Appian) is located. → Web-content field

Display an externally hosted geolocation/mapping application’s interface within Appian to allow

users of Appian to select where a customer is located and store the selected address in Appian. →

Component plug-in

Generate a barcode image file based on values entered by users. → Function plug-in

Comprehensive and Detailed In-Depth Explanation:

Appian plug-ins extend functionality by integrating custom Java code into the platform. The four

approaches—Web-content field, Component plug-in, Smart Service plug-in, and Function plug-in—

serve distinct purposes, and each requirement must be matched to the most appropriate one based

on its use case. Appian’s Plug-in Development Guide provides the framework for these decisions.

Read barcode values from images containing barcodes and QR codes → Smart Service plug-in:

This requirement involves processing image data to extract barcode or QR code values, a task that

typically occurs within a process model (e.g., as part of a workflow). A Smart Service plug-in is ideal

because it allows custom Java logic to be executed as a node in a process, enabling the decoding of

images and returning the extracted values to Appian. This approach integrates seamlessly with

Appian’s process automation, making it the best fit for data extraction tasks.

Display an externally hosted geolocation/mapping application’s interface within Appian to allow

users of Appian to see where a customer (stored within Appian) is located → Web-content field:

This requires embedding an external mapping interface (e.g., Google Maps) within an Appian

interface. A Web-content field is the appropriate choice, as it allows you to embed HTML, JavaScript,

or iframe content from an external source directly into an Appian form or report. This approach is

lightweight and does not require custom Java development, aligning with Appian’s recommendation

for displaying external content without interactive data storage.

Display an externally hosted geolocation/mapping application’s interface within Appian to allow

users of Appian to select where a customer is located and store the selected address in Appian →

Component plug-in:

This extends the previous requirement by adding interactivity (selecting an address) and data

storage. A Component plug-in is suitable because it enables the creation of a custom interface

component (e.g., a map selector) that can be embedded in Appian interfaces. The plug-in can handle

user interactions, communicate with the external mapping service, and update Appian data stores,

offering a robust solution for interactive external integrations.

Generate a barcode image file based on values entered by users → Function plug-in:

This involves generating an image file dynamically based on user input, a task that can be executed

within an expression or interface. A Function plug-in is the best match, as it allows custom Java logic

to be called as an expression function (e.g., pluginGenerateBarcode(value)), returning the generated

image. This approach is efficient for single-purpose operations and integrates well with Appian’s

expression-based design.

Matching Rationale:

Each approach is used once, as specified, covering the spectrum of plug-in types: Smart Service for

process-level tasks, Web-content field for static external display, Component plug-in for interactive

components, and Function plug-in for expression-level operations.

Appian’s plug-in framework discourages overlap (e.g., using a Smart Service for display or a

Component for process tasks), ensuring the selected matches align with intended use cases.

Reference: Appian Documentation - Plug-in Development Guide, Appian Interface Design Best

Practices, Appian Lead Developer Training - Custom Integrations.

Question 6

You have 5 applications on your Appian platform in Production. Users are now beginning to use

multiple applications across the platform, and the client wants to ensure a consistent user

experience across all applications.

You notice that some applications use rich text, some use section layouts, and others use box layouts.

The result is that each application has a different color and size for the header.

What would you recommend to ensure consistency across the platform?

- A. Create constants for text size and color, and update each section to reference these values.

- B. In the common application, create a rule that can be used across the platform for section headers, and update each application to reference this new rule.

- C. In the common application, create one rule for each application, and update each application to reference its respective rule.

- D. In each individual application, create a rule that can be used for section headers, and update each application to reference its respective rule.

Answer:

B

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, ensuring a consistent user experience across multiple applications on

the Appian platform involves centralizing reusable components and adhering to Appian’s design

governance principles. The client’s concern about inconsistent headers (e.g., different colors, sizes,

layouts) across applications using rich text, section layouts, and box layouts requires a scalable,

maintainable solution. Let’s evaluate each option:

A . Create constants for text size and color, and update each section to reference these values:

Using constants (e.g., cons!TEXT_SIZE and cons!HEADER_COLOR) is a good practice for managing

values, but it doesn’t address layout consistency (e.g., rich text vs. section layouts vs. box layouts).

Constants alone can’t enforce uniform header design across applications, as they don’t encapsulate

layout logic (e.g., a!sectionLayout() vs. a!richTextDisplayField()). This approach would require manual

updates to each application’s components, increasing maintenance overhead and still risking

inconsistency. Appian’s documentation recommends using rules for reusable UI components, not just

constants, making this insufficient.

B . In the common application, create a rule that can be used across the platform for section headers,

and update each application to reference this new rule:

This is the best recommendation. Appian supports a “common application” (often called a shared or

utility application) to store reusable objects like expression rules, which can define consistent header

designs (e.g., rule!CommonHeader(size: "LARGE", color: "PRIMARY")). By creating a single rule for

headers and referencing it across all 5 applications, you ensure uniformity in layout, color, and size

(e.g., using a!sectionLayout() or a!boxLayout() consistently). Appian’s design best practices

emphasize centralizing UI components in a common application to reduce duplication, enforce

standards, and simplify maintenance—perfect for achieving a consistent user experience.

C . In the common application, create one rule for each application, and update each application to

reference its respective rule:

This approach creates separate header rules for each application (e.g., rule!App1Header,

rule!App2Header), which contradicts the goal of consistency. While housed in the common

application, it introduces variability (e.g., different colors or sizes per rule), defeating the purpose.

Appian’s governance guidelines advocate for a single, shared rule to maintain uniformity, making

this less efficient and unnecessary.

D . In each individual application, create a rule that can be used for section headers, and update each

application to reference its respective rule:

Creating separate rules in each application (e.g., rule!App1Header in App 1, rule!App2Header in App

2) leads to duplication and inconsistency, as each rule could differ in design. This approach increases

maintenance effort and risks diverging styles, violating the client’s requirement for a “consistent user

experience.” Appian’s best practices discourage duplicating UI logic, favoring centralized rules in a

common application instead.

Conclusion: Creating a rule in the common application for section headers and referencing it across

the platform (B) ensures consistency in header design (color, size, layout) while minimizing

duplication and maintenance. This leverages Appian’s application architecture for shared objects,

aligning with Lead Developer standards for UI governance.

Reference:

Appian Documentation: "Designing for Consistency Across Applications" (Common Application Best

Practices).

Appian Lead Developer Certification: UI Design Module (Reusable Components and Rules).

Appian Best Practices: "Maintaining User Experience Consistency" (Centralized UI Rules).

The best way to ensure consistency across the platform is to create a rule that can be used across the

platform for section headers. This rule can be created in the common application, and then each

application can be updated to reference this rule. This will ensure that all of the applications use the

same color and size for the header, which will provide a consistent user experience.

The other options are not as effective. Option A, creating constants for text size and color, and

updating each section to reference these values, would require updating each section in each

application. This would be a lot of work, and it would be easy to make mistakes. Option C, creating

one rule for each application, would also require updating each application. This would be less work

than option A, but it would still be a lot of work, and it would be easy to make mistakes. Option D,

creating a rule in each individual application, would not ensure consistency across the platform. Each

application would have its own rule, and the rules could be different. This would not provide a

consistent user experience.

Best Practices:

When designing a platform, it is important to consider the user experience. A consistent user

experience will make it easier for users to learn and use the platform.

When creating rules, it is important to use them consistently across the platform. This will ensure

that the platform has a consistent look and feel.

When updating the platform, it is important to test the changes to ensure that they do not break the

user experience.

Question 7

You are tasked to build a large-scale acquisition application for a prominent customer. The acquisition

process tracks the time it takes to fulfill a purchase request with an award.

The customer has structured the contract so that there are multiple application development teams.

How should you design for multiple processes and forms, while minimizing repeated code?

- A. Create a Center of Excellence (CoE).

- B. Create a common objects application.

- C. Create a Scrum of Scrums sprint meeting for the team leads.

- D. Create duplicate processes and forms as needed.

Answer:

B

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, designing a large-scale acquisition application with multiple

development teams requires a strategy to manage processes, forms, and code reuse effectively. The

goal is to minimize repeated code (e.g., duplicate interfaces, process models) while ensuring

scalability and maintainability across teams. Let’s evaluate each option:

A . Create a Center of Excellence (CoE):

A Center of Excellence is an organizational structure or team focused on standardizing practices,

training, and governance across projects. While beneficial for long-term consistency, it doesn’t

directly address the technical design of minimizing repeated code for processes and forms. It’s a

strategic initiative, not a design solution, and doesn’t solve the immediate need for code reuse.

Appian’s documentation mentions CoEs for governance but not as a primary design approach,

making this less relevant here.

B . Create a common objects application:

This is the best recommendation. In Appian, a “common objects application” (or shared application)

is used to store reusable components like expression rules, interfaces, process models, constants,

and data types (e.g., CDTs). For a large-scale acquisition application with multiple teams, centralizing

shared objects (e.g., rule!CommonForm, pm!CommonProcess) ensures consistency, reduces

duplication, and simplifies maintenance. Teams can reference these objects in their applications,

adhering to Appian’s design best practices for scalability. This approach minimizes repeated code

while allowing team-specific customizations, aligning with Lead Developer standards for large

projects.

C . Create a Scrum of Scrums sprint meeting for the team leads:

A Scrum of Scrums meeting is a coordination mechanism for Agile teams, focusing on aligning sprint

goals and resolving cross-team dependencies. While useful for collaboration, it doesn’t address the

technical design of minimizing repeated code—it’s a process, not a solution for code reuse. Appian’s

Agile methodologies support such meetings, but they don’t directly reduce duplication in processes

and forms, making this less applicable.

D . Create duplicate processes and forms as needed:

Duplicating processes and forms (e.g., copying interface!PurchaseForm for each team) leads to

redundancy, increased maintenance effort, and potential inconsistencies (e.g., divergent logic). This

contradicts the goal of minimizing repeated code and violates Appian’s design principles for

reusability and efficiency. Appian’s documentation strongly discourages duplication, favoring shared

objects instead, making this the least effective option.

Conclusion: Creating a common objects application (B) is the recommended design. It centralizes

reusable processes, forms, and other components, minimizing code duplication across teams while

ensuring consistency and scalability for the large-scale acquisition application. This leverages

Appian’s application architecture for shared resources, aligning with Lead Developer best practices

for multi-team projects.

Reference:

Appian Documentation: "Designing Large-Scale Applications" (Common Application for Reusable

Objects).

Appian Lead Developer Certification: Application Design Module (Minimizing Code Duplication).

Appian Best Practices: "Managing Multi-Team Development" (Shared Objects Strategy).

To build a large scale acquisition application for a prominent customer, you should design for

multiple processes and forms, while minimizing repeated code. One way to do this is to create a

common objects application, which is a shared application that contains reusable components, such

as rules, constants, interfaces, integrations, or data types, that can be used by multiple applications.

This way, you can avoid duplication and inconsistency of code, and make it easier to maintain and

update your applications. You can also use the common objects application to define common

standards and best practices for your application development teams, such as naming conventions,

coding styles, or documentation guidelines. Verified Reference: [Appian Best Practices], [Appian

Design Guidance]

Question 8

HOTSPOT

For each scenario outlined, match the best tool to use to meet expectations. Each tool will be used

once

Note: To change your responses, you may deselected your response by clicking the blank space at the

top of the selection list.

Answer:

Explanation:

As a user, if I update an object of type "Customer", the value of the given field should be displayed on

the "Company" Record List. → Database Complex View

As a user, if I update an object of type "Customer", a simple data transformation needs to be

performed on related objects of the same type (namely, all the customers related to the same

company). → Database Trigger

As a user, if I update an object of type "Customer", some complex data transformations need to be

performed on related objects of type "Customer", "Company", and "Contract". → Database Stored

Procedure

As a user, if I update an object of type "Customer", some simple data transformations need to be

performed on related objects of type "Company", "Address", and "Contract". → Write to Data Store

Entity smart service

Comprehensive and Detailed In-Depth Explanation:

Appian integrates with external databases to handle data updates and transformations, offering

various tools depending on the complexity and context of the task. The scenarios involve updating a

"Customer" object and triggering actions on related data, requiring careful selection of the best tool.

Appian’s Data Integration and Database Management documentation guides these decisions.

As a user, if I update an object of type "Customer", the value of the given field should be displayed on

the "Company" Record List → Database Complex View:

This scenario requires displaying updated customer data on a "Company" Record List, implying a

read-only operation to join or aggregate data across tables. A Database Complex View (e.g., a SQL

view combining "Customer" and "Company" tables) is ideal for this. Appian supports complex views

to predefine queries that can be used in Record Lists, ensuring the updated field value is reflected

without additional processing. This tool is best for read operations and does not involve write logic.

As a user, if I update an object of type "Customer", a simple data transformation needs to be

performed on related objects of the same type (namely, all the customers related to the same

company) → Database Trigger:

This involves a simple transformation (e.g., updating a flag or counter) on related "Customer" records

after an update. A Database Trigger, executed automatically on the database side when a "Customer"

record is modified, is the best fit. It can perform lightweight SQL updates on related records (e.g., via

a company ID join) without Appian process overhead. Appian recommends triggers for simple,

database-level automation, especially when transformations are confined to the same table type.

As a user, if I update an object of type "Customer", some complex data transformations need to be

performed on related objects of type "Customer", "Company", and "Contract" → Database Stored

Procedure:

This scenario involves complex transformations across multiple related object types, suggesting

multi-step logic (e.g., recalculating totals or updating multiple tables). A Database Stored Procedure

allows you to encapsulate this logic in SQL, callable from Appian, offering flexibility for complex

operations. Appian supports stored procedures for scenarios requiring transactional integrity and

intricate data manipulation across tables, making it the best choice here.

As a user, if I update an object of type "Customer", some simple data transformations need to be

performed on related objects of type "Company", "Address", and "Contract" → Write to Data Store

Entity smart service:

This requires simple transformations on related objects, which can be handled within Appian’s

process model. The "Write to Data Store Entity" smart service allows you to update multiple related

entities (e.g., "Company", "Address", "Contract") based on the "Customer" update, using Appian’s

expression rules for logic. This approach leverages Appian’s process automation, is user-friendly for

developers, and is recommended for straightforward updates within the Appian environment.

Matching Rationale:

Each tool is used once, covering the spectrum of database integration options: Database Complex

View for read/display, Database Trigger for simple database-side automation, Database Stored

Procedure for complex multi-table logic, and Write to Data Store Entity smart service for Appian-

managed simple updates.

Appian’s guidelines prioritize using the right tool based on complexity and context, ensuring

efficiency and maintainability.

Reference: Appian Documentation - Data Integration and Database Management, Appian Process

Model Guide - Smart Services, Appian Lead Developer Training - Database Optimization.

Question 9

You are required to create an integration from your Appian Cloud instance to an application hosted

within a customer’s self-managed environment.

The customer’s IT team has provided you with a REST API endpoint to test with:

https://internal.network/api/api/ping.

Which recommendation should you make to progress this integration?

- A. Expose the API as a SOAP-based web service.

- B. Deploy the API/service into Appian Cloud.

- C. Add Appian Cloud’s IP address ranges to the customer network’s allowed IP listing.

- D. Set up a VPN tunnel.

Answer:

D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, integrating an Appian Cloud instance with a customer’s self-managed

(on-premises) environment requires addressing network connectivity, security, and Appian’s cloud

architecture constraints. The provided endpoint (https://internal.network/api/api/ping) is a REST API

on an internal network, inaccessible directly from Appian Cloud due to firewall restrictions and lack

of public exposure. Let’s evaluate each option:

A . Expose the API as a SOAP-based web service:

Converting the REST API to SOAP isn’t a practical recommendation. The customer has provided a

REST endpoint, and Appian fully supports REST integrations via Connected Systems and Integration

objects. Changing the API to SOAP adds unnecessary complexity, development effort, and risks for

the customer, with no benefit to Appian’s integration capabilities. Appian’s documentation

emphasizes using the API’s native format (REST here), making this irrelevant.

B . Deploy the API/service into Appian Cloud:

Deploying the customer’s API into Appian Cloud is infeasible. Appian Cloud is a managed PaaS

environment, not designed to host customer applications or APIs. The API resides in the customer’s

self-managed environment, and moving it would require significant architectural changes, violating

security and operational boundaries. Appian’s integration strategy focuses on connecting to external

systems, not hosting them, ruling this out.

C . Add Appian Cloud’s IP address ranges to the customer network’s allowed IP listing:

This approach involves whitelisting Appian Cloud’s IP ranges (available in Appian documentation) in

the customer’s firewall to allow direct HTTP/HTTPS requests. However, Appian Cloud’s IPs are

dynamic and shared across tenants, making this unreliable for long-term integrations—changes in IP

ranges could break connectivity. Appian’s best practices discourage relying on IP whitelisting for

cloud-to-on-premises integrations due to this limitation, favoring secure tunnels instead.

D . Set up a VPN tunnel:

This is the correct recommendation. A Virtual Private Network (VPN) tunnel establishes a secure,

encrypted connection between Appian Cloud and the customer’s self-managed network, allowing

Appian to access the internal REST API (https://internal.network/api/api/ping). Appian supports

VPNs for cloud-to-on-premises integrations, and this approach ensures reliability, security, and

compliance with network policies. The customer’s IT team can configure the VPN, and Appian’s

documentation recommends this for such scenarios, especially when dealing with internal

endpoints.

Conclusion: Setting up a VPN tunnel (D) is the best recommendation. It enables secure, reliable

connectivity from Appian Cloud to the customer’s internal API, aligning with Appian’s integration

best practices for cloud-to-on-premises scenarios.

Reference:

Appian Documentation: "Integrating Appian Cloud with On-Premises Systems" (VPN and Network

Configuration).

Appian Lead Developer Certification: Integration Module (Cloud-to-On-Premises Connectivity).

Appian Best Practices: "Securing Integrations with Legacy Systems" (VPN Recommendations).

Question 10

You have an active development team (Team A) building enhancements for an application (App X)

and are currently using the TEST environment for User Acceptance Testing (UAT).

A separate operations team (Team B) discovers a critical error in the Production instance of App X

that they must remediate. However, Team B does not have a hotfix stream for which to accomplish

this. The available environments are DEV, TEST, and PROD.

Which risk mitigation effort should both teams employ to ensure Team A’s capital project is only

minorly interrupted, and Team B’s critical fix can be completed and deployed quickly to end users?

- A. Team B must communicate to Team A which component will be addressed in the hotfix to avoid overlap of changes. If overlap exists, the component must be versioned to its PROD state before being remediated and deployed, and then versioned back to its latest development state. If overlap does not exist, the component may be remediated and deployed without any version changes.

- B. Team A must analyze their current codebase in DEV to merge the hotfix changes into their latest enhancements. Team B is then required to wait for the hotfix to follow regular deployment protocols from DEV to the PROD environment.

- C. Team B must address changes in the TEST environment. These changes can then be tested and deployed directly to PROD. Once the deployment is complete, Team B can then communicate their changes to Team A to ensure they are incorporated as part of the next release.

- D. Team B must address the changes directly in PROD. As there is no hotfix stream, and DEV and TEST are being utilized for active development, it is best to avoid a conflict of components. Once Team A has completed their enhancements work, Team B can update DEV and TEST accordingly.

Answer:

A

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, managing concurrent development and operations (hotfix) activities

across limited environments (DEV, TEST, PROD) requires minimizing disruption to Team A’s

enhancements while ensuring Team B’s critical fix reaches PROD quickly. The scenario highlights no

hotfix stream, active UAT in TEST, and a critical PROD issue, necessitating a strategic approach. Let’s

evaluate each option:

A . Team B must communicate to Team A which component will be addressed in the hotfix to avoid

overlap of changes. If overlap exists, the component must be versioned to its PROD state before

being remediated and deployed, and then versioned back to its latest development state. If overlap

does not exist, the component may be remediated and deployed without any version changes:

This is the best approach. It ensures collaboration between teams to prevent conflicts, leveraging

Appian’s version control (e.g., object versioning in Appian Designer). Team B identifies the critical

component, checks for overlap with Team A’s work, and uses versioning to isolate changes. If no

overlap exists, the hotfix deploys directly; if overlap occurs, versioning preserves Team A’s work,

allowing the hotfix to deploy and then reverting the component for Team A’s continuation. This

minimizes interruption to Team A’s UAT, enables rapid PROD deployment, and aligns with Appian’s

change management best practices.

B . Team A must analyze their current codebase in DEV to merge the hotfix changes into their latest

enhancements. Team B is then required to wait for the hotfix to follow regular deployment protocols

from DEV to the PROD environment:

This delays Team B’s critical fix, as regular deployment (DEV → TEST → PROD) could take weeks,

violating the need for “quick deployment to end users.” It also risks introducing Team A’s untested

enhancements into the hotfix, potentially destabilizing PROD. Appian’s documentation discourages

mixing development and hotfix workflows, favoring isolated changes for urgent fixes, making this

inefficient and risky.

C . Team B must address changes in the TEST environment. These changes can then be tested and

deployed directly to PROD. Once the deployment is complete, Team B can then communicate their

changes to Team A to ensure they are incorporated as part of the next release:

Using TEST for hotfix development disrupts Team A’s UAT, as TEST is already in use for their

enhancements. Direct deployment from TEST to PROD skips DEV validation, increasing risk, and

doesn’t address overlap with Team A’s work. Appian’s deployment guidelines emphasize separate

streams (e.g., hotfix streams) to avoid such conflicts, making this disruptive and unsafe.

D . Team B must address the changes directly in PROD. As there is no hotfix stream, and DEV and

TEST are being utilized for active development, it is best to avoid a conflict of components. Once

Team A has completed their enhancements work, Team B can update DEV and TEST accordingly:

Making changes directly in PROD is highly discouraged in Appian due to lack of testing, version

control, and rollback capabilities, risking further instability. This violates Appian’s Production

governance and security policies, and delays Team B’s updates until Team A finishes, contradicting

the need for a “quick deployment.” Appian’s best practices mandate using lower environments for

changes, ruling this out.

Conclusion: Team B communicating with Team A, versioning components if needed, and deploying

the hotfix (A) is the risk mitigation effort. It ensures minimal interruption to Team A’s work, rapid

PROD deployment for Team B’s fix, and leverages Appian’s versioning for safe, controlled changes—

aligning with Lead Developer standards for multi-team coordination.

Reference:

Appian Documentation: "Managing Production Hotfixes" (Versioning and Change Management).

Appian Lead Developer Certification: Application Management Module (Hotfix Strategies).

Appian Best Practices: "Concurrent Development and Operations" (Minimizing Risk in Limited

Environments).

Question 11

As part of an upcoming release of an application, a new nullable field is added to a table that

contains customer dat

a. The new field is used by a report in the upcoming release and is calculated using data from

another table.

Which two actions should you consider when creating the script to add the new field?

- A. Create a script that adds the field and leaves it null.

- B. Create a rollback script that removes the field.

- C. Create a script that adds the field and then populates it.

- D. Create a rollback script that clears the data from the field.

- E. Add a view that joins the customer data to the data used in calculation.

Answer:

B, C

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, adding a new nullable field to a database table for an upcoming

release requires careful planning to ensure data integrity, report functionality, and rollback capability.

The field is used in a report and calculated from another table, so the script must handle both

deployment and potential reversibility. Let’s evaluate each option:

A . Create a script that adds the field and leaves it null:

Adding a nullable field and leaving it null is technically feasible (e.g., using ALTER TABLE ADD

COLUMN in SQL), but it doesn’t address the report’s need for calculated data. Since the field is used

in a report and calculated from another table, leaving it null risks incomplete or incorrect reporting

until populated, delaying functionality. Appian’s data management best practices recommend

populating data during deployment for immediate usability, making this insufficient as a standalone

action.

B . Create a rollback script that removes the field:

This is a critical action. In Appian, database changes (e.g., adding a field) must be reversible in case of

deployment failure or rollback needs (e.g., during testing or PROD issues). A rollback script that

removes the field (e.g., ALTER TABLE DROP COLUMN) ensures the database can return to its original

state, minimizing risk. Appian’s deployment guidelines emphasize rollback scripts for schema

changes, making this essential for safe releases.

C . Create a script that adds the field and then populates it:

This is also essential. Since the field is nullable, calculated from another table, and used in a report,

populating it during deployment ensures immediate functionality. The script can use SQL (e.g.,

UPDATE table SET new_field = (SELECT calculated_value FROM other_table WHERE condition)) to

populate data, aligning with Appian’s data fabric principles for maintaining data consistency.

Appian’s documentation recommends populating new fields during deployment for reporting

accuracy, making this a key action.

D . Create a rollback script that clears the data from the field:

Clearing data (e.g., UPDATE table SET new_field = NULL) is less effective than removing the field

entirely. If the deployment fails, the field’s existence with null values could confuse reports or

processes, requiring additional cleanup. Appian’s rollback strategies favor reverting schema changes

completely (removing the field) rather than leaving it with nulls, making this less reliable and

unnecessary compared to B.

E . Add a view that joins the customer data to the data used in calculation:

Creating a view (e.g., CREATE VIEW customer_report AS SELECT ... FROM customer_table JOIN

other_table ON ...) is useful for reporting but isn’t a prerequisite for adding the field. The scenario

focuses on the field addition and population, not reporting structure. While a view could optimize

queries, it’s a secondary step, not a primary action for the script itself. Appian’s data modeling best

practices suggest views as post-deployment optimizations, not script requirements.

Conclusion: The two actions to consider are B (create a rollback script that removes the field) and C

(create a script that adds the field and then populates it). These ensure the field is added with data

for immediate report usability and provide a safe rollback option, aligning with Appian’s deployment

and data management standards for schema changes.

Reference:

Appian Documentation: "Database Schema Changes" (Adding Fields and Rollback Scripts).

Appian Lead Developer Certification: Data Management Module (Schema Deployment Strategies).

Appian Best Practices: "Managing Data Changes in Production" (Populating and Rolling Back Fields).

Question 12

You are the lead developer for an Appian project, in a backlog refinement meeting. You are

presented with the following user story:

“As a restaurant customer, I need to be able to place my food order online to avoid waiting in line for

takeout.”

Which two functional acceptance criteria would you consider ‘good’?

- A. The user will click Save, and the order information will be saved in the ORDER table and have audit history.

- B. The user will receive an email notification when their order is completed.

- C. The system must handle up to 500 unique orders per day.

- D. The user cannot submit the form without filling out all required fields.

Answer:

A, D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, defining “good” functional acceptance criteria for a user story requires

ensuring they are specific, testable, and directly tied to the user’s need (placing an online food order

to avoid waiting in line). Good criteria focus on functionality, usability, and reliability, aligning with

Appian’s Agile and design best practices. Let’s evaluate each option:

A . The user will click Save, and the order information will be saved in the ORDER table and have

audit history:

This is a “good” criterion. It directly validates the core functionality of the user story—placing an

order online. Saving order data in the ORDER table (likely via a process model or Data Store Entity)

ensures persistence, and audit history (e.g., using Appian’s audit logs or database triggers) tracks

changes, supporting traceability and compliance. This is specific, testable (e.g., verify data in the

table and logs), and essential for the user’s goal, aligning with Appian’s data management and user

experience guidelines.

B . The user will receive an email notification when their order is completed:

While useful, this is a “nice-to-have” enhancement, not a core requirement of the user story. The

story focuses on placing an order online to avoid waiting, not on completion notifications. Email

notifications add value but aren’t essential for validating the primary functionality. Appian’s user

story best practices prioritize criteria tied to the main user need, making this secondary and not

“good” in this context.

C . The system must handle up to 500 unique orders per day:

This is a non-functional requirement (performance/scalability), not a functional acceptance criterion.

It describes system capacity, not specific user behavior or functionality. While important for design,

it’s not directly testable for the user story’s outcome (placing an order) and isn’t tied to the user’s

experience. Appian’s Agile methodologies separate functional and non-functional requirements,

making this less relevant as a “good” criterion here.

D . The user cannot submit the form without filling out all required fields:

This is a “good” criterion. It ensures data integrity and usability by preventing incomplete orders,

directly supporting the user’s ability to place a valid online order. In Appian, this can be implemented

using form validation (e.g., required attributes in SAIL interfaces or process model validations),

making it specific, testable (e.g., verify form submission fails with missing fields), and critical for a

reliable user experience. This aligns with Appian’s UI design and user story validation standards.

Conclusion: The two “good” functional acceptance criteria are A (order saved with audit history) and

D (required fields enforced). These directly validate the user story’s functionality (placing a valid

order online), are testable, and ensure a reliable, user-friendly experience—aligning with Appian’s

Agile and design best practices for user stories.

Reference:

Appian Documentation: "Writing Effective User Stories and Acceptance Criteria" (Functional

Requirements).

Appian Lead Developer Certification: Agile Development Module (Acceptance Criteria Best

Practices).

Appian Best Practices: "Designing User Interfaces in Appian" (Form Validation and Data Persistence).

Question 13

You are designing a process that is anticipated to be executed multiple times a day. This process

retrieves data from an external system and then calls various utility processes as needed. The main

process will not use the results of the utility processes, and there are no user forms anywhere.

Which design choice should be used to start the utility processes and minimize the load on the

execution engines?

- A. Use the Start Process Smart Service to start the utility processes.

- B. Start the utility processes via a subprocess synchronously.

- C. Use Process Messaging to start the utility process.

- D. Start the utility processes via a subprocess asynchronously.

Answer:

D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, designing a process that executes frequently (multiple times a day)

and calls utility processes without using their results requires optimizing performance and

minimizing load on Appian’s execution engines. The absence of user forms indicates a backend

process, so user experience isn’t a concern—only engine efficiency matters. Let’s evaluate each

option:

A . Use the Start Process Smart Service to start the utility processes:

The Start Process Smart Service launches a new process instance independently, creating a separate

process in the Work Queue. While functional, it increases engine load because each utility process

runs as a distinct instance, consuming engine resources and potentially clogging the Java Work

Queue, especially with frequent executions. Appian’s performance guidelines discourage

unnecessary separate process instances for utility tasks, favoring integrated subprocesses, making

this less optimal.

B . Start the utility processes via a subprocess synchronously:

Synchronous subprocesses (e.g., a!startProcess with isAsync: false) execute within the main process

flow, blocking until completion. For utility processes not used by the main process, this creates

unnecessary delays, increasing execution time and engine load. With frequent daily executions,

synchronous subprocesses could strain engines, especially if utility processes are slow or numerous.

Appian’s documentation recommends asynchronous execution for non-dependent, non-blocking

tasks, ruling this out.

C . Use Process Messaging to start the utility process:

Process Messaging (e.g., sendMessage() in Appian) is used for inter-process communication, not for

starting processes. It’s designed to pass data between running processes, not initiate new ones.

Attempting to use it for starting utility processes would require additional setup (e.g., a listening

process) and isn’t a standard or efficient method. Appian’s messaging features are for coordination,

not process initiation, making this inappropriate.

D . Start the utility processes via a subprocess asynchronously:

This is the best choice. Asynchronous subprocesses (e.g., a!startProcess with isAsync: true) execute

independently of the main process, offloading work to the engine without blocking or delaying the

parent process. Since the main process doesn’t use the utility process results and there are no user

forms, asynchronous execution minimizes engine load by distributing tasks across time, reducing

Work Queue pressure during frequent executions. Appian’s performance best practices recommend

asynchronous subprocesses for non-dependent, utility tasks to optimize engine utilization, making

this ideal for minimizing load.

Conclusion: Starting the utility processes via a subprocess asynchronously (D) minimizes engine load

by allowing independent execution without blocking the main process, aligning with Appian’s

performance optimization strategies for frequent, backend processes.

Reference:

Appian Documentation: "Process Model Performance" (Synchronous vs. Asynchronous

Subprocesses).

Appian Lead Developer Certification: Process Design Module (Optimizing Engine Load).

Appian Best Practices: "Designing Efficient Utility Processes" (Asynchronous Execution).

Question 14

As part of your implementation workflow, users need to retrieve data stored in a third-party Oracle

database on an interface. You need to design a way to query this information.

How should you set up this connection and query the data?

- A. Configure a Query Database node within the process model. Then, type in the connection information, as well as a SQL query to execute and return the data in process variables.

- B. Configure a timed utility process that queries data from the third-party database daily, and stores it in the Appian business database. Then use a!queryEntity using the Appian data source to retrieve the data.

- C. Configure an expression-backed record type, calling an API to retrieve the data from the third- party database. Then, use a!queryRecordType to retrieve the data.

- D. In the Administration Console, configure the third-party database as a “New Data Source.” Then, use a!queryEntity to retrieve the data.

Answer:

D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, designing a solution to query data from a third-party Oracle database

for display on an interface requires secure, efficient, and maintainable integration. The scenario

focuses on real-time retrieval for users, so the design must leverage Appian’s data connectivity

features. Let’s evaluate each option:

A . Configure a Query Database node within the process model. Then, type in the connection

information, as well as a SQL query to execute and return the data in process variables:

The Query Database node (part of the Smart Services) allows direct SQL execution against a

database, but it requires manual connection details (e.g., JDBC URL, credentials), which isn’t scalable

or secure for Production. Appian’s documentation discourages using Query Database for ongoing

integrations due to maintenance overhead, security risks (e.g., hardcoding credentials), and lack of

governance. This is better for one-off tasks, not real-time interface queries, making it unsuitable.

B . Configure a timed utility process that queries data from the third-party database daily, and stores

it in the Appian business database. Then use a!queryEntity using the Appian data source to retrieve

the data:

This approach syncs data daily into Appian’s business database (e.g., via a timer event and Query

Database node), then queries it with a!queryEntity. While it works for stale data, it introduces

latency (up to 24 hours) for users, which doesn’t meet real-time needs on an interface. Appian’s best

practices recommend direct data source connections for up-to-date data, not periodic caching,

unless latency is acceptable—making this inefficient here.

C . Configure an expression-backed record type, calling an API to retrieve the data from the third-

party database. Then, use a!queryRecordType to retrieve the data:

Expression-backed record types use expressions (e.g., a!httpQuery()) to fetch data, but they’re

designed for external APIs, not direct database queries. The scenario specifies an Oracle database,

not an API, so this requires building a custom REST service on the Oracle side, adding complexity and

latency. Appian’s documentation favors Data Sources for database queries over API calls when direct

access is available, making this less optimal and over-engineered.

D . In the Administration Console, configure the third-party database as a “New Data Source.” Then,

use a!queryEntity to retrieve the data:

This is the best choice. In the Appian Administration Console, you can configure a JDBC Data Source

for the Oracle database, providing connection details (e.g., URL, driver, credentials). This creates a

secure, managed connection for querying via a!queryEntity, which is Appian’s standard function for

Data Store Entities. Users can then retrieve data on interfaces using expression-backed records or

queries, ensuring real-time access with minimal latency. Appian’s documentation recommends Data

Sources for database integrations, offering scalability, security, and governance—perfect for this

requirement.

Conclusion: Configuring the third-party database as a New Data Source and using a!queryEntity (D) is

the recommended approach. It provides direct, real-time access to Oracle data for interface display,

leveraging Appian’s native data connectivity features and aligning with Lead Developer best practices

for third-party database integration.

Reference:

Appian Documentation: "Configuring Data Sources" (JDBC Connections and a!queryEntity).

Appian Lead Developer Certification: Data Integration Module (Database Query Design).

Appian Best Practices: "Retrieving External Data in Interfaces" (Data Source vs. API Approaches).

Question 15

Users must be able to navigate throughout the application while maintaining complete visibility in

the application structure and easily navigate to previous locations. Which Appian Interface Pattern

would you recommend?

- A. Use Billboards as Cards pattern on the homepage to prominently display application choices.

- B. Implement an Activity History pattern to track an organization’s activity measures.

- C. Implement a Drilldown Report pattern to show detailed information about report data.

- D. Include a Breadcrumbs pattern on applicable interfaces to show the organizational hierarchy.

Answer:

D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

The requirement emphasizes navigation with complete visibility of the application structure and the

ability to return to previous locations easily. The Breadcrumbs pattern is specifically designed to

meet this need. According to Appian’s design best practices, the Breadcrumbs pattern provides a

visual trail of the user’s navigation path, showing the hierarchy of pages or sections within the

application. This allows users to understand their current location relative to the overall structure

and quickly navigate back to previous levels by clicking on the breadcrumb links.

Option A (Billboards as Cards): This pattern is useful for presenting high-level options or choices on a

homepage in a visually appealing way. However, it does not address navigation visibility or the ability

to return to previous locations, making it irrelevant to the requirement.

Option B (Activity History): This pattern tracks and displays a log of activities or actions within the

application, typically for auditing or monitoring purposes. It does not enhance navigation or provide

visibility into the application structure.

Option C (Drilldown Report): This pattern allows users to explore detailed data within reports by

drilling into specific records. While it supports navigation within data, it is not designed for general

application navigation or maintaining structural visibility.

Option D (Breadcrumbs): This is the correct choice as it directly aligns with the requirement. Per

Appian’s Interface Patterns documentation, Breadcrumbs improve usability by showing a hierarchical

path (e.g., Home > Section > Subsection) and enabling backtracking, fulfilling both visibility and

navigation needs.

Reference: Appian Design Guide - Interface Patterns (Breadcrumbs section), Appian Lead Developer

Training - User Experience Design Principles.